    [centos@k8s-01 ~]$ kubectl proxy --help
    Creates a proxy server or application-level gateway between localhost and the Kubernetes API Server. It also allows
    serving static content over specified HTTP path. All incoming data enters through one port and gets forwarded to the
    remote kubernetes API Server port, except for the path matching the static content path.

    Examples:
      # To proxy all of the kubernetes api and nothing else, use:
      $ kubectl proxy --api-prefix=/

      # To proxy only part of the kubernetes api and also some static files:
      $ kubectl proxy --www=/my/files --www-prefix=/static/ --api-prefix=/api/

      # The above lets you 'curl localhost:8001/api/v1/pods'.

      # To proxy the entire kubernetes api at a different root, use:
      $ kubectl proxy --api-prefix=/custom/

      # The above lets you 'curl localhost:8001/custom/api/v1/pods'

      # Run a proxy to kubernetes apiserver on port 8011, serving static content from ./local/www/
      kubectl proxy --port=8011 --www=./local/www/

      # Run a proxy to kubernetes apiserver on an arbitrary local port.
      # The chosen port for the server will be output to stdout.
      kubectl proxy --port=0

      # Run a proxy to kubernetes apiserver, changing the api prefix to k8s-api
      # This makes e.g. the pods api available at localhost:8001/k8s-api/v1/pods/
      kubectl proxy --api-prefix=/k8s-api

    Options:
          --accept-hosts='^localhost$,^127\.0\.0\.1$,^\[::1\]$': Regular expression for hosts that the proxy should accept.
          --accept-paths='^.*': Regular expression for paths that the proxy should accept.
          --address='127.0.0.1': The IP address on which to serve on.
          --api-prefix='/': Prefix to serve the proxied API under.
          --disable-filter=false: If true, disable request filtering in the proxy. This is dangerous, and can leave you
    vulnerable to XSRF attacks, when used with an accessible port.
          --keepalive=0s: keepalive specifies the keep-alive period for an active network connection. Set to 0 to disable
    keepalive.
      -p, --port=8001: The port on which to run the proxy. Set to 0 to pick a random port.
          --reject-methods='^$': Regular expression for HTTP methods that the proxy should reject (example
    --reject-methods='POST,PUT,PATCH').
          --reject-paths='^/api/.*/pods/.*/exec,^/api/.*/pods/.*/attach': Regular expression for paths that the proxy should
    reject. Paths specified here will be rejected even accepted by --accept-paths.
      -u, --unix-socket='': Unix socket on which to run the proxy.
      -w, --www='': Also serve static files from the given directory under the specified prefix.
      -P, --www-prefix='/static/': Prefix to serve static files under, if static file directory is specified.

    Usage:
      kubectl proxy [--port=PORT] [--www=static-dir] [--www-prefix=prefix] [--api-prefix=prefix] [options]

    Use "kubectl options" for a list of global command-line options (applies to all commands).
    [centos@k8s-01 ~]$

Download the Dashboard manifest

https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta4/aio/deploy/recommended.yaml

    [centos@k8s-01 ~]$ curl -O https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta4/aio/deploy/recommended.yaml
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100  7059  100  7059    0     0  43862      0 --:--:-- --:--:-- --:--:-- 44118
    [centos@k8s-01 ~]$
    [centos@k8s-01 ~]$ kubectl apply -f recommended.yaml
    namespace/kubernetes-dashboard created
    serviceaccount/kubernetes-dashboard created
    service/kubernetes-dashboard created
    secret/kubernetes-dashboard-certs created
    secret/kubernetes-dashboard-csrf created
    secret/kubernetes-dashboard-key-holder created
    configmap/kubernetes-dashboard-settings created
    role.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
    rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    deployment.apps/kubernetes-dashboard created
    service/dashboard-metrics-scraper created
    deployment.apps/dashboard-metrics-scraper created
    [centos@k8s-01 ~]$

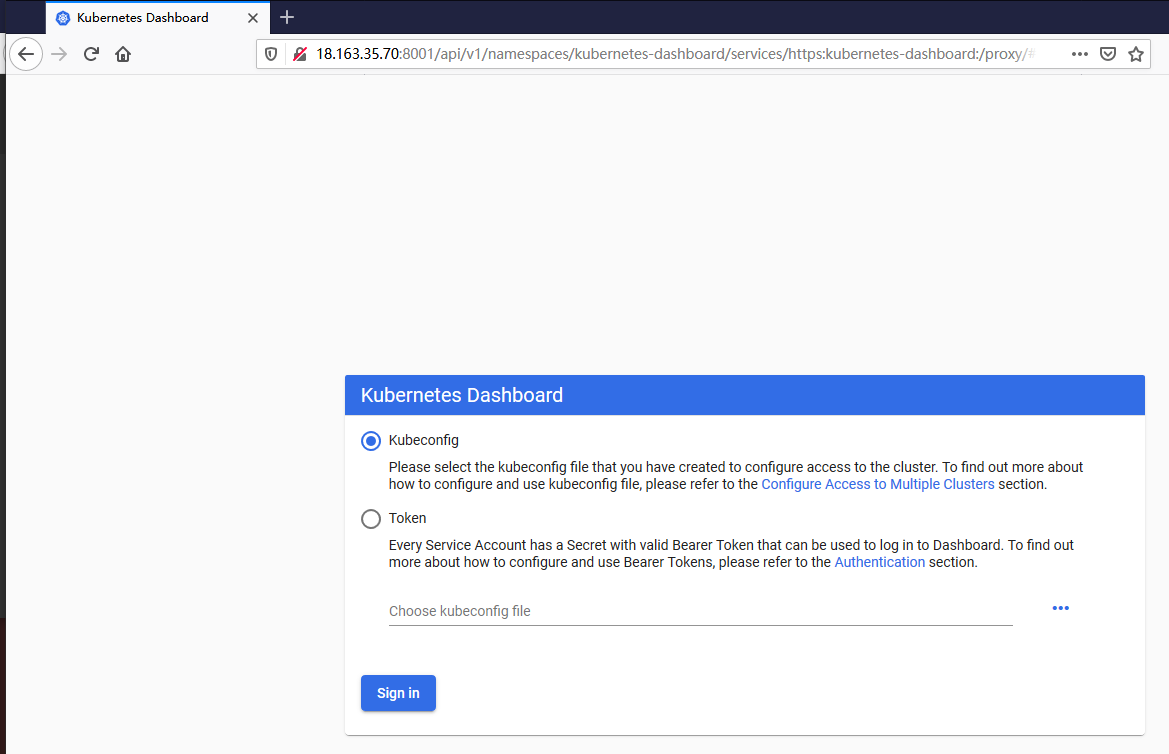

Use the kubectl proxy command-line tool to reach the web console

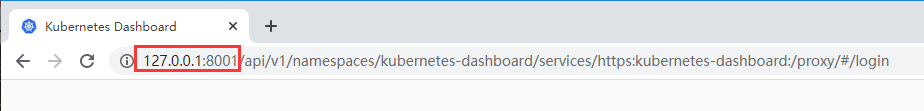

Default access URL (the proxy listens on 127.0.0.1:8001)

http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/
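The URL above follows the API server's service-proxy path pattern, `/api/v1/namespaces/<namespace>/services/<scheme>:<service>:<port-name>/proxy/`. As a minimal sketch, the helper below assembles it from its parts so that a different proxy port or address can be substituted. The function name `dashboard_url` and its argument order are assumptions made for illustration; it is not part of kubectl.

```shell
#!/usr/bin/env bash
# dashboard_url is a hypothetical helper (not a kubectl feature).
# Arguments, all optional: host, port, namespace, service name.
dashboard_url() {
  local host="${1:-localhost}"
  local port="${2:-8001}"
  local namespace="${3:-kubernetes-dashboard}"
  local service="${4:-kubernetes-dashboard}"
  # The port name after the second ':' is left empty, as in the URL above.
  printf 'http://%s:%s/api/v1/namespaces/%s/services/https:%s:/proxy/\n' \
    "$host" "$port" "$namespace" "$service"
}

# With no arguments this prints the default URL shown above.
dashboard_url
```

For example, `dashboard_url 127.0.0.1 8011` would give the URL to use when the proxy was started with `--port=8011`.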

Listen on 0.0.0.0 to allow external access

nohup kubectl proxy --address='0.0.0.0' --port=8001 --accept-hosts='^*$' &

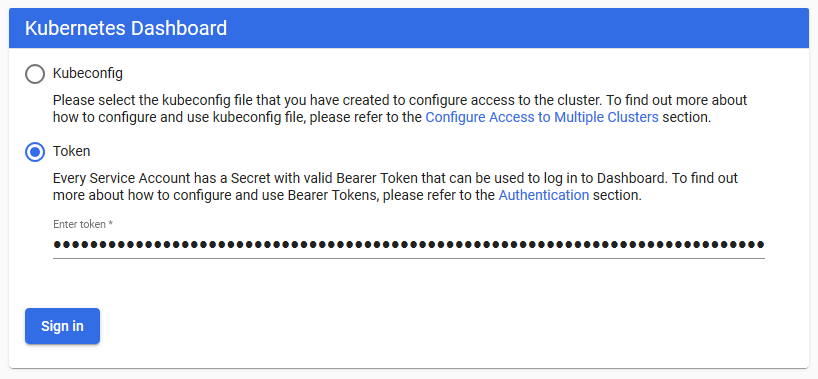

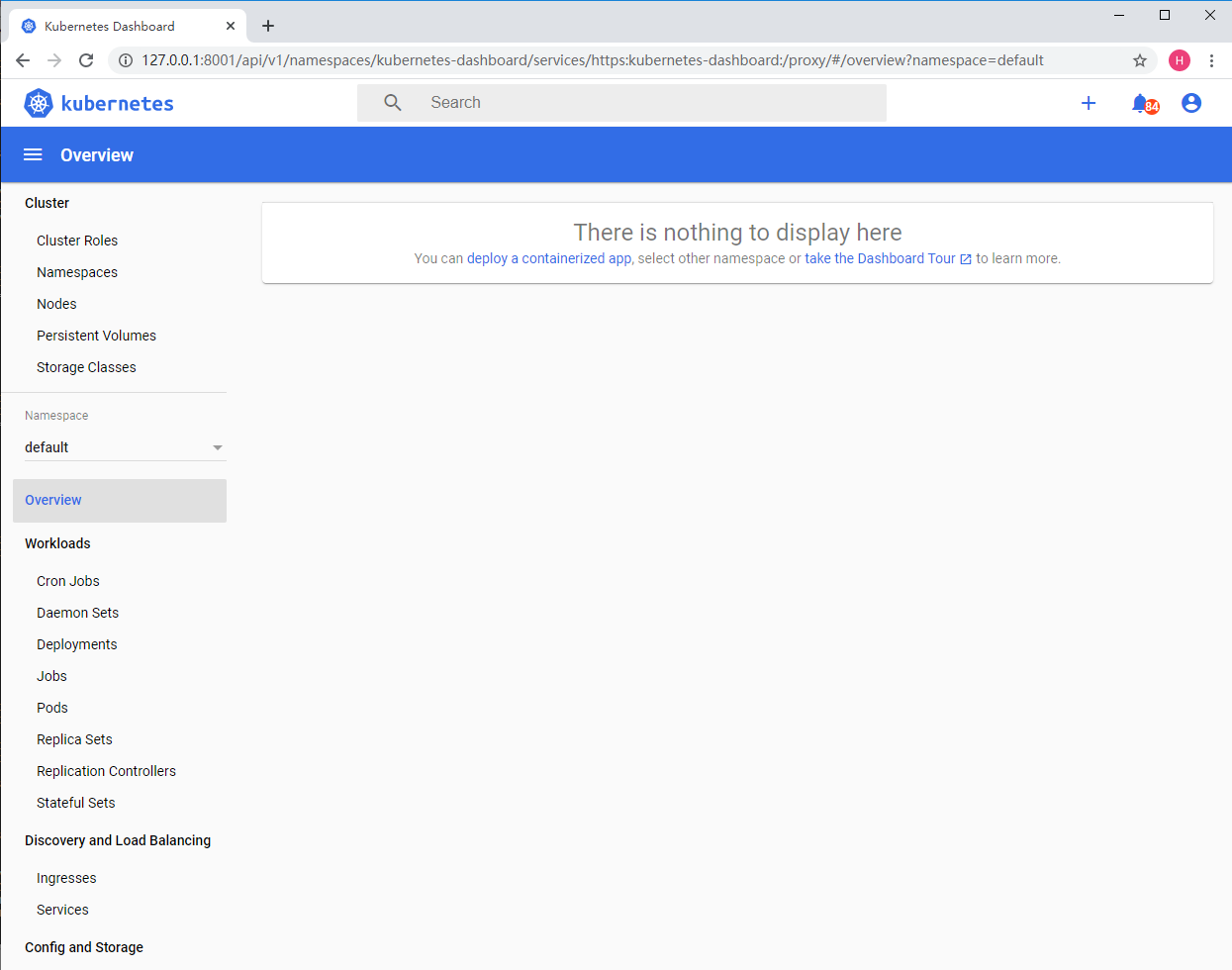

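Open the page

Create an authentication token

The file holds two objects, a ServiceAccount and a ClusterRoleBinding, which must be separated by a `---` line. The output captured below reports only the ClusterRoleBinding, which suggests the separator was missing in the original run and the ServiceAccount was not created.

    [centos@k8s-01 ~]$ vi dashboard-adminuser.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: admin-user
      namespace: kubernetes-dashboard
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: admin-user
      namespace: kubernetes-dashboard

    [centos@k8s-01 ~]$ kubectl apply -f dashboard-adminuser.yaml
    clusterrolebinding.rbac.authorization.k8s.io/admin-user created
    [centos@k8s-01 ~]$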

Look up the generated token

In this captured run the `grep admin-user` filter matched nothing (no secret name in the output contains `admin-user`), so `describe secret` received no name and printed every secret in the namespace.

    [centos@k8s-01 ~]$ kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')
    Name:         default-token-qmwrz
    Namespace:    kubernetes-dashboard
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: default
                  kubernetes.io/service-account.uid: 80e30596-8d5a-423e-b980-6444f11f42ae

    Type:  kubernetes.io/service-account-token

    Data
    ====
    ca.crt:     1025 bytes
    namespace:  20 bytes
    token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IjkwcDA3TnY5TG5NQzQ2eTJ4bXNOM0ctNlpnc1Ezcjl0aXdrcVp0R01LdEEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkZWZhdWx0LXRva2VuLXFtd3J6Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImRlZmF1bHQiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI4MGUzMDU5Ni04ZDVhLTQyM2UtYjk4MC02NDQ0ZjExZjQyYWUiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6ZGVmYXVsdCJ9.gS9XEJpbm1LEU4lnWnLsnheQSw2-AWYLzzURAmiylAC3lp0eFhXqXApKhWY4jNQPyslMVsXzsUwXKcIoTAEx44MHd29kW7v3RmTul2o3imA3BlVuu5O0vZHaovXGrwar3UDfx9qZfqB4O2arjHTxvNJ5JXsY8ZsPIpCo4ZAF6cZnsANcTf_d2oajZKt8GruFtMMH6to4z-7yAS7r06gUX4WxQUjir3lPFB--_TBdqWamvK97EmhpGndWVUYZsdkd9649SFQM9k31ht2-3ZpcZVgYU0lX_WswIOiEJjhrQnrxPainvdIGQZyrpyG-zbqvTWSbP32JPUWtgLxM-92OaA


    Name:         kubernetes-dashboard-certs
    Namespace:    kubernetes-dashboard
    Labels:       k8s-app=kubernetes-dashboard
    Annotations:

    Type:  Opaque

    Data
    ====


    Name:         kubernetes-dashboard-csrf
    Namespace:    kubernetes-dashboard
    Labels:       k8s-app=kubernetes-dashboard
    Annotations:

    Type:  Opaque

    Data
    ====
    csrf:  256 bytes


    Name:         kubernetes-dashboard-key-holder
    Namespace:    kubernetes-dashboard
    Labels:       <none>
    Annotations:  <none>

    Type:  Opaque

    Data
    ====
    pub:   459 bytes
    priv:  1679 bytes


    Name:         kubernetes-dashboard-token-j49z9
    Namespace:    kubernetes-dashboard
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: kubernetes-dashboard
                  kubernetes.io/service-account.uid: 5a61cd25-243e-405a-8dc5-70e0c005a6a1

    Type:  kubernetes.io/service-account-token

    Data
    ====
    token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IjkwcDA3TnY5TG5NQzQ2eTJ4bXNOM0ctNlpnc1Ezcjl0aXdrcVp0R01LdEEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1qNDl6OSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjVhNjFjZDI1LTI0M2UtNDA1YS04ZGM1LTcwZTBjMDA1YTZhMSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.BUg5yeCa9e0R1zC1DJWMSk8ZhskqeMm-ygOnn-sP9evcZEam5yQlthpqxOG5aoFMhaippnOpGcvNnCt0GwyNMRwKbBLG-6DgDPVpgoF5LfY3V1sun6DcFuBTBLdXdBM5iuVlv1c0Mhs8PvyAJenzCshrd4JAUgVzsUK8umWZf_cUlLqCCvimGlYOzpK-cMUepVanegxpiYOZrmEZZYzztpRIYTX9wWE1jzSUDndebbuJIcKILsMa25lSvFjBJDgBvwfVyQ1gRt9AOZu5oWhqgtRc3HJbJv5bAv5p_laoVuJLdiW2k2ZQZp07ZfeBAxz5Lmg-56icjOEaYr_AcdMu5g
    ca.crt:     1025 bytes
    namespace:  20 bytes
    [centos@k8s-01 ~]$
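Because `describe secret` with an empty name silently lists everything, it can help to fail loudly when the expected secret is absent. The sketch below assumes token secrets are named `<serviceaccount>-token-<suffix>`, as the `default-token-qmwrz` and `kubernetes-dashboard-token-j49z9` entries in the captured output suggest; `pick_sa_secret` is a hypothetical helper name, and it reads plain secret names on standard input.

```shell
#!/usr/bin/env bash
# pick_sa_secret is a hypothetical helper: it reads secret names (one per line)
# on stdin and prints the first one belonging to the given ServiceAccount.
# Assumption: token secrets are named "<account>-token-<suffix>".
pick_sa_secret() {
  local account="$1" name
  name="$(grep -m1 "^${account}-token-" || true)"
  if [ -z "$name" ]; then
    echo "no token secret found for ServiceAccount '${account}'" >&2
    return 1
  fi
  printf '%s\n' "$name"
}

# Demo with the secret names from the captured output: admin-user is absent,
# so the helper reports an error instead of returning an empty string.
printf '%s\n' default-token-qmwrz kubernetes-dashboard-certs kubernetes-dashboard-token-j49z9 \
  | pick_sa_secret admin-user || echo "admin-user has no token secret yet"

# Possible use against a live cluster (not run here):
#   secret="$(kubectl -n kubernetes-dashboard get secret -o name \
#               | sed 's|^secret/||' | pick_sa_secret admin-user)" \
#     && kubectl -n kubernetes-dashboard describe secret "$secret"
```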

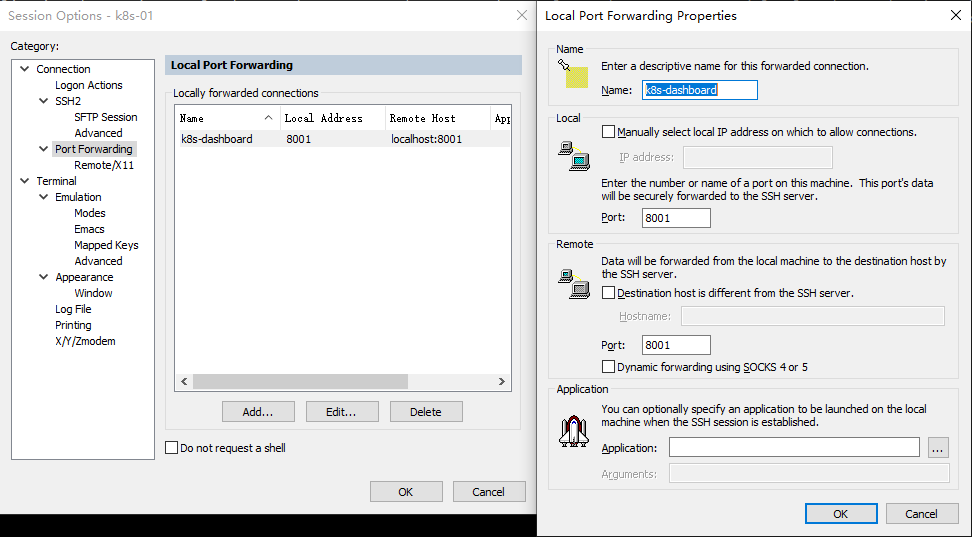

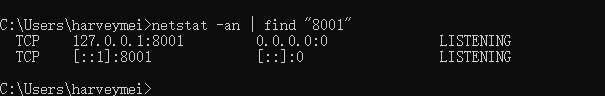

Configure SecureCRT port forwarding

Configuration files of the main control-plane components

    [root@k8s-01 ~]# ls /etc/kubernetes/manifests/
    etcd.yaml  kube-apiserver.yaml  kube-controller-manager.yaml  kube-scheduler.yaml
    [root@k8s-01 ~]#

etcd.yaml

    [root@k8s-01 ~]# cat /etc/kubernetes/manifests/etcd.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: etcd
        tier: control-plane
      name: etcd
      namespace: kube-system
    spec:
      containers:
      - command:
        - etcd
        - --advertise-client-urls=https://172.31.43.3:2379
        - --cert-file=/etc/kubernetes/pki/etcd/server.crt
        - --client-cert-auth=true
        - --data-dir=/var/lib/etcd
        - --initial-advertise-peer-urls=https://172.31.43.3:2380
        - --initial-cluster=k8s-01=https://172.31.43.3:2380
        - --key-file=/etc/kubernetes/pki/etcd/server.key
        - --listen-client-urls=https://127.0.0.1:2379,https://172.31.43.3:2379
        - --listen-metrics-urls=http://127.0.0.1:2381
        - --listen-peer-urls=https://172.31.43.3:2380
        - --name=k8s-01
        - --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt
        - --peer-client-cert-auth=true
        - --peer-key-file=/etc/kubernetes/pki/etcd/peer.key
        - --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
        - --snapshot-count=10000
        - --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt
        image: k8s.gcr.io/etcd:3.4.3-0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 127.0.0.1
            path: /health
            port: 2381
            scheme: HTTP
          initialDelaySeconds: 15
          timeoutSeconds: 15
        name: etcd
        resources: {}
        volumeMounts:
        - mountPath: /var/lib/etcd
          name: etcd-data
        - mountPath: /etc/kubernetes/pki/etcd
          name: etcd-certs
      hostNetwork: true
      priorityClassName: system-cluster-critical
      volumes:
      - hostPath:
          path: /etc/kubernetes/pki/etcd
          type: DirectoryOrCreate
        name: etcd-certs
      - hostPath:
          path: /var/lib/etcd
          type: DirectoryOrCreate
        name: etcd-data
    status: {}
    [root@k8s-01 ~]#

kube-apiserver.yaml

    [root@k8s-01 ~]# cat /etc/kubernetes/manifests/kube-apiserver.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: kube-apiserver
        tier: control-plane
      name: kube-apiserver
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-apiserver
        - --advertise-address=172.31.43.3
        - --allow-privileged=true
        - --authorization-mode=Node,RBAC
        - --client-ca-file=/etc/kubernetes/pki/ca.crt
        - --enable-admission-plugins=NodeRestriction
        - --enable-bootstrap-token-auth=true
        - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
        - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
        - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
        - --etcd-servers=https://127.0.0.1:2379
        - --insecure-port=0
        - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
        - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
        - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
        - --requestheader-allowed-names=front-proxy-client
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-username-headers=X-Remote-User
        - --secure-port=6443
        - --service-account-key-file=/etc/kubernetes/pki/sa.pub
        - --service-cluster-ip-range=10.96.0.0/12
        - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
        - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
        image: k8s.gcr.io/kube-apiserver:v1.17.3
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 172.31.43.3
            path: /healthz
            port: 6443
            scheme: HTTPS
          initialDelaySeconds: 15
          timeoutSeconds: 15
        name: kube-apiserver
        resources:
          requests:
            cpu: 250m
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certs
          readOnly: true
        - mountPath: /etc/pki
          name: etc-pki
          readOnly: true
        - mountPath: /etc/kubernetes/pki
          name: k8s-certs
          readOnly: true
      hostNetwork: true
      priorityClassName: system-cluster-critical
      volumes:
      - hostPath:
          path: /etc/ssl/certs
          type: DirectoryOrCreate
        name: ca-certs
      - hostPath:
          path: /etc/pki
          type: DirectoryOrCreate
        name: etc-pki
      - hostPath:
          path: /etc/kubernetes/pki
          type: DirectoryOrCreate
        name: k8s-certs
    status: {}
    [root@k8s-01 ~]#

kube-controller-manager.yaml

    [root@k8s-01 ~]# cat /etc/kubernetes/manifests/kube-controller-manager.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: kube-controller-manager
        tier: control-plane
      name: kube-controller-manager
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-controller-manager
        - --allocate-node-cidrs=true
        - --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf
        - --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf
        - --bind-address=127.0.0.1
        - --client-ca-file=/etc/kubernetes/pki/ca.crt
        - --cluster-cidr=10.244.0.0/16
        - --cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt
        - --cluster-signing-key-file=/etc/kubernetes/pki/ca.key
        - --controllers=*,bootstrapsigner,tokencleaner
        - --kubeconfig=/etc/kubernetes/controller-manager.conf
        - --leader-elect=true
        - --node-cidr-mask-size=24
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
        - --root-ca-file=/etc/kubernetes/pki/ca.crt
        - --service-account-private-key-file=/etc/kubernetes/pki/sa.key
        - --service-cluster-ip-range=10.96.0.0/12
        - --use-service-account-credentials=true
        image: k8s.gcr.io/kube-controller-manager:v1.17.3
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 127.0.0.1
            path: /healthz
            port: 10257
            scheme: HTTPS
          initialDelaySeconds: 15
          timeoutSeconds: 15
        name: kube-controller-manager
        resources:
          requests:
            cpu: 200m
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certs
          readOnly: true
        - mountPath: /etc/pki
          name: etc-pki
          readOnly: true
        - mountPath: /usr/libexec/kubernetes/kubelet-plugins/volume/exec
          name: flexvolume-dir
        - mountPath: /etc/kubernetes/pki
          name: k8s-certs
          readOnly: true
        - mountPath: /etc/kubernetes/controller-manager.conf
          name: kubeconfig
          readOnly: true
      hostNetwork: true
      priorityClassName: system-cluster-critical
      volumes:
      - hostPath:
          path: /etc/ssl/certs
          type: DirectoryOrCreate
        name: ca-certs
      - hostPath:
          path: /etc/pki
          type: DirectoryOrCreate
        name: etc-pki
      - hostPath:
          path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec
          type: DirectoryOrCreate
        name: flexvolume-dir
      - hostPath:
          path: /etc/kubernetes/pki
          type: DirectoryOrCreate
        name: k8s-certs
      - hostPath:
          path: /etc/kubernetes/controller-manager.conf
          type: FileOrCreate
        name: kubeconfig
    status: {}
    [root@k8s-01 ~]#

kube-scheduler.yaml

    [root@k8s-01 ~]# cat /etc/kubernetes/manifests/kube-scheduler.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: kube-scheduler
        tier: control-plane
      name: kube-scheduler
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-scheduler
        - --authentication-kubeconfig=/etc/kubernetes/scheduler.conf
        - --authorization-kubeconfig=/etc/kubernetes/scheduler.conf
        - --bind-address=127.0.0.1
        - --kubeconfig=/etc/kubernetes/scheduler.conf
        - --leader-elect=true
        image: k8s.gcr.io/kube-scheduler:v1.17.3
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 127.0.0.1
            path: /healthz
            port: 10259
            scheme: HTTPS
          initialDelaySeconds: 15
          timeoutSeconds: 15
        name: kube-scheduler
        resources:
          requests:
            cpu: 100m
        volumeMounts:
        - mountPath: /etc/kubernetes/scheduler.conf
          name: kubeconfig
          readOnly: true
      hostNetwork: true
      priorityClassName: system-cluster-critical
      volumes:
      - hostPath:
          path: /etc/kubernetes/scheduler.conf
          type: FileOrCreate
        name: kubeconfig
    status: {}
    [root@k8s-01 ~]#

    [root@k8s-01 ~]# cat cluster_initialized.txt
    [init] Using Kubernetes version: v1.17.3
    [preflight] Running pre-flight checks
    [preflight] Pulling images required for setting up a Kubernetes cluster
    [preflight] This might take a minute or two, depending on the speed of your internet connection
    [preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Starting the kubelet
    [certs] Using certificateDir folder "/etc/kubernetes/pki"
    [certs] Generating "ca" certificate and key
    [certs] Generating "apiserver" certificate and key
    [certs] apiserver serving cert is signed for DNS names [k8s-01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.31.43.3]
    [certs] Generating "apiserver-kubelet-client" certificate and key
    [certs] Generating "front-proxy-ca" certificate and key
    [certs] Generating "front-proxy-client" certificate and key
    [certs] Generating "etcd/ca" certificate and key
    [certs] Generating "etcd/server" certificate and key
    [certs] etcd/server serving cert is signed for DNS names [k8s-01 localhost] and IPs [172.31.43.3 127.0.0.1 ::1]
    [certs] Generating "etcd/peer" certificate and key
    [certs] etcd/peer serving cert is signed for DNS names [k8s-01 localhost] and IPs [172.31.43.3 127.0.0.1 ::1]
    [certs] Generating "etcd/healthcheck-client" certificate and key
    [certs] Generating "apiserver-etcd-client" certificate and key
    [certs] Generating "sa" key and public key
    [kubeconfig] Using kubeconfig folder "/etc/kubernetes"
    [kubeconfig] Writing "admin.conf" kubeconfig file
    [kubeconfig] Writing "kubelet.conf" kubeconfig file
    [kubeconfig] Writing "controller-manager.conf" kubeconfig file
    [kubeconfig] Writing "scheduler.conf" kubeconfig file
    [control-plane] Using manifest folder "/etc/kubernetes/manifests"
    [control-plane] Creating static Pod manifest for "kube-apiserver"
    [control-plane] Creating static Pod manifest for "kube-controller-manager"
    [control-plane] Creating static Pod manifest for "kube-scheduler"
    [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
    [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
    [apiclient] All control plane components are healthy after 15.004178 seconds
    [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
    [kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster
    [upload-certs] Skipping phase. Please see --upload-certs
    [mark-control-plane] Marking the node k8s-01 as control-plane by adding the label "node-role.kubernetes.io/master=''"
    [mark-control-plane] Marking the node k8s-01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
    [bootstrap-token] Using token: 1jhsop.wiy4qe0tfqye80lp
    [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
    [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
    [bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
    [bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
    [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
    [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
    [addons] Applied essential addon: CoreDNS
    [addons] Applied essential addon: kube-proxy

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 172.31.43.3:6443 --token 1jhsop.wiy4qe0tfqye80lp \
        --discovery-token-ca-cert-hash sha256:63cf674da8de45ad1482fa70fb685734e9931819021f62f5ae7a078bba601bfc

Host list

    Host      Public IP         Private IP
    Ansible   18.163.102.197    172.31.34.153
    k8s-01    18.163.35.70      172.31.43.3
    k8s-02    18.162.148.167    172.31.37.84
    k8s-03    18.163.103.104    172.31.37.22

Amazon EC2 hosts disable root login and password authentication by default; re-enable them as follows

    sudo sed -i 's/^\#PermitRootLogin yes/PermitRootLogin yes/' /etc/ssh/sshd_config
    sudo sed -i 's/^PasswordAuthentication no/PasswordAuthentication yes/' /etc/ssh/sshd_config
    sudo systemctl restart sshd
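Since a mistake in `sshd_config` can lock you out of a host, it may be worth previewing the result before editing in place. The sketch below applies equivalent substitutions without `-i` and prints only the two affected directives; `preview_sshd_changes` is a hypothetical helper name, and the demo runs against a throwaway sample file rather than the real configuration.

```shell
#!/usr/bin/env bash
# preview_sshd_changes is a hypothetical helper: it shows what the two
# substitutions would produce, without modifying the file (no -i).
preview_sshd_changes() {
  local config="${1:-/etc/ssh/sshd_config}"
  sed -e 's/^#PermitRootLogin yes/PermitRootLogin yes/' \
      -e 's/^PasswordAuthentication no/PasswordAuthentication yes/' \
      "$config" | grep -E '^(PermitRootLogin|PasswordAuthentication) '
}

# Demo on a temporary sample file that mimics the EC2 defaults described above.
sample="$(mktemp)"
printf '%s\n' '#PermitRootLogin yes' 'PasswordAuthentication no' 'X11Forwarding yes' > "$sample"
preview_sshd_changes "$sample"
rm -f "$sample"
```

If the preview prints nothing for a directive, the corresponding pattern did not match and the in-place `sed -i` would leave that setting unchanged.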

Check the Ansible version on the local host

    [root@ip-172-31-34-153 ~]# ansible --version
    ansible 2.9.5
      config file = /etc/ansible/ansible.cfg
      configured module search path = [u'/root/.ansible/plugins/modules', u'/usr/share/ansible/plugins/modules']
      ansible python module location = /usr/lib/python2.7/site-packages/ansible
      executable location = /bin/ansible
      python version = 2.7.5 (default, Oct 30 2018, 23:45:53) [GCC 4.8.5 20150623 (Red Hat 4.8.5-36)]
    [root@ip-172-31-34-153 ~]#

Disable strict host key checking on the local host

    [root@ip-172-31-34-153 ~]# vi /etc/ssh/ssh_config
    StrictHostKeyChecking no

Generate a key pair and distribute the public key to the remote hosts

    [root@ip-172-31-34-153 ~]# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
    Generating public/private rsa key pair.
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    SHA256:Gj5nl42xywRn0/s9hjBeACErGJWjQhfoDuEDT2yjYfE root@ip-172-31-34-153.ap-east-1.compute.internal
    The key's randomart image is:
    +---[RSA 2048]----+
    | oooo... .. |
    |++*.oo o. |
    |*B.E.... . |
    |o=.. . o |
    |o o . S = o |
    | . . o + X o |
    | + o B * . |
    | + + o o + |
    | o o o|
    +----[SHA256]-----+
    [root@ip-172-31-34-153 ~]# cat .ssh/id_rsa.pub
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC29DSROHgwWlucHoL/B+S/4Rd1KsVEbYLmM4p0+Ptx4NjGooEhrnNjIhpKmPNI5zvGtganSia2A7Vsp5Y+IVOgThRjzptQQzmbEloIqv6SsJRDyrUQIPV9dv3jv5pvbtAN0D5rh1AATPh0FNBtnkvm6HLowjueKdE6pBiq74NTPc5jfDuvwq2S5s4Ztnw9NsTuIlIiC7STCfuDo7NoxRVl+QumD12tW52CPd4ZjA4vg4v7xr/BF/rRxdFuG6+740s2kO1EZNaUOoi99qMLQiScOK+SLw+/tN66EmZC0uMeYlDiZZ1VsLb2MMd11CJDWSZ9SZbd1dHQbXywUbj0tRQF root@ip-172-31-34-153.ap-east-1.compute.internal
    [root@ip-172-31-34-153 ~]#

Distribute the public key

    [root@ip-172-31-34-153 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@18.163.35.70
    /bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"
    /bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@18.163.35.70's password:

    Number of key(s) added: 1

    Now try logging into the machine, with:   "ssh 'root@18.163.35.70'"
    and check to make sure that only the key(s) you wanted were added.

    [root@ip-172-31-34-153 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@18.162.148.167
    /bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"
    /bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@18.162.148.167's password:

    Number of key(s) added: 1

    Now try logging into the machine, with:   "ssh 'root@18.162.148.167'"
    and check to make sure that only the key(s) you wanted were added.

    [root@ip-172-31-34-153 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@18.163.103.104
    /bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"
    /bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@18.163.103.104's password:

    Number of key(s) added: 1

    Now try logging into the machine, with:   "ssh 'root@18.163.103.104'"
    and check to make sure that only the key(s) you wanted were added.

    [root@ip-172-31-34-153 ~]#

Configure the Ansible inventory

    [root@ip-172-31-34-153 ~]# mkdir kube-cluster
    [root@ip-172-31-34-153 ~]# cd kube-cluster/
    [root@ip-172-31-34-153 kube-cluster]# vi hosts
    [masters]
    master ansible_host=18.163.35.70 ansible_user=root

    [workers]
    worker1 ansible_host=18.162.148.167 ansible_user=root
    worker2 ansible_host=18.163.103.104 ansible_user=root
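The playbooks later address hosts by the aliases in this file (`master`, `worker1`, `worker2`) and by group (`workers`), so a quick check of which alias sits in which group can catch a misplaced line. The sketch below is a plain awk pass over an INI-style inventory; `hosts_in_group` is a hypothetical helper, not an Ansible command, and it handles only the simple layout used here (no `:children` or `:vars` sections).

```shell
#!/usr/bin/env bash
# hosts_in_group is a hypothetical helper: print the host aliases listed
# under one [group] header of a simple INI-style inventory file.
hosts_in_group() {
  local group="$1" inventory="${2:-hosts}"
  awk -v group="[$group]" '
    /^\[/ { in_group = ($0 == group); next }        # a new section header starts
    in_group && NF && $1 !~ /^[#;]/ { print $1 }    # first field is the host alias
  ' "$inventory"
}

# Demo against a temporary copy of the inventory shown above.
inventory="$(mktemp)"
cat > "$inventory" <<'EOF'
[masters]
master ansible_host=18.163.35.70 ansible_user=root

[workers]
worker1 ansible_host=18.162.148.167 ansible_user=root
worker2 ansible_host=18.163.103.104 ansible_user=root
EOF
hosts_in_group workers "$inventory"
rm -f "$inventory"

# With Ansible installed, `ansible-inventory -i hosts --graph` and
# `ansible -i hosts all -m ping` give a more thorough check (not run here).
```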

Prepare the base-environment playbook (k8s-01/k8s-02/k8s-03)

    [root@ip-172-31-34-153 kube-cluster]# vi kube-dependencies.yaml
    - hosts: all
      become: yes
      tasks:
        - name: Install yum utils
          yum:
            name: yum-utils
            state: latest

        - name: Install device-mapper-persistent-data
          yum:
            name: device-mapper-persistent-data
            state: latest

        - name: Install lvm2
          yum:
            name: lvm2
            state: latest

        - name: Add Docker repo
          get_url:
            url: https://download.docker.com/linux/centos/docker-ce.repo
            dest: /etc/yum.repos.d/docker-ce.repo

        - name: install Docker
          yum:
            name: docker-ce
            state: latest
            update_cache: true

        - name: start Docker
          service:
            name: docker
            state: started
            enabled: yes

        - name: disable SELinux
          command: setenforce 0

        - name: disable SELinux on reboot
          selinux:
            state: disabled

        - name: ensure net.bridge.bridge-nf-call-ip6tables is set to 1
          sysctl:
            name: net.bridge.bridge-nf-call-ip6tables
            value: 1
            state: present

        - name: ensure net.bridge.bridge-nf-call-iptables is set to 1
          sysctl:
            name: net.bridge.bridge-nf-call-iptables
            value: 1
            state: present

        - name: add Kubernetes' YUM repository
          yum_repository:
            name: Kubernetes
            description: Kubernetes YUM repository
            baseurl: https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
            gpgkey: https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
            gpgcheck: yes

        - name: install kubelet
          yum:
            name: kubelet-1.17.3
            state: present
            update_cache: true

        - name: install kubeadm
          yum:
            name: kubeadm-1.17.3
            state: present

        - name: start kubelet
          service:
            name: kubelet
            enabled: yes
            state: started

    - hosts: master
      become: yes
      tasks:
        - name: install kubectl
          yum:
            name: kubectl-1.17.3
            state: present
            allow_downgrade: yes

Run it

    [root@ip-172-31-34-153 kube-cluster]# ansible-playbook -i ./hosts kube-dependencies.yaml

    PLAY [all] ******************************

    TASK [Gathering Facts] ******************************
    ok: [worker1]
    ok: [worker2]
    ok: [master]

    TASK [Install yum utils] ******************************
    changed: [worker1]
    changed: [master]
    changed: [worker2]

    TASK [Install device-mapper-persistent-data] ******************************
    changed: [worker1]
    changed: [worker2]
    changed: [master]

    TASK [Install lvm2] ******************************
    changed: [worker2]
    changed: [worker1]
    changed: [master]

    TASK [Add Docker repo] ******************************
    changed: [worker2]
    changed: [worker1]
    changed: [master]

    TASK [install Docker] ******************************
    changed: [worker1]
    changed: [worker2]
    changed: [master]

    TASK [start Docker] ******************************
    changed: [worker1]
    changed: [master]
    changed: [worker2]

    TASK [disable SELinux] ******************************
    changed: [worker2]
    changed: [worker1]
    changed: [master]

    TASK [disable SELinux on reboot] ******************************
    [WARNING]: SELinux state change will take effect next reboot
    changed: [worker2]
    changed: [worker1]
    changed: [master]

    TASK [ensure net.bridge.bridge-nf-call-ip6tables is set to 1] ******************************
    [WARNING]: The value 1 (type int) in a string field was converted to u'1' (type string). If this does not look like what
    you expect, quote the entire value to ensure it does not change.
    changed: [worker2]
    changed: [worker1]
    changed: [master]

    TASK [ensure net.bridge.bridge-nf-call-iptables is set to 1] ******************************
    changed: [worker1]
    changed: [worker2]
    changed: [master]

    TASK [add Kubernetes' YUM repository] ******************************
    changed: [worker2]
    changed: [worker1]
    changed: [master]

    TASK [install kubelet] ******************************
    changed: [worker1]
    changed: [worker2]
    changed: [master]

    TASK [install kubeadm] ******************************
    changed: [worker1]
    changed: [worker2]
    changed: [master]

    TASK [start kubelet] ******************************
    changed: [worker2]
    changed: [worker1]
    changed: [master]

    PLAY [master] ******************************

    TASK [Gathering Facts] ******************************
    ok: [master]

    TASK [install kubectl] ******************************
    ok: [master]

    PLAY RECAP ******************************
    master    : ok=17  changed=14  unreachable=0  failed=0  skipped=0  rescued=0  ignored=0
    worker1   : ok=15  changed=14  unreachable=0  failed=0  skipped=0  rescued=0  ignored=0
    worker2   : ok=15  changed=14  unreachable=0  failed=0  skipped=0  rescued=0  ignored=0

    [root@ip-172-31-34-153 kube-cluster]#

Prepare the master node playbook (k8s-01)

    [root@ip-172-31-34-153 kube-cluster]# vi master.yaml
    - hosts: master
      become: yes
      tasks:
        - name: initialize the cluster
          shell: kubeadm init --pod-network-cidr=10.244.0.0/16 >> cluster_initialized.txt
          args:
            chdir: $HOME
            creates: cluster_initialized.txt

        - name: create .kube directory
          become: yes
          become_user: centos
          file:
            path: $HOME/.kube
            state: directory
            mode: 0755

        - name: copy admin.conf to user's kube config
          copy:
            src: /etc/kubernetes/admin.conf
            dest: /home/centos/.kube/config
            remote_src: yes
            owner: centos

        - name: install Pod network
          become: yes
          become_user: centos
          shell: kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml >> pod_network_setup.txt
          args:
            chdir: $HOME
            creates: pod_network_setup.txt
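The `creates:` argument makes each `shell` task run only when its marker file does not exist yet. One thing to keep in mind is that `>> cluster_initialized.txt` creates the file as soon as the shell starts, so a failed `kubeadm init` would still leave the marker behind and later runs would skip the task. The sketch below shows the same run-once idea in plain shell with the marker written only on success; `run_once` is a hypothetical helper and a suggested variation, not what the playbook does.

```shell
#!/usr/bin/env bash
# run_once is a hypothetical helper mirroring Ansible's "creates:" guard:
# skip the command if the marker file exists, otherwise run it and keep its
# output as the marker, but only when the command succeeds.
run_once() {
  local marker="$1"; shift
  if [ -e "$marker" ]; then
    echo "skipped, $marker exists"
    return 0
  fi
  local tmp
  tmp="$(mktemp)"
  if "$@" > "$tmp"; then
    mv "$tmp" "$marker"      # success: the captured output becomes the marker
  else
    rm -f "$tmp"             # failure: leave no marker, so a retry runs again
    return 1
  fi
}

# Demo with harmless commands in a scratch directory.
workdir="$(mktemp -d)"
run_once "$workdir/demo.txt" echo "first run"      # runs and creates demo.txt
run_once "$workdir/demo.txt" echo "second run"     # skipped
run_once "$workdir/failed.txt" false || echo "command failed, no marker left behind"
rm -rf "$workdir"
```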

Run it

    [root@ip-172-31-34-153 kube-cluster]# ansible-playbook -i ./hosts master.yaml

    PLAY [master] ******************************

    TASK [Gathering Facts] ******************************
    ok: [master]

    TASK [initialize the cluster] ******************************
    ok: [master]

    TASK [create .kube directory] ******************************
    [WARNING]: Module remote_tmp /home/centos/.ansible/tmp did not exist and was created with a mode of 0700, this may cause
    issues when running as another user. To avoid this, create the remote_tmp dir with the correct permissions manually
    changed: [master]

    TASK [copy admin.conf to user's kube config] ******************************
    changed: [master]

    TASK [install Pod network] ******************************
    changed: [master]

    PLAY RECAP ******************************
    master    : ok=5  changed=3  unreachable=0  failed=0  skipped=0  rescued=0  ignored=0

    [root@ip-172-31-34-153 kube-cluster]#

Verify the Kubernetes cluster and master node status as the unprivileged user centos

    [centos@k8s-01 ~]$ kubectl get nodes
    NAME     STATUS   ROLES    AGE    VERSION
    k8s-01   Ready    master   155m   v1.17.3
    [centos@k8s-01 ~]$

准备工作节点Playbook配置文件(k8s-02/k8s-03)

    [root@ip-172-31-34-153 kube-cluster]# vi workers.yaml

    - hosts: master
      become: yes
      gather_facts: false
      tasks:
        - name: get join command
          shell: kubeadm token create --print-join-command
          register: join_command_raw

        - name: set join command
          set_fact:
            join_command: "{{ join_command_raw.stdout_lines[0] }}"

    - hosts: workers
      become: yes
      tasks:
        - name: join cluster
          shell: "{{ hostvars['master'].join_command }} --ignore-preflight-errors all >> node_joined.txt"
          args:
            chdir: $HOME
            creates: node_joined.txt

Run the playbook

    [root@ip-172-31-34-153 kube-cluster]# ansible-playbook -i hosts workers.yaml

    PLAY [master] **************************************************************************************************

    TASK [get join command] ****************************************************************************************
    changed: [master]

    TASK [set join command] ****************************************************************************************
    ok: [master]

    PLAY [workers] *************************************************************************************************

    TASK [Gathering Facts] *****************************************************************************************
    ok: [worker2]
    ok: [worker1]

    TASK [join cluster] ********************************************************************************************
    changed: [worker2]
    changed: [worker1]

    PLAY RECAP *****************************************************************************************************
    master                     : ok=2    changed=1    unreachable=0    failed=0    skipped=0    rescued=0    ignored=0
    worker1                    : ok=2    changed=1    unreachable=0    failed=0    skipped=0    rescued=0    ignored=0
    worker2                    : ok=2    changed=1    unreachable=0    failed=0    skipped=0    rescued=0    ignored=0

    [root@ip-172-31-34-153 kube-cluster]#
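The workers playbook takes the first line printed by `kubeadm token create --print-join-command` and runs it on each worker. A minimal sketch of a sanity check on that line follows, assuming the usual `kubeadm join <endpoint> --token ... --discovery-token-ca-cert-hash sha256:...` shape. `is_join_command` is a hypothetical helper, and the address, token, and hash in the example are placeholders.

```shell
# is_join_command STRING
# Succeed if STRING looks like a kubeadm join command line, i.e. it starts
# with "kubeadm join", and carries a token and a sha256 CA certificate hash.
# (Hypothetical helper; it checks the shape only, not whether the token is valid.)
is_join_command() {
    case "$1" in
        "kubeadm join "*" --token "*"--discovery-token-ca-cert-hash sha256:"*)
            return 0 ;;
        *)
            return 1 ;;
    esac
}

# Example with placeholder values (not executed here):
#   cmd=$(kubeadm token create --print-join-command | head -n 1)
#   is_join_command "$cmd" || echo "unexpected output: $cmd" >&2
```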

Verify the cluster status

    [centos@k8s-01 ~]$ kubectl get nodes
    NAME     STATUS   ROLES    AGE    VERSION
    k8s-01   Ready    master   159m   v1.17.3
    k8s-02   Ready    <none>   41s    v1.17.3
    k8s-03   Ready    <none>   41s    v1.17.3
    [centos@k8s-01 ~]$
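Checking the STATUS column by eye works for three nodes. For scripted checks, the sketch below counts the nodes that are not `Ready`. `count_not_ready` is a hypothetical helper that only parses the default table output of `kubectl get nodes`.

```shell
# count_not_ready
# Read `kubectl get nodes` table output on stdin and print how many nodes
# have a STATUS other than exactly "Ready". The header line is skipped.
# Note: a cordoned node shows "Ready,SchedulingDisabled" and is counted too.
# (Hypothetical helper for illustration only.)
count_not_ready() {
    awk 'NR > 1 && NF >= 2 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Example (not executed here; assumes kubectl is configured for this user):
#   kubectl get nodes | count_not_ready
```

A result of `0` means every listed node reported `Ready`. kubectl also provides `kubectl wait --for=condition=Ready node --all --timeout=120s`, which blocks until the nodes are ready or the timeout expires.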

Deploy a containerized application as a test

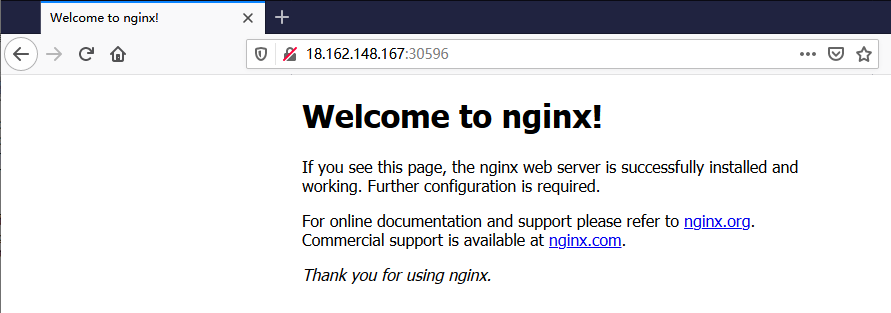

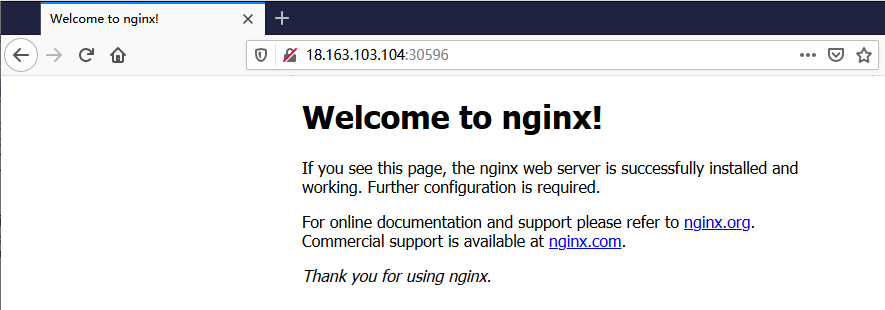

    [centos@k8s-01 ~]$ kubectl create deployment nginx --image=nginx
    deployment.apps/nginx created
    [centos@k8s-01 ~]$
    [centos@k8s-01 ~]$ kubectl expose deploy nginx --port 80 --target-port 80 --type NodePort
    service/nginx exposed
    [centos@k8s-01 ~]$
    [centos@k8s-01 ~]$ kubectl get services
    NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        179m
    nginx        NodePort    10.109.120.31   <none>        80:30596/TCP   15s
    [centos@k8s-01 ~]$
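In the PORT(S) column, `80:30596/TCP` means service port 80 is published on node port 30596 of every node. The sketch below pulls that node port out of the table so it can be used in a URL. `node_port_of` is a hypothetical helper that assumes the default column layout shown above.

```shell
# node_port_of NAME
# Read `kubectl get services` table output on stdin and print the node port
# of service NAME. PORT(S) is the fifth column, e.g. "80:30596/TCP".
# Prints nothing for services without a node port (e.g. "443/TCP").
# (Hypothetical helper for illustration only.)
node_port_of() {
    awk -v svc="$1" '$1 == svc {
        n = split($5, parts, "[:/]")   # "80:30596/TCP" -> 80, 30596, TCP
        if (n >= 3) print parts[2]
    }'
}

# Example (not executed here; <node-ip> is a placeholder for any node address):
#   port=$(kubectl get services | node_port_of nginx)
#   curl -I "http://<node-ip>:${port}/"
```

kubectl can also print the value directly with `kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'`, which avoids parsing the table.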

Access the service from a browser

Delete the deployed containerized application

    [centos@k8s-01 ~]$ kubectl delete service nginx
    service "nginx" deleted
    [centos@k8s-01 ~]$ kubectl get service
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   3h4m
    [centos@k8s-01 ~]$
    [centos@k8s-01 ~]$ kubectl delete deployment nginx
    deployment.apps "nginx" deleted
    [centos@k8s-01 ~]$ kubectl get deployments
    No resources found in default namespace.
    [centos@k8s-01 ~]$

Client command-line configuration file (kubeconfig) details (PKI-based server and client certificate authentication)

apiVersion: v1 clusters: - cluster: certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN5RENDQWJDZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJd01ETXdOekE1TVRNeU1Wb1hEVE13TURNd05UQTVNVE15TVZvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTHl4Ckx2M25ESzZHaDgxN1pjWmpqUVV5em13RlVvdzZhZDV1T1Jabzg2Q0tsNW52RnF3VjRYL2E4OGx2S1grc2xqWDkKSDZGR2Y2bm1uM2JMTnlXWWEreThGcllUMHBQR2x3aG5qWE1WSkJlUW9SS2NiK2hySERPZlNGZ0xsZjQ0TWR1VwpPd3Vmb2VTYnJpL3hoZ0ExMXhqbStmVGJNV3ZkNkZVM0h6ZW9WeEtsdVJNcmJVL0YySHFVN0R1ZEV6dUNQUWFsCk1OOUxiblZJcUtwREp5VzhmODY1V29MUHJlWjhMZkZqMVQvMXl2ZEk1dkJwTFBKc0NZUndLdndSTEhZajAzTHMKRVA5QlpuRkhNRDYwV3RuZXc4bkdaRjJkWTdIRHZRa1V2M2hoemtVMXRLa3BncWhvM2tCUytoUUNwUEpLMzZLMgplOG9aT2NrTDJsYjJzTmpBck84Q0F3RUFBYU1qTUNFd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFJTnFSMXYwUGVPKy9TR05OcXN6S2MzNHk5RGkKVjA5dFZoemRRNEV6aGtoM0ZOS0RRMDZ0VTNPcUw2dzREano2SnlwSW9IaGVsTXVxVmJtY0V5WE9WNzYwZ0hPRQpJaWJ0ZlJhcVdPMVc2RXE0NklQbjEwZkFWNzRwNjhVcWdQdjkra0RSb2grYWhobFJFTGJJdTJNcjAzNHBjcWduClZSK01lWGZ6Q0VvalF3dzd0ZVJGNnpMTCtQa0duNHI4L24rNFNGUjJIK2lDVCtiVzNxZWdCYi9MWWwyTmNMTHMKVDEvcnROZnFTaEIyV2dYbXZKUkl2YXRIWWtUdUZCN1IwZ0pkQUJJWXdkSGlpbVN4TkdDK05WRzIzL3BDdmRKUApFcjFPd2xuWFBMSStiOHpXNDNEanVjd0pPdTY0alZEVmduNUpJUDZqNjRuYnN2eC9VSkIvOUZNK0lVST0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo= server: https://172.31.43.3:6443 name: kubernetes contexts: - context: cluster: kubernetes user: kubernetes-admin name: kubernetes-admin@kubernetes current-context: kubernetes-admin@kubernetes kind: Config preferences: {} users: - name: kubernetes-admin user: client-certificate-data: 
LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM4akNDQWRxZ0F3SUJBZ0lJY0trbWVQMXNaZnd3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TURBek1EY3dPVEV6TWpGYUZ3MHlNVEF6TURjd09URXpNak5hTURReApGekFWQmdOVkJBb1REbk41YzNSbGJUcHRZWE4wWlhKek1Sa3dGd1lEVlFRREV4QnJkV0psY201bGRHVnpMV0ZrCmJXbHVNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ0tDQVFFQXhTbFZmb1IyVTQ2UGdCbzAKR2kyL2NROFEzVldCcVpaNzRwM3cxZ1pDS2dzaUhya3RGWTdrTm52Y3hLNXVPRjZIN1YxS0JrYmRUNXZvVlZ2YQpFRlY3TU5RZUZ6RDEzWkFKK2dOVFN5RFUrY21qT2xnQW1xMktZeHdKbTNBNUdnNFRSbVpUN01mS3FxMVc4V2lxClZlWkY1cnViUkdpb3Z0WWR5L3BHUEs1b0dJaWtpd2w0QU9SMXFGRG80ejR3SmtyMEd5OUxSSzhNZ0RkeEhrSk0KQklrZ2QrbnFpODBGZUpLM2JzWTBjUG9LYk9QbEx4Vm9XQW5iUWEyNjVqYXBQbitNdEpKWkdRelFwYXhranE5RApvek1Pa3pnV0dQMFZKcC9CUXFINGI5NTFXaUFpNTMwbVlvVTVRUDJwaFR6amtUbG1PQlErd3hoZDNKaU9TdjUwCkVmdkdHUUlEQVFBQm95Y3dKVEFPQmdOVkhROEJBZjhFQkFNQ0JhQXdFd1lEVlIwbEJBd3dDZ1lJS3dZQkJRVUgKQXdJd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFIdSt5MjRxa2F0Y21rZkJYRUtrUXg1SUdvNm9Ud0FIcnRqdQo5SUw1MTZ4cVZPMlUvblAwM3hqbHBVdkFSR1dSU3czRjZsanNkUTM5VS9DMGQ2SVNGT0t4K2VkMFE5MVptYW03CnNib0liaXJSeDdVa3ErdThoS3dRK1Zad1Z0akdLUWYwclB2STFkb2drcHJldkF2Myt3OUdld3p5Y0NqemxIbE0KU09pdFdYYkdpdzBoWmk3a25lYmdMQVEvdkVVSlFrNFNVK21oMTJIaVNZY0R2WlJOZkJOUzNONnpPMnZXUGFrcwpFMVIvZ1BBTmlMMllTSXpnQVAwSyszTzJGVzc1SndLa3dXUlNEM1NIZWQxbTZIYlVGcTlBUEdWOXB1eHJTZXJoCkF0T2QzbTdIUnRCS3Q1L29ZaUNva1NBRjZIR1hJcCtEYTFBMFZQRkU0YlVkQjl5MUlHWT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo= client-key-data: 
LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBeFNsVmZvUjJVNDZQZ0JvMEdpMi9jUThRM1ZXQnFaWjc0cDN3MWdaQ0tnc2lIcmt0CkZZN2tObnZjeEs1dU9GNkg3VjFLQmtiZFQ1dm9WVnZhRUZWN01OUWVGekQxM1pBSitnTlRTeURVK2Ntak9sZ0EKbXEyS1l4d0ptM0E1R2c0VFJtWlQ3TWZLcXExVzhXaXFWZVpGNXJ1YlJHaW92dFlkeS9wR1BLNW9HSWlraXdsNApBT1IxcUZEbzR6NHdKa3IwR3k5TFJLOE1nRGR4SGtKTUJJa2dkK25xaTgwRmVKSzNic1kwY1BvS2JPUGxMeFZvCldBbmJRYTI2NWphcFBuK010SkpaR1F6UXBheGtqcTlEb3pNT2t6Z1dHUDBWSnAvQlFxSDRiOTUxV2lBaTUzMG0KWW9VNVFQMnBoVHpqa1RsbU9CUSt3eGhkM0ppT1N2NTBFZnZHR1FJREFRQUJBb0lCQVFDQ2s1bDNyU3JncyszKwpIVnljYWVmOGJNbnlqSXJQVWtiQ0UzQkpqdU9MRE15UUpIdmpaenRsaWlyd1o4Vy90M3Uyaks1VjhlRG90SXp1CjIySlVwd2hya2xCTGM3V2lBNTlYNFpQc2tkWDdpTHQrRElKNTdxMVVibUUrZk5pVWxQWFhEalpPL3hNT2JyYkMKTTF0OGdJR1RDblVPblhJRTBiSHlRZEw2cFZkenh3Ri9EeFNNTy9zOGxLOEh3K0RzT0xxU3FPbHoyOUpuYk9CeAp1aEMzK3VMalc4Rmpsblh6K25JQWRaWFZoRkp0dG43a1dkak1jZXkyTGZCc1NZbGZlWlhZaTRGTE8xbmNPWGpuCkYwLzNhU2g0UmtPeXZvZDZRSEVxTmFnS0ZPOUZqd29hQzRmWkxLQjBrTG16UlZYa1BiR2lDRXB1N1ozSEw0c3UKaFRaYTNUekJBb0dCQU8zMXlBWDVtYTR2U3FlK2V5eEx2L201WEhtb2QweDhXNUFkcU51ZzNuRjdUSE4zMXppbQpmYVBwTjd4R2lwcXNwMVlGQzBheC9FZDNJYW1RcWVSRlRtTHgrRmttb3NNSThBbUV2U0EvL0JTVWVhYTUzeWtwCkt1NXEzNFBWWW5OSXZpcWpTM1ZITERGckw5MlUzNnVBTk9uMTJwZUw3ek1kOXVOT0srNlV3L20xQW9HQkFOUWIKd0g0RWRUbVAwS2V5V0hmYlBheFhxSVJqV2xLeFhHTU5JcnRVZWNLQ0NadWFTNnE5TFYxWk5KZkMyamN3TFRKMApDMVB2RkNjWjAwRUFScGlkS2lYL0ZaQzloRHZ6TkpsUnRseGs0aGVZVUVoa0lQL1RtcVUvTWZhSEhBREhlbDNCCkNPL1BuUnU5Y3g0NmwxZjBOcm5XRVJoa2J5TTJ4Mzc1ek5xb0tJbFZBb0dBUzhxKy9QZzFOTCtuWGFwVC9SWGIKZmFUR2laRlkvaW1WMkY4NkMwby96NUZnRmw4VFU5M2pvck9EcHhvb3gzODZoVEZ5R0FCVXhFWnptRmlWWkRtVwo3L2oyQ3g4OU5EWENqcVdTdjVUaHE0Um5BdTJzNEtWV0lUNDFGdjUrTHczNlZBWlM0SFhjNDVpcVZEODR4cDA5ClBVK3JZaDJXQUlnSXZQbUhFS1NkandrQ2dZQm53dHU3eWZwK21qZjhrV1p0MjdhajVJM3ZsWnJOOFMyODF1UXkKdC9TSWpveWNyakp0NS9XVlFOcFZrMkNrdHRDbGFkZFF6QmdUdUxKN2plTDdMWWM4NXpocGdneDZOMU4zM1YxVQpmWldNN1ZuNHorTEV3NE5YYXo3SjF2Wi8reFdGWDdVN2UxamtCUjJYb0JvQlVOcWt0bS9PZXZOVFNxejFGTVorCkFOMHpzUUtCZ1FDaDROSlEvVjhhc3prOURnZ2F5bnZ1Z2JWWVg1
R0lFNGRSRng3Z3dXek5BckI0V1pUODVHeDgKSzByN3BLdTJsYmh2OFE1UU9GdFFhS0JwcCtjb1g2a3cvbTJZdWdYeVdiREpScEY4ODJXbkQzYWhvbW10WTlXZgpOWmJkeGRXNk8xZ1dURTg1ODV3YW5uOWFZR3g5Q21xNDJ4Sk9SaURPakFZWWEyR3phTHI2SHc9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=
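The `certificate-authority-data`, `client-certificate-data`, and `client-key-data` values in the kubeconfig are base64-encoded PEM blocks. A minimal sketch for decoding one of them is shown below. `kubeconfig_b64_field` is a hypothetical helper that assumes each key and its value sit on a single line, as they do in the file kubeadm writes. The decoded `client-key-data` is a private key and should be handled as a secret.

```shell
# kubeconfig_b64_field KEY
# Read a kubeconfig on stdin, take the first "KEY: <base64>" line, and print
# the decoded value. Uses GNU coreutils `base64 -d`.
# (Hypothetical helper; a simple line-based parse, not a YAML parser.)
kubeconfig_b64_field() {
    awk -v key="$1:" '$1 == key { print $2; exit }' | base64 -d
}

# Example (not executed here): show subject and validity of the cluster CA.
#   kubeconfig_b64_field certificate-authority-data < ~/.kube/config \
#       | openssl x509 -noout -subject -dates
```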

Basic commands and options of the kubectl command-line tool

    [centos@k8s-01 ~]$ kubectl
    kubectl controls the Kubernetes cluster manager.

     Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

    Basic Commands (Beginner):
      create         Create a resource from a file or from stdin.
      expose         Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service
      run            Run a particular image on the cluster
      set            Set specific features on objects

    Basic Commands (Intermediate):
      explain        Documentation of resources
      get            Display one or many resources
      edit           Edit a resource on the server
      delete         Delete resources by filenames, stdin, resources and names, or by resources and label selector

    Deploy Commands:
      rollout        Manage the rollout of a resource
      scale          Set a new size for a Deployment, ReplicaSet or Replication Controller
      autoscale      Auto-scale a Deployment, ReplicaSet, or ReplicationController

    Cluster Management Commands:
      certificate    Modify certificate resources.
      cluster-info   Display cluster info
      top            Display Resource (CPU/Memory/Storage) usage.
      cordon         Mark node as unschedulable
      uncordon       Mark node as schedulable
      drain          Drain node in preparation for maintenance
      taint          Update the taints on one or more nodes

    Troubleshooting and Debugging Commands:
      describe       Show details of a specific resource or group of resources
      logs           Print the logs for a container in a pod
      attach         Attach to a running container
      exec           Execute a command in a container
      port-forward   Forward one or more local ports to a pod
      proxy          Run a proxy to the Kubernetes API server
      cp             Copy files and directories to and from containers.
      auth           Inspect authorization

    Advanced Commands:
      diff           Diff live version against would-be applied version
      apply          Apply a configuration to a resource by filename or stdin
      patch          Update field(s) of a resource using strategic merge patch
      replace        Replace a resource by filename or stdin
      wait           Experimental: Wait for a specific condition on one or many resources.
      convert        Convert config files between different API versions
      kustomize      Build a kustomization target from a directory or a remote url.

    Settings Commands:
      label          Update the labels on a resource
      annotate       Update the annotations on a resource
      completion     Output shell completion code for the specified shell (bash or zsh)

    Other Commands:
      api-resources  Print the supported API resources on the server
      api-versions   Print the supported API versions on the server, in the form of "group/version"
      config         Modify kubeconfig files
      plugin         Provides utilities for interacting with plugins.
      version        Print the client and server version information

    Usage:
      kubectl [flags] [options]

    Use "kubectl <command> --help" for more information about a given command.
    Use "kubectl options" for a list of global command-line options (applies to all commands).
    [centos@k8s-01 ~]$

References

https://www.digitalocean.com/community/tutorials/how-to-create-a-kubernetes-cluster-using-kubeadm-on-centos-7

Host list

    DC    18.163.111.34
    NPS   18.163.35.186
    RRAS  18.162.114.236
    PC    18.163.117.102

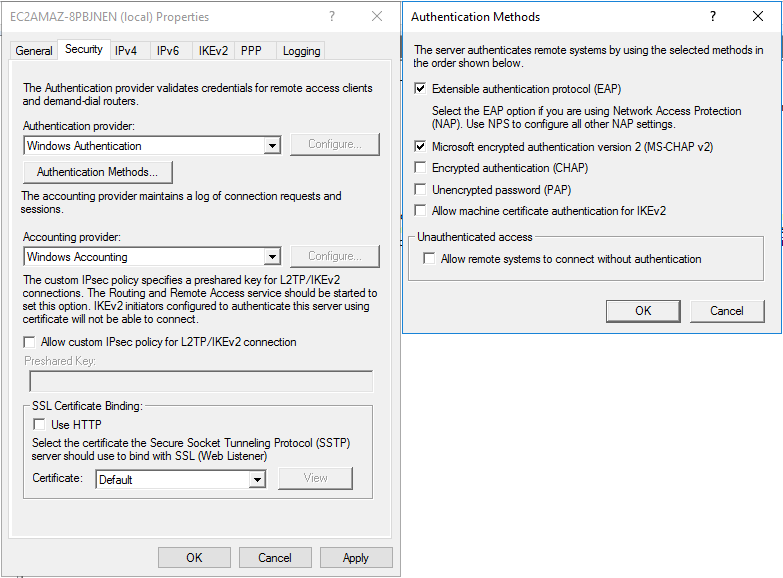

The Security tab of the RRAS (VPN) service properties in a standalone environment

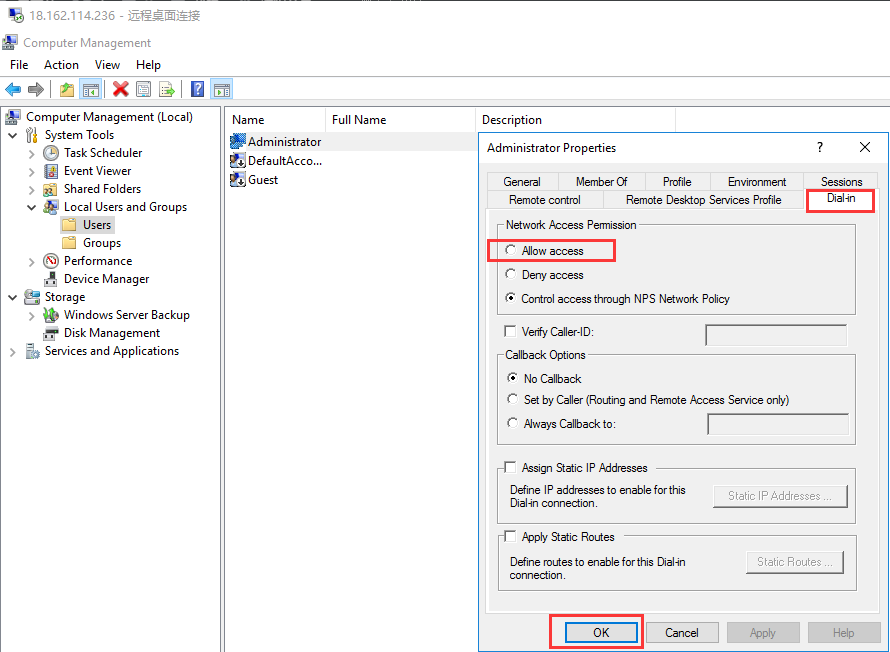

Set the dial-in permission of the local user Administrator to Allow

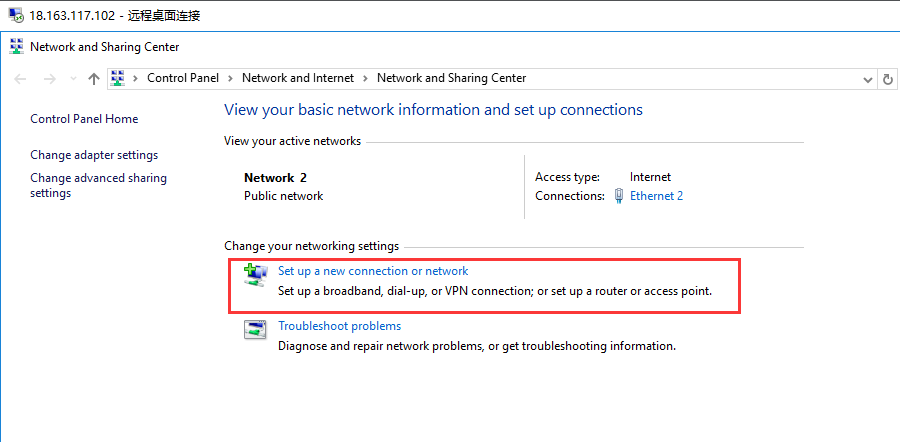

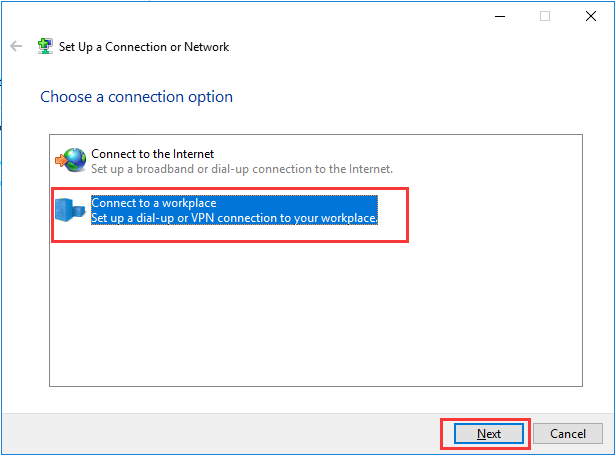

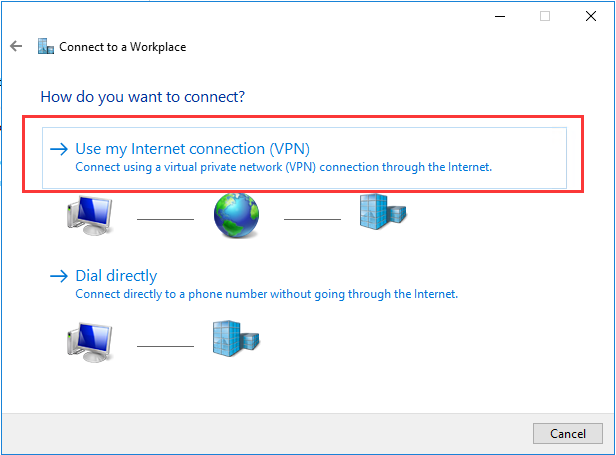

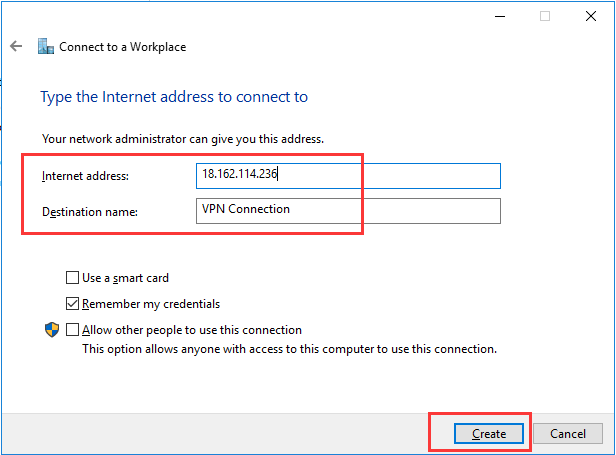

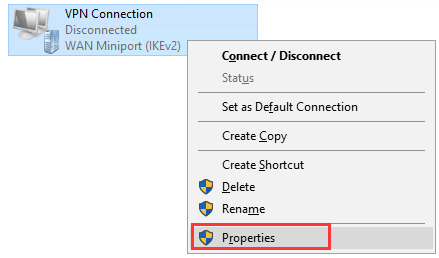

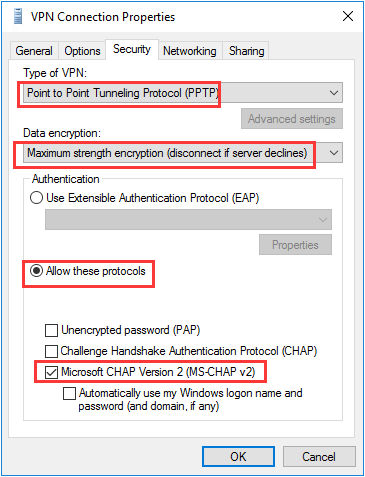

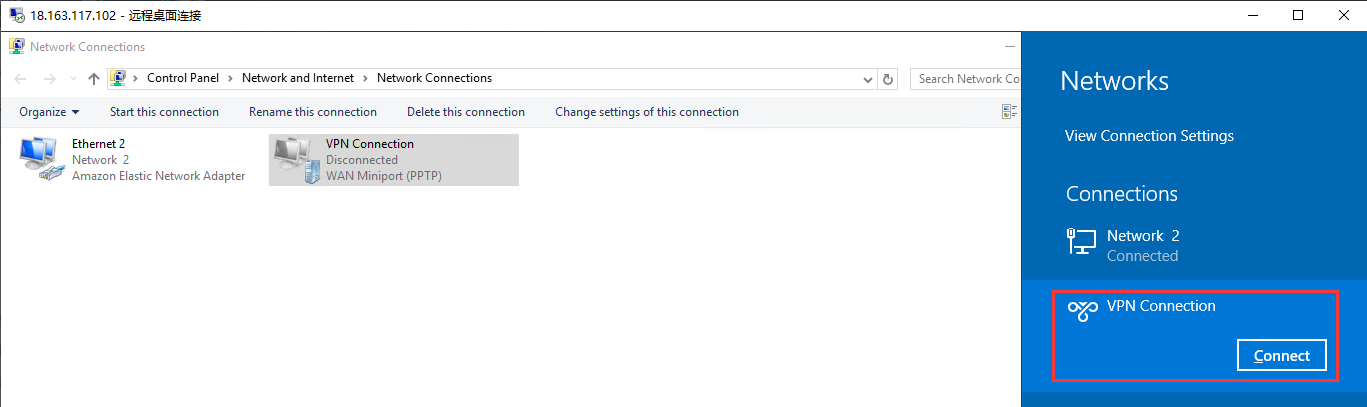

Create a new dial-up VPN connection on the client PC

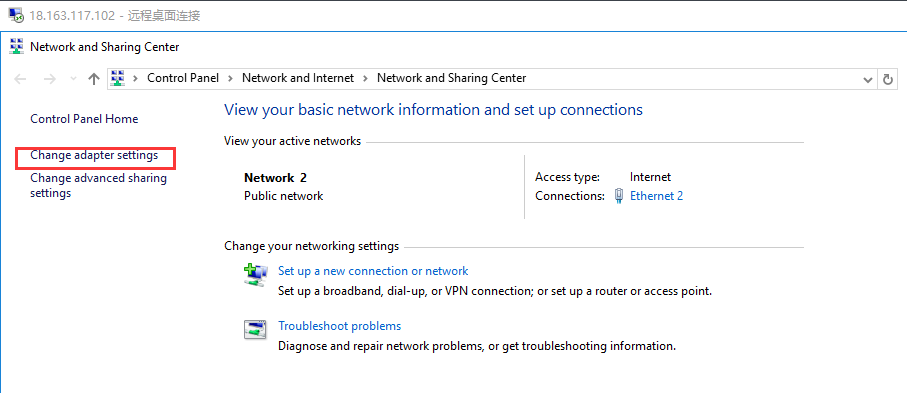

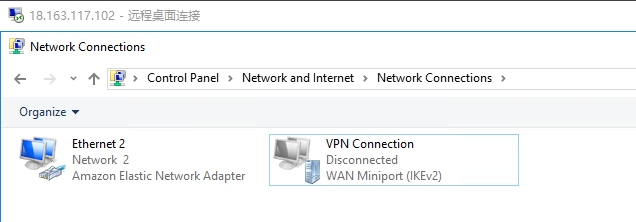

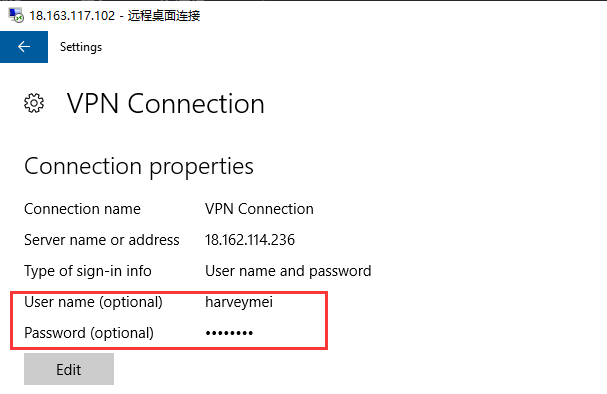

View the properties of the VPN connection

Change the settings on the Security tab

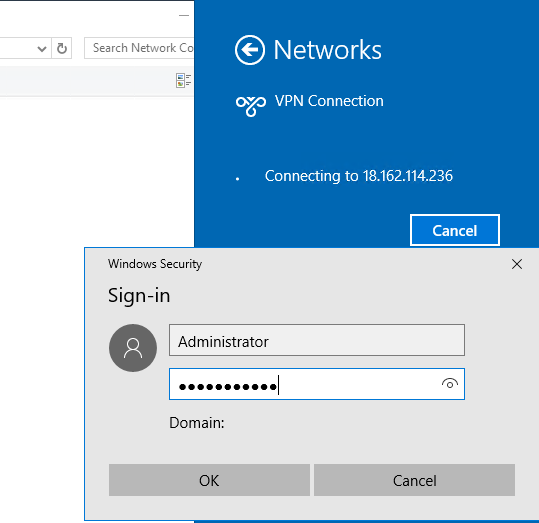

Dial the connection as the RRAS host's local user Administrator

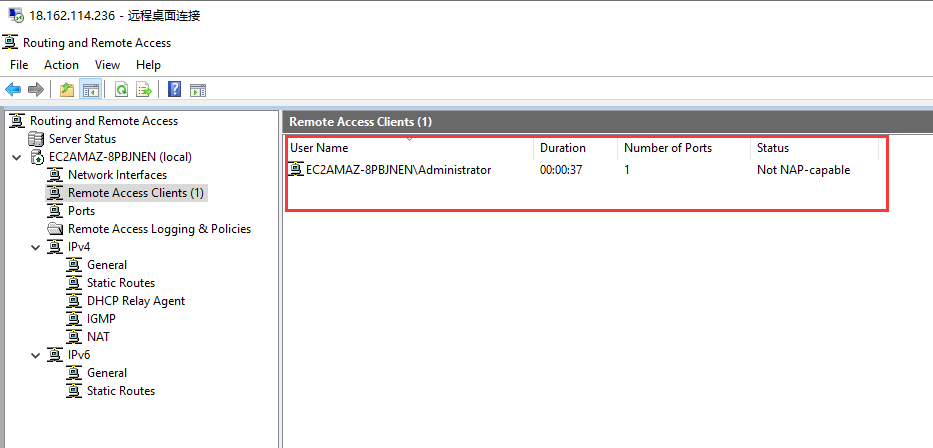

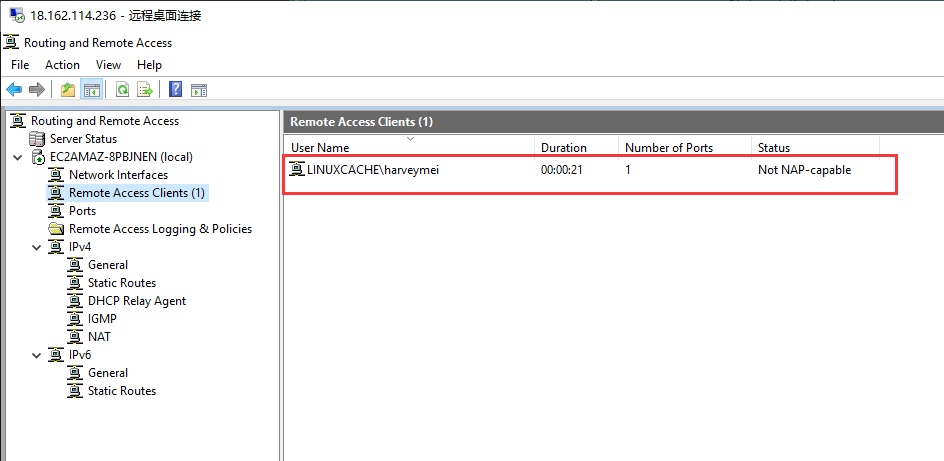

View the established VPN session information (local user)

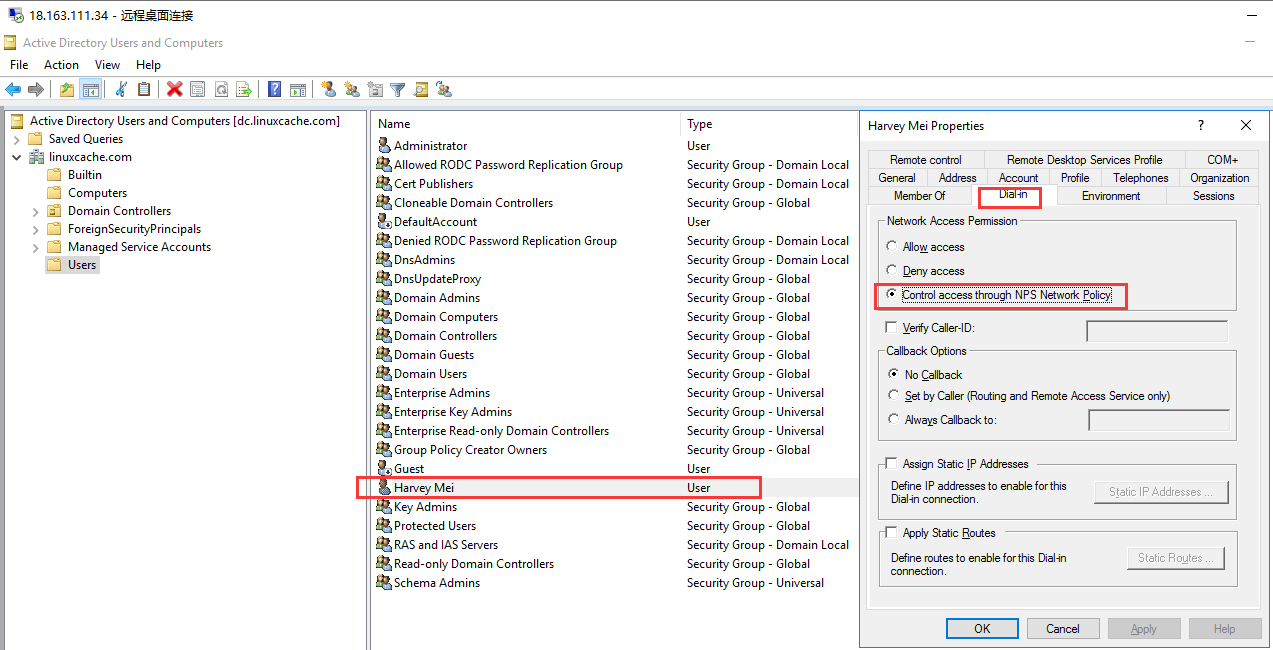

Create a new account in Active Directory and set its dial-in network access permission to be controlled through NPS network policy

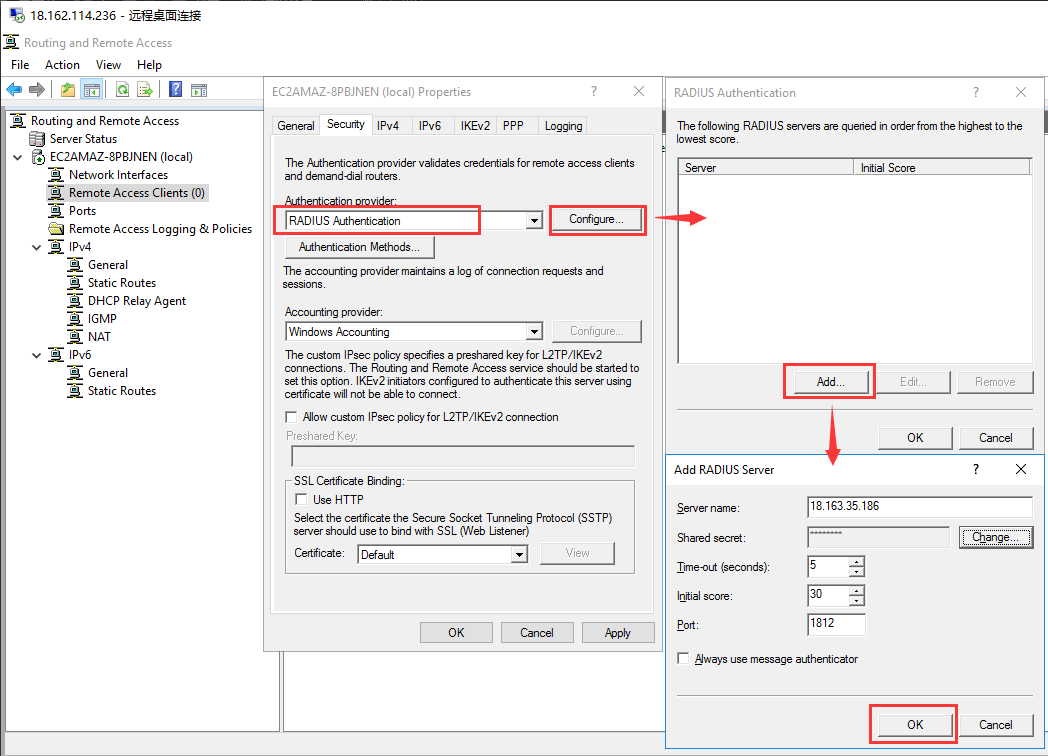

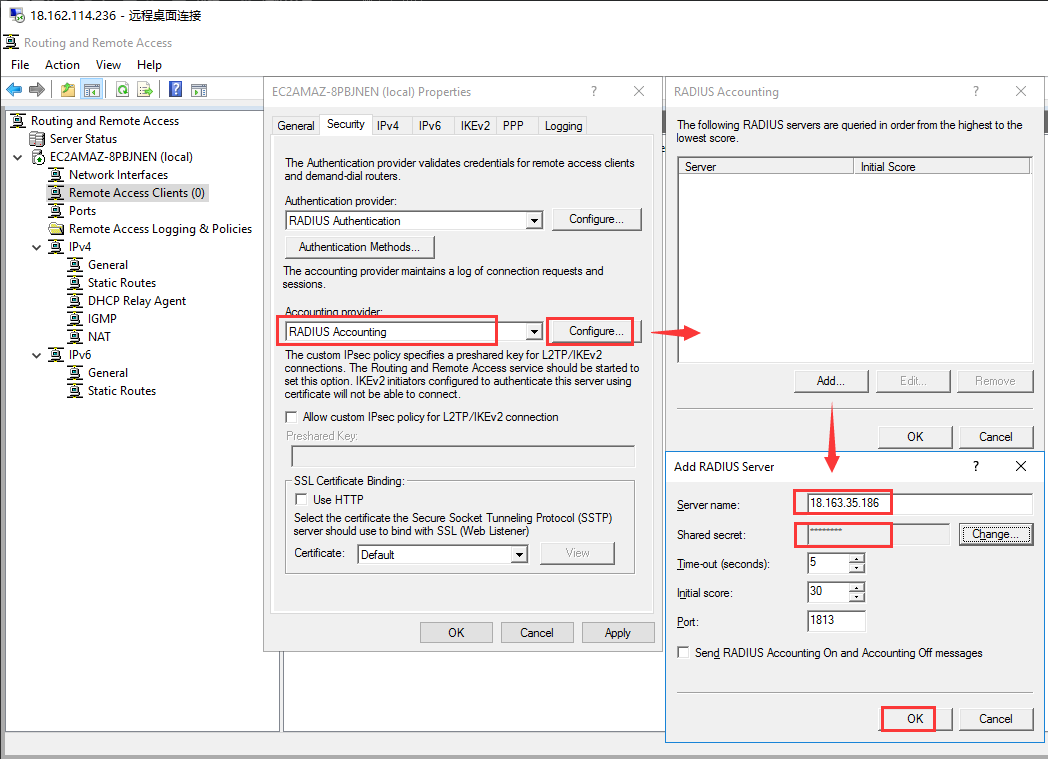

Change the Security tab settings on the RRAS host

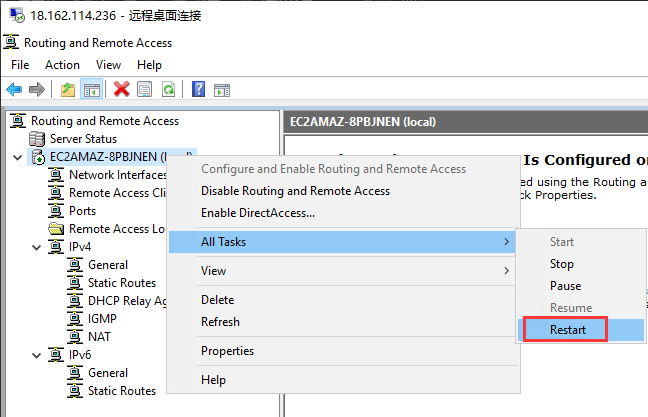

Restart the RRAS service

Change the VPN connection account on the client PC to the newly created Active Directory user

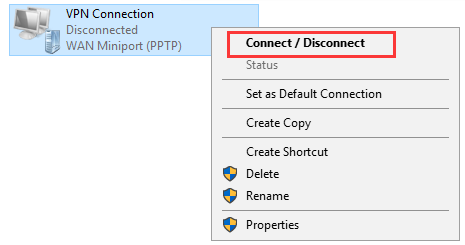

Dial the connection

View the established VPN session information (domain user)

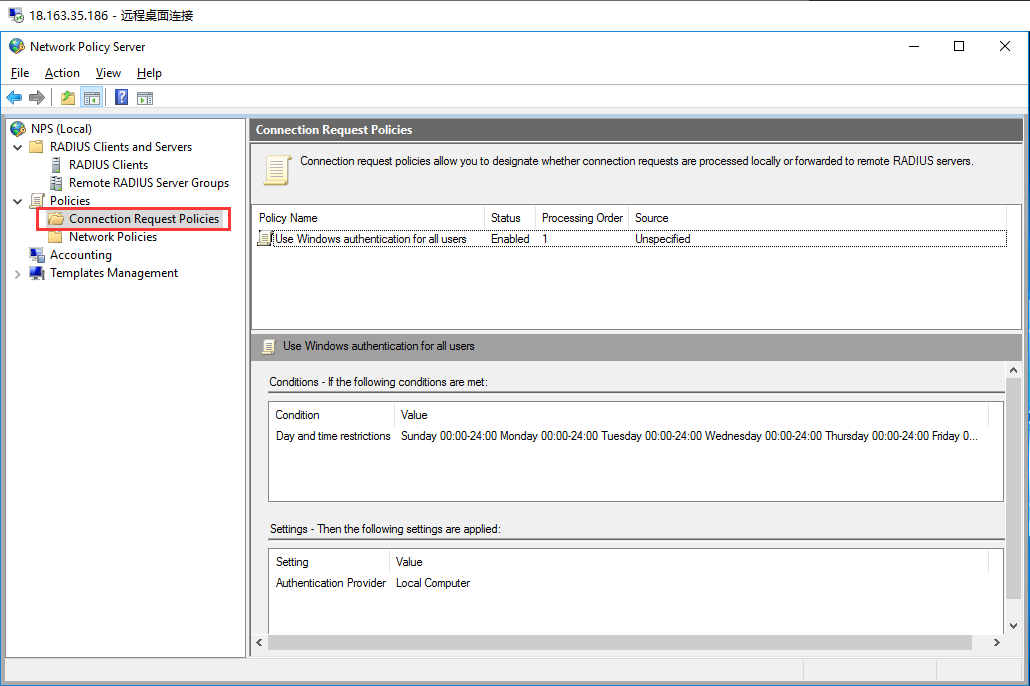

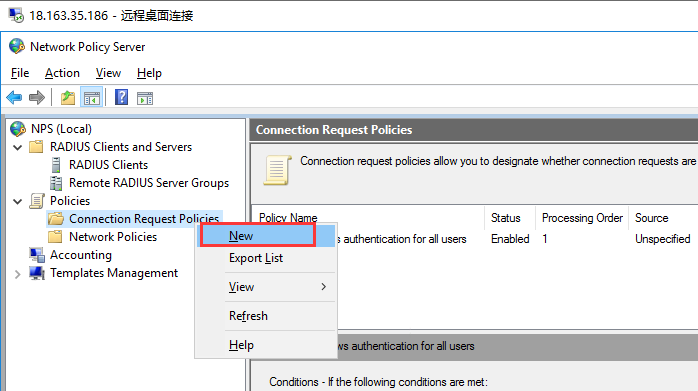

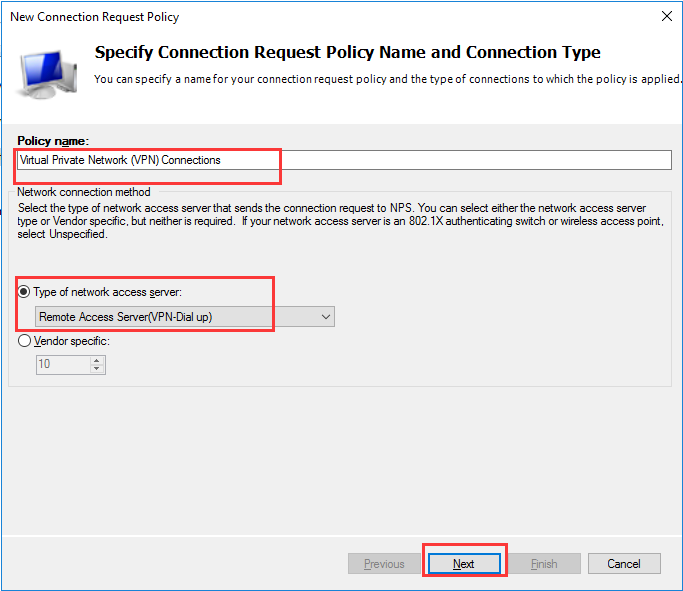

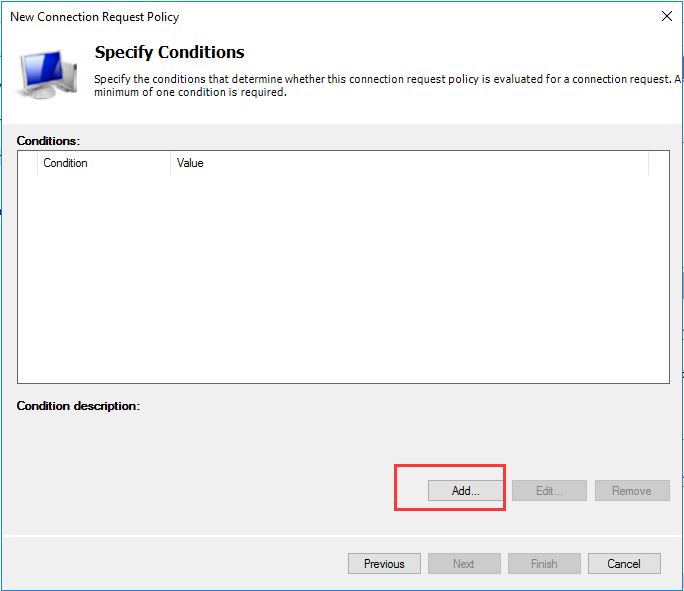

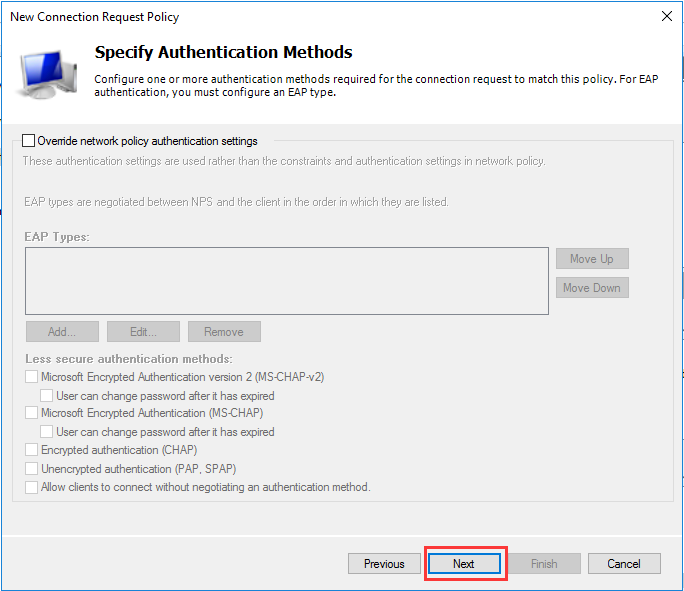

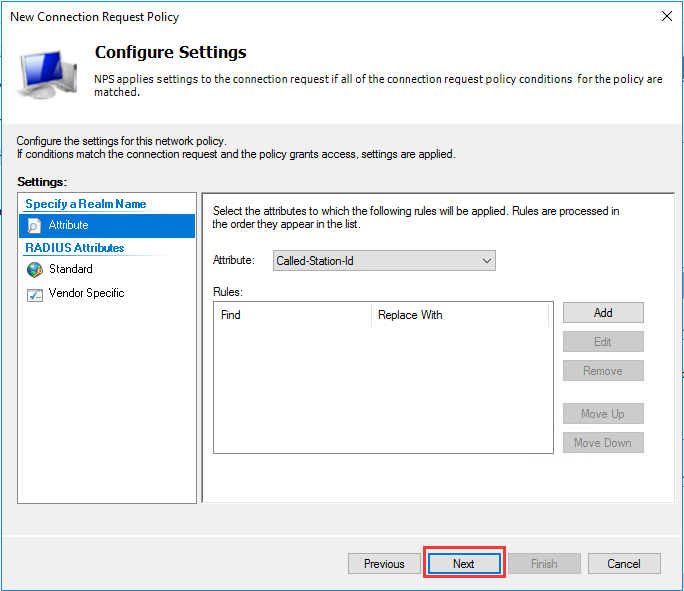

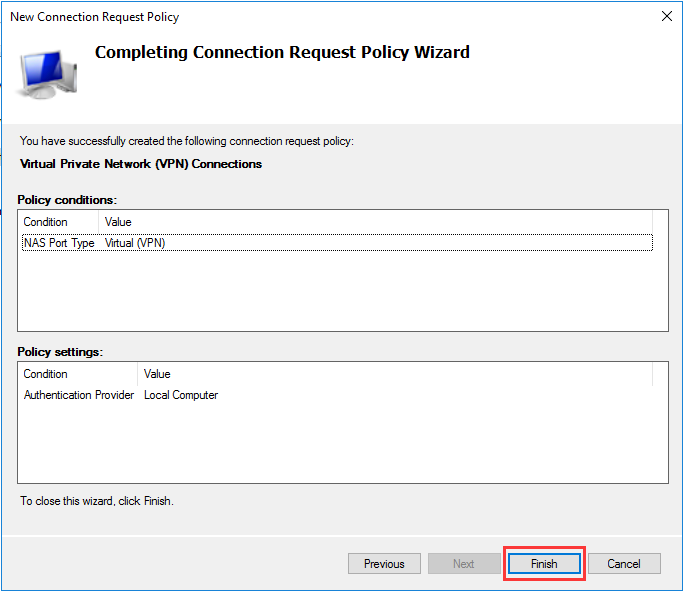

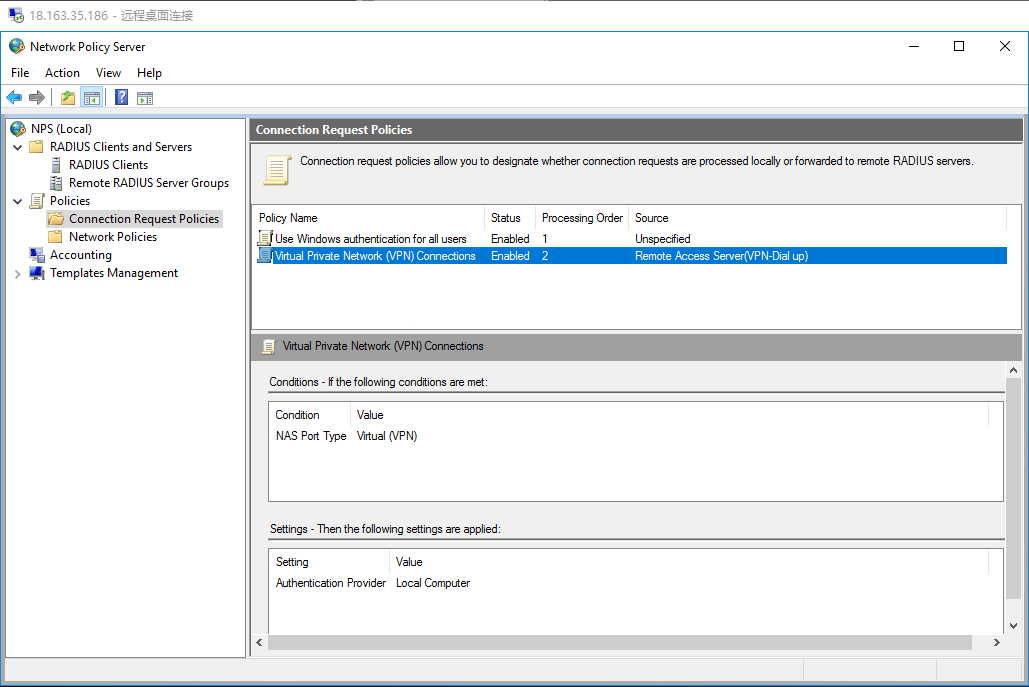

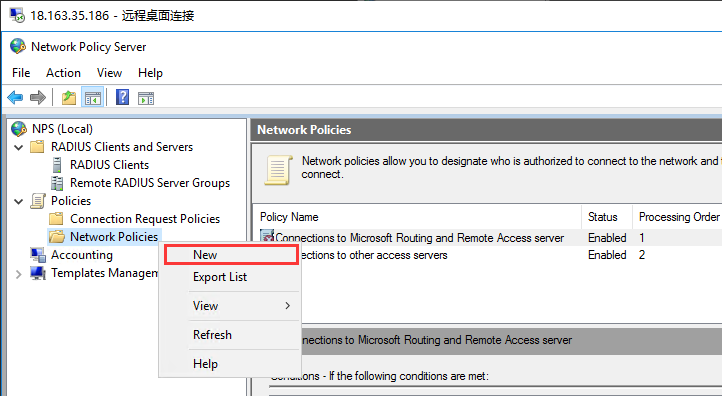

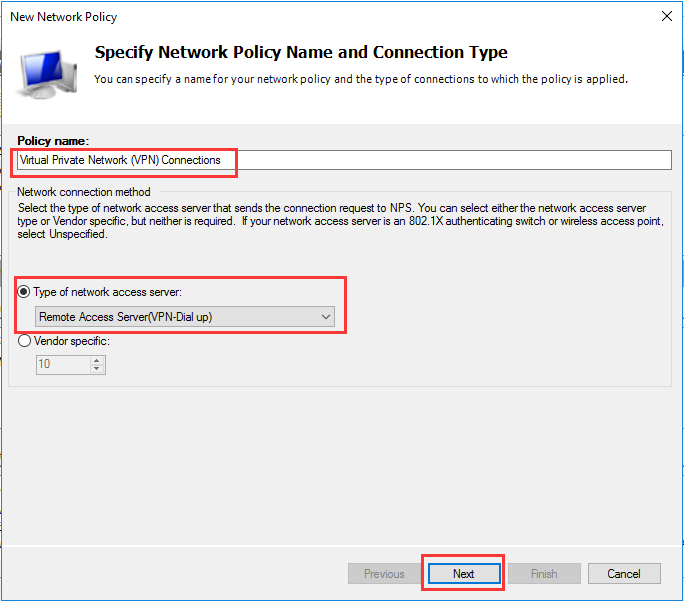

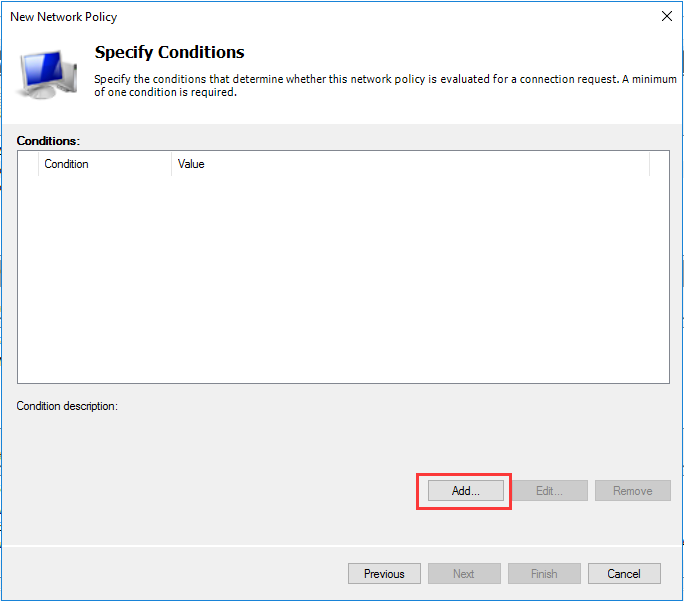

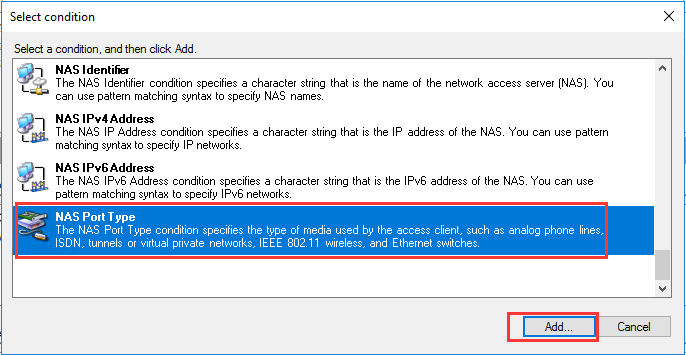

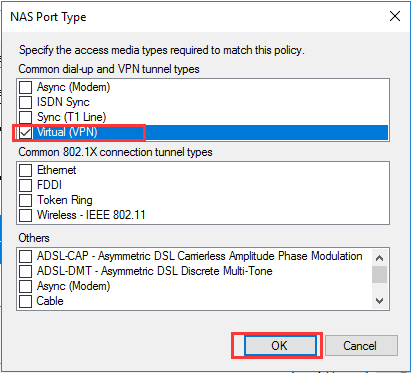

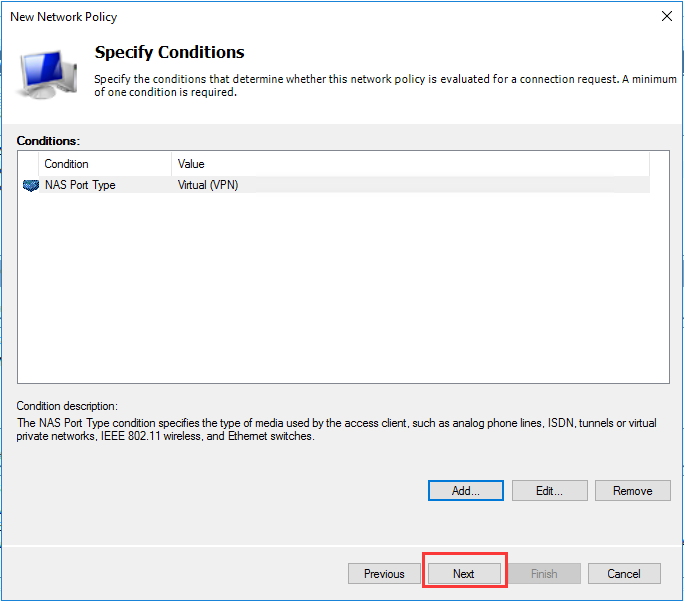

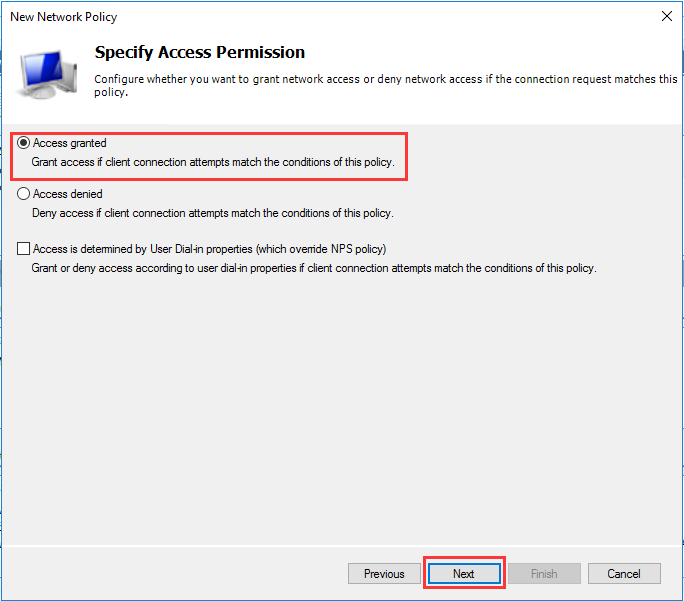

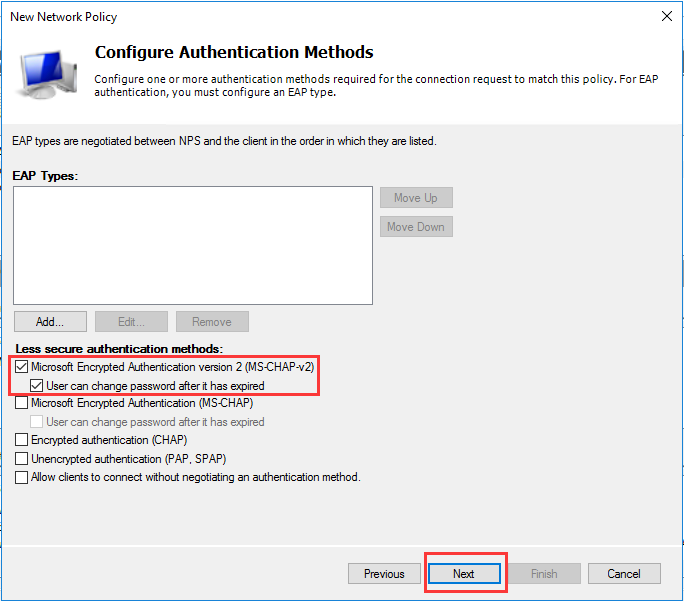

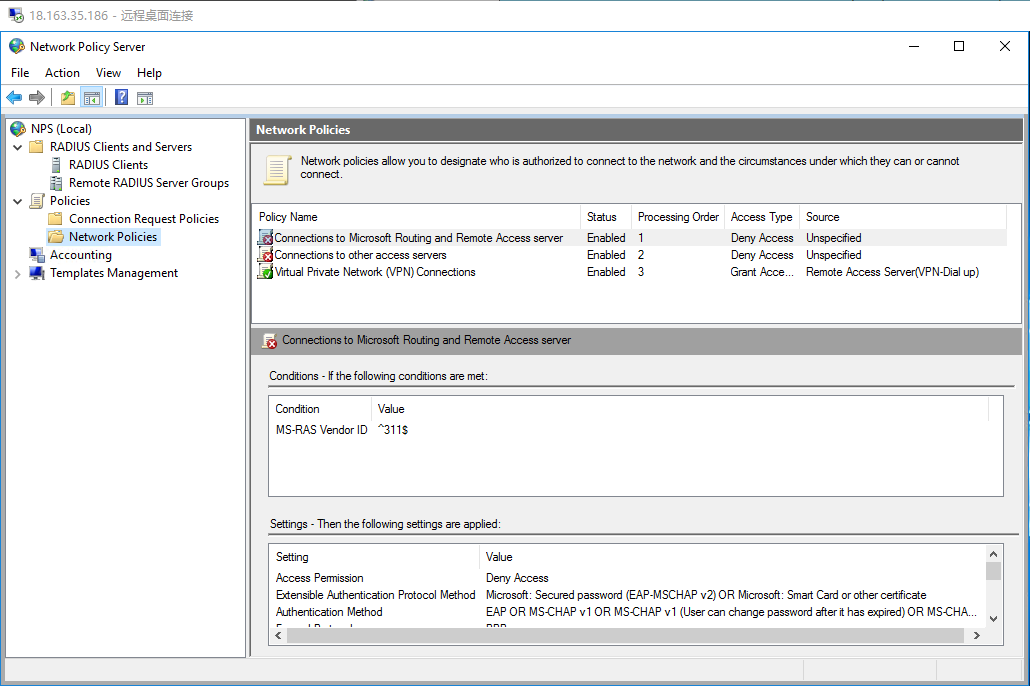

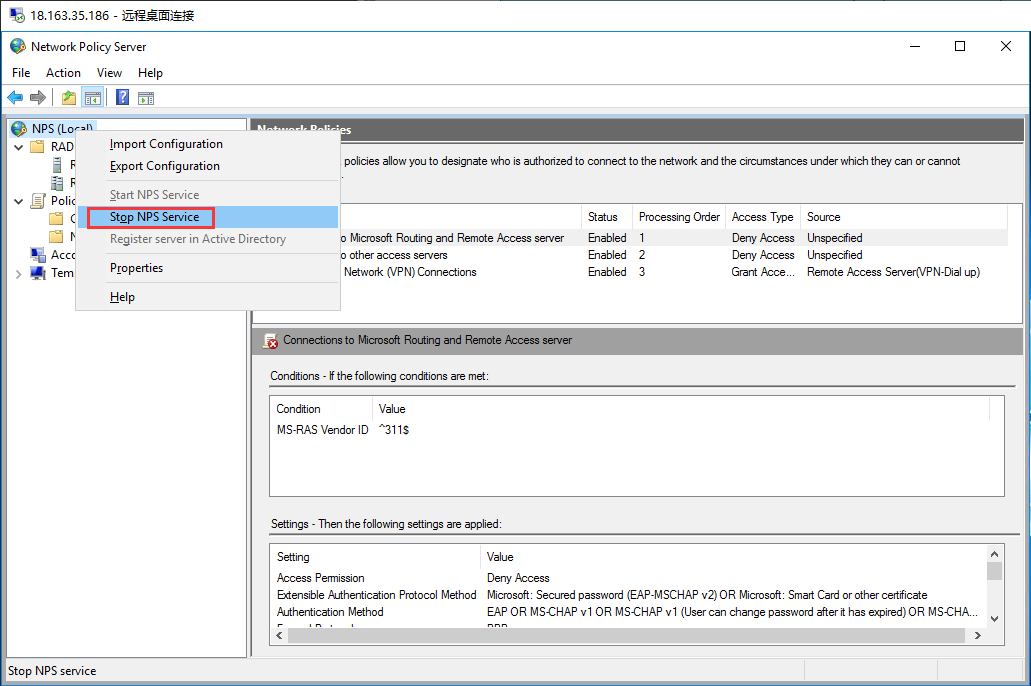

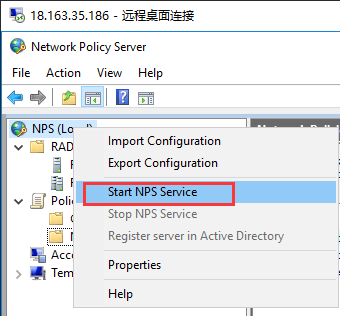

Windows 2016 NPS RADIUS service configuration

Host list

    DC    18.163.111.34
    NPS   18.163.35.186
    RRAS  18.162.114.236
    PC    18.163.117.102

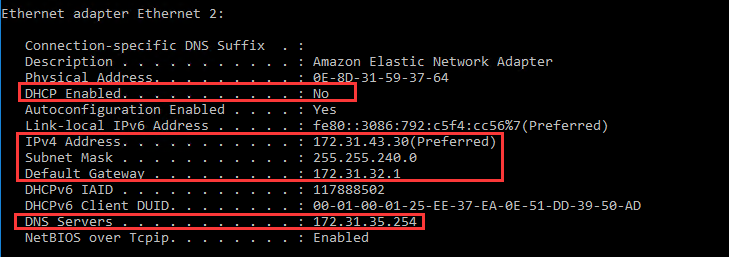

Change the host to a static IP address and point its DNS at the AD DC server

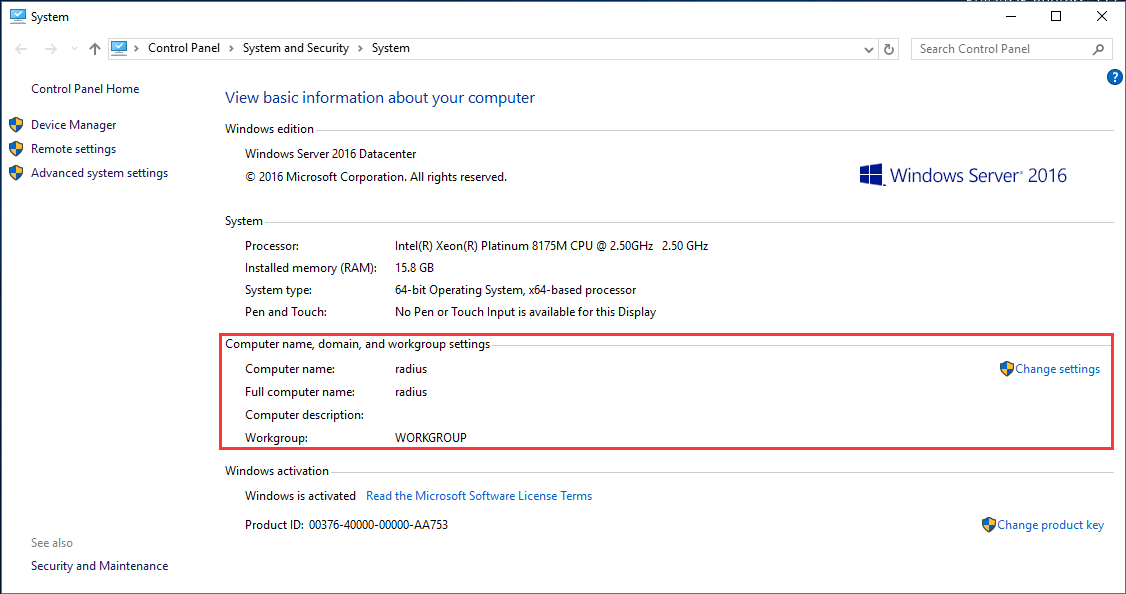

Change the hostname

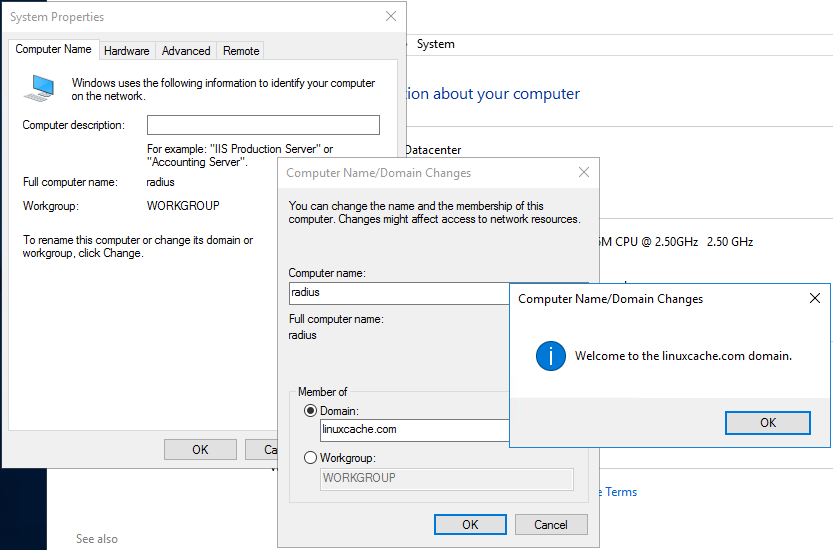

Join the NPS host to the domain

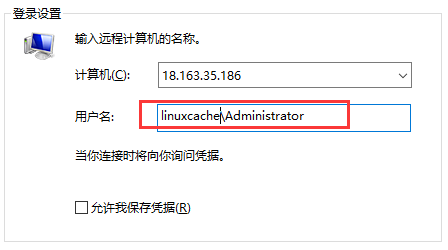

After rebooting, log in to the NPS host with the domain administrator account

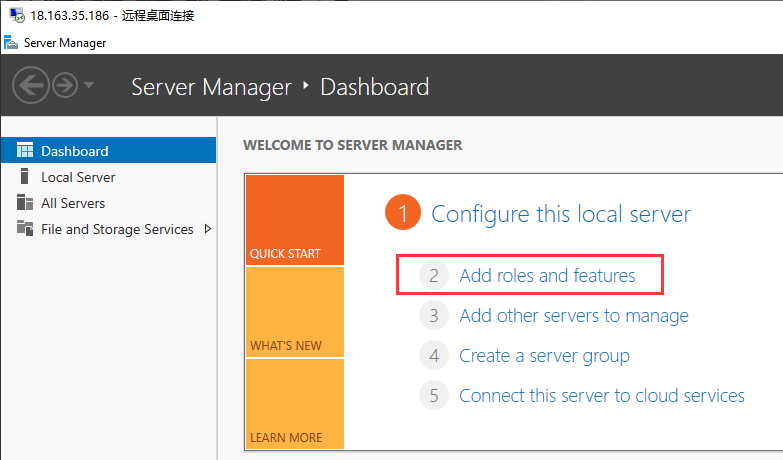

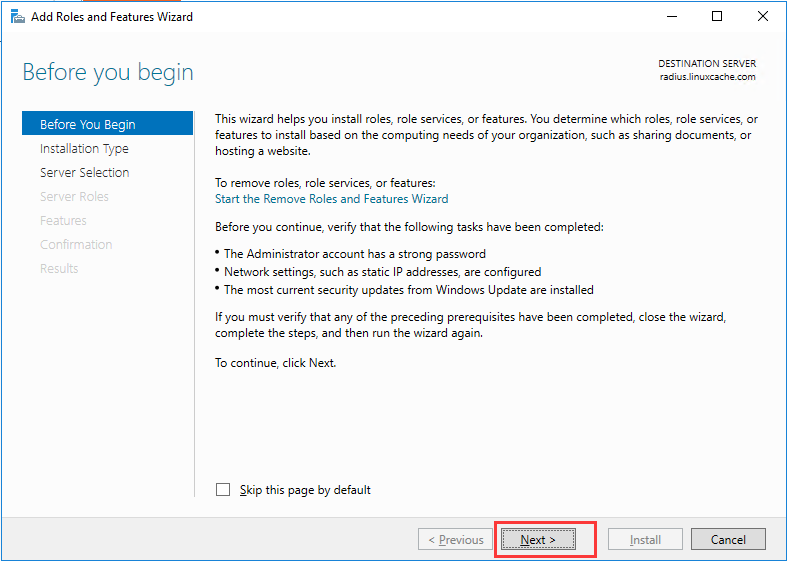

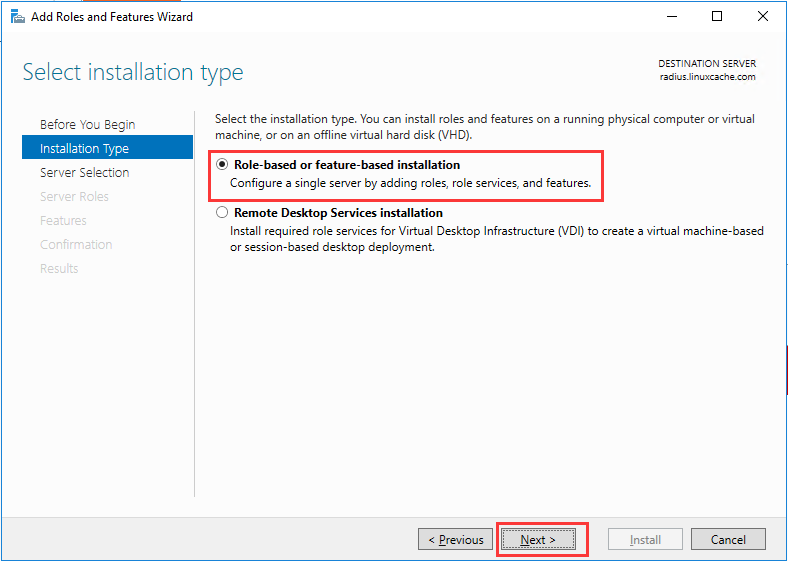

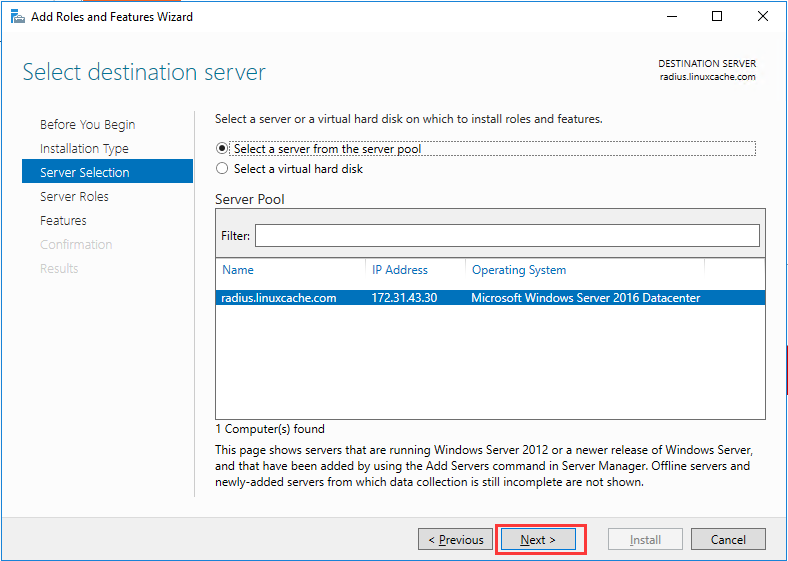

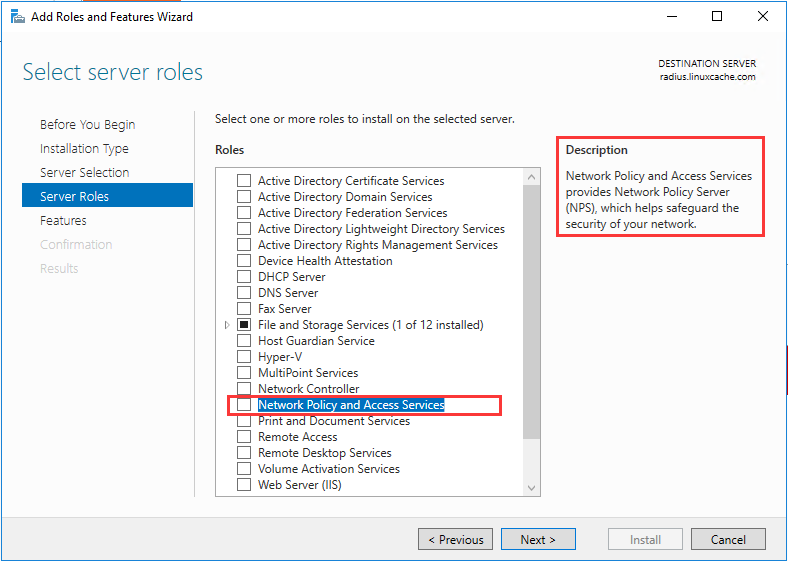

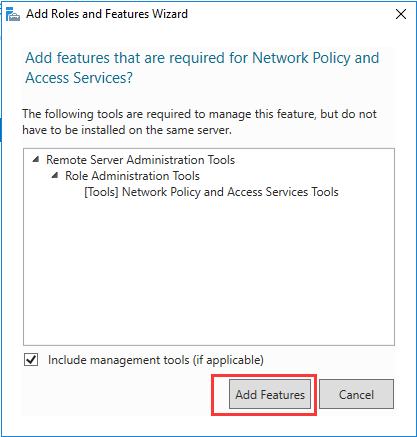

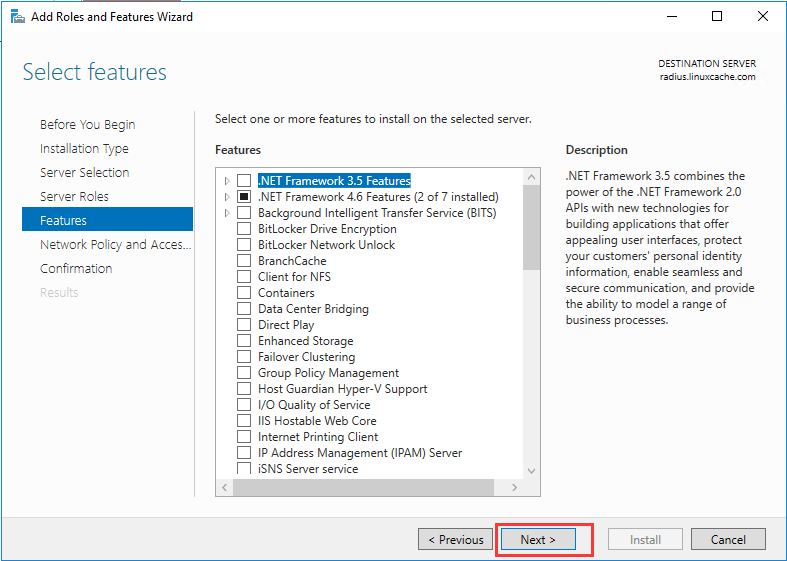

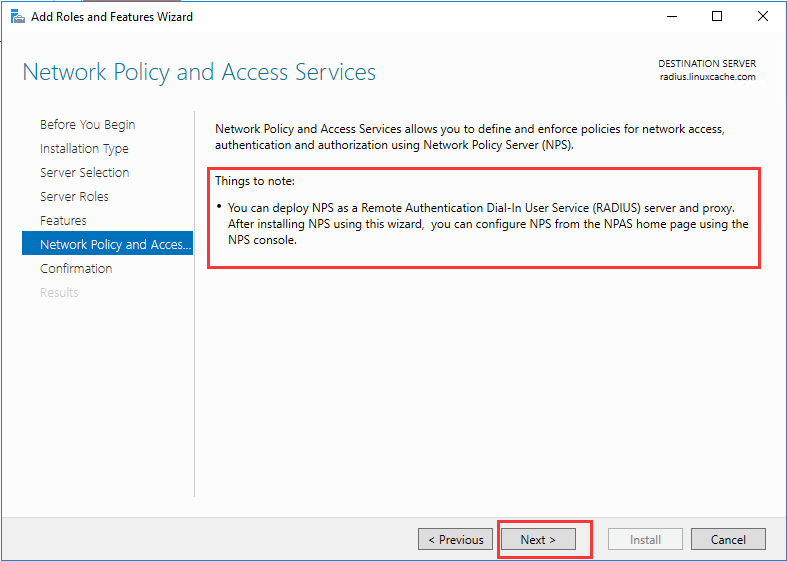

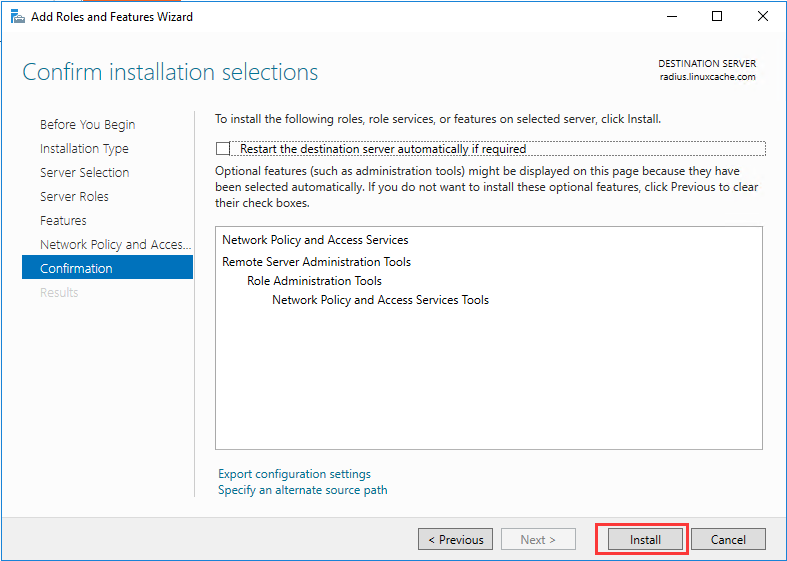

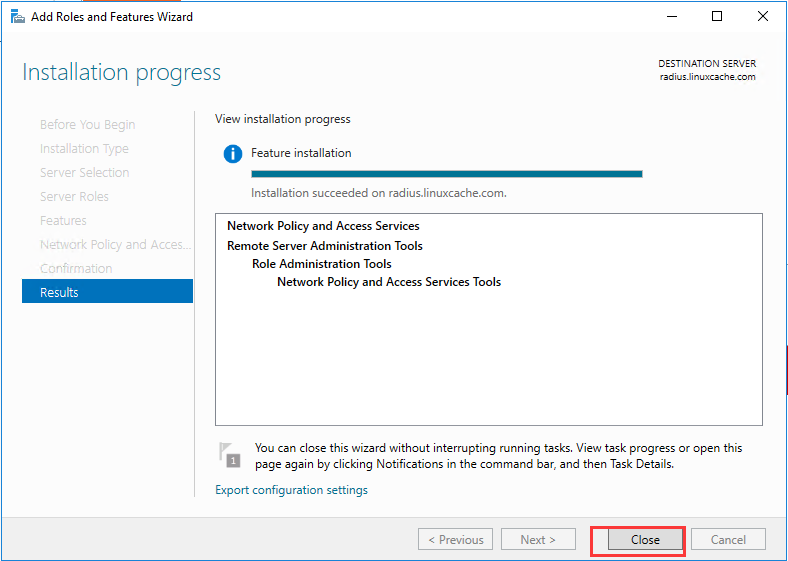

Add the role

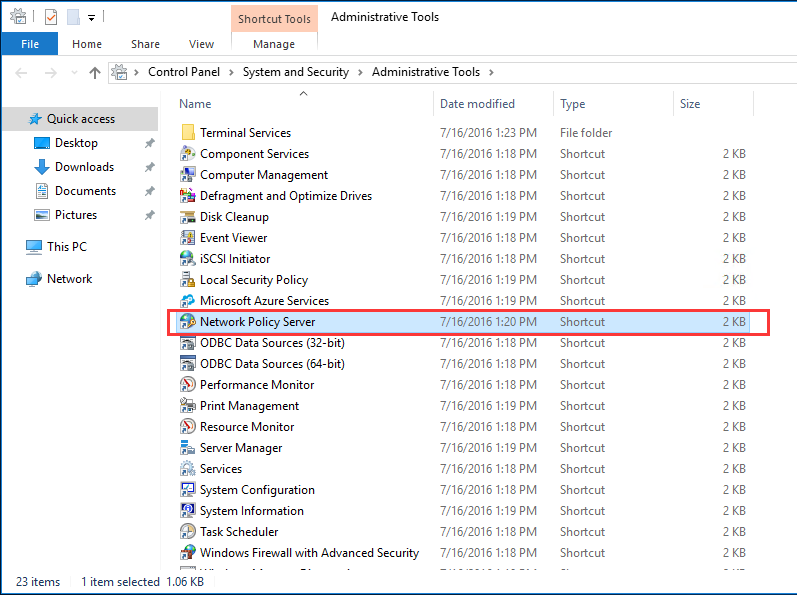

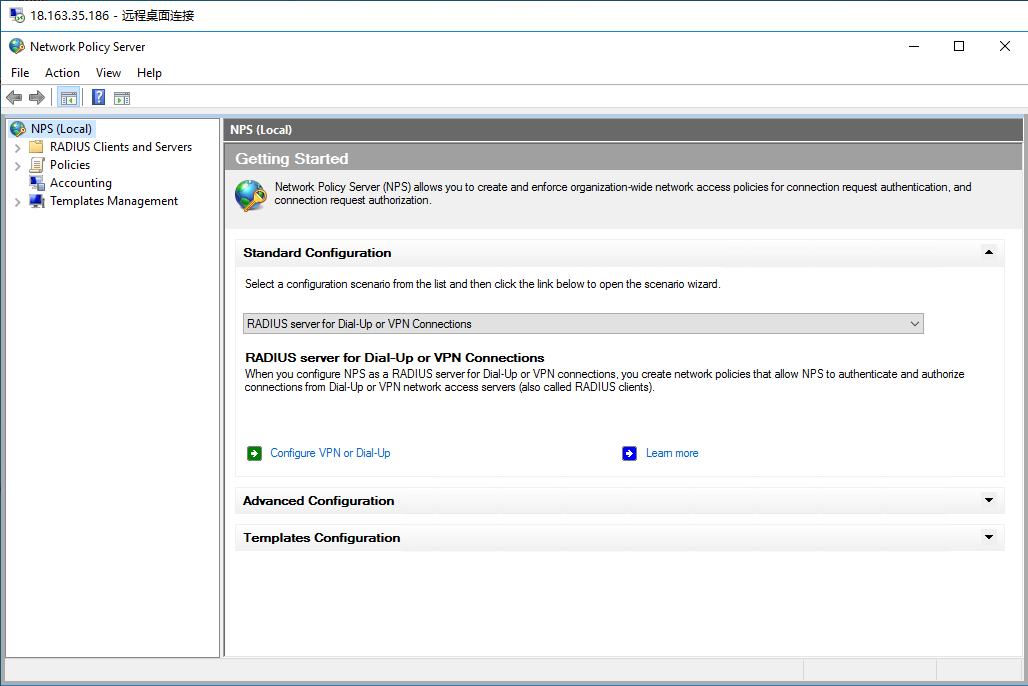

Start and configure the NPS service

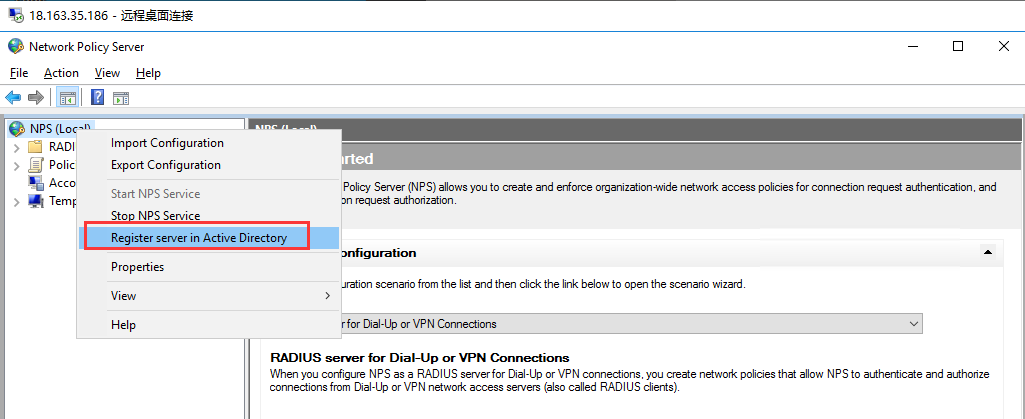

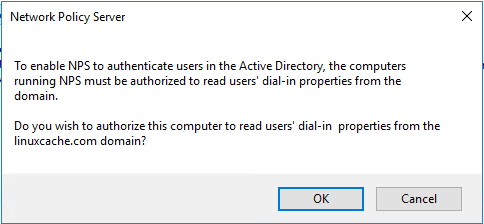

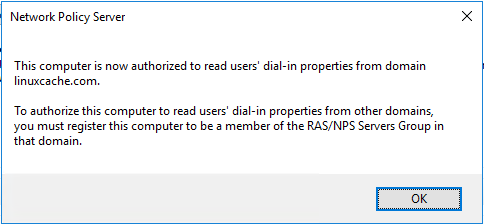

Register NPS in Active Directory Domain Services

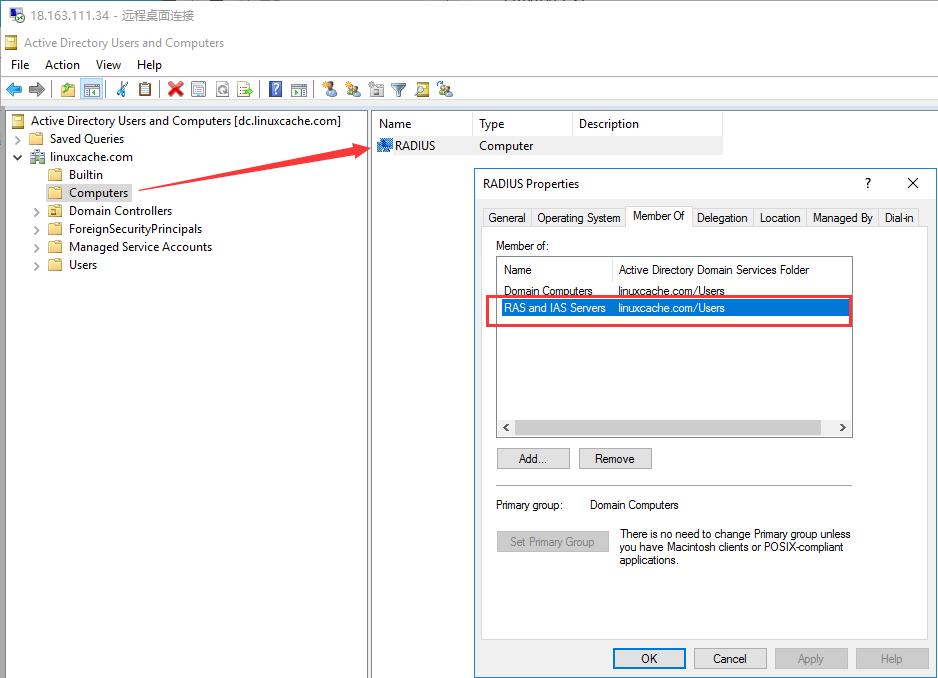

In Active Directory, view the group membership of the domain-joined RADIUS computer

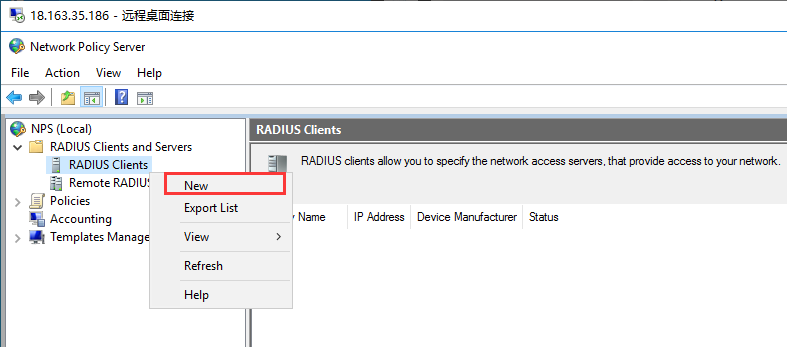

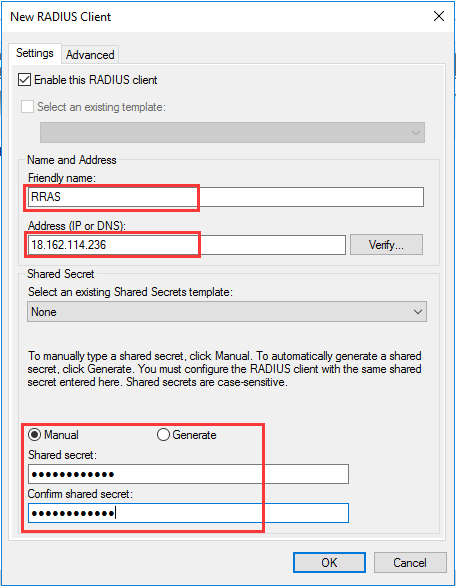

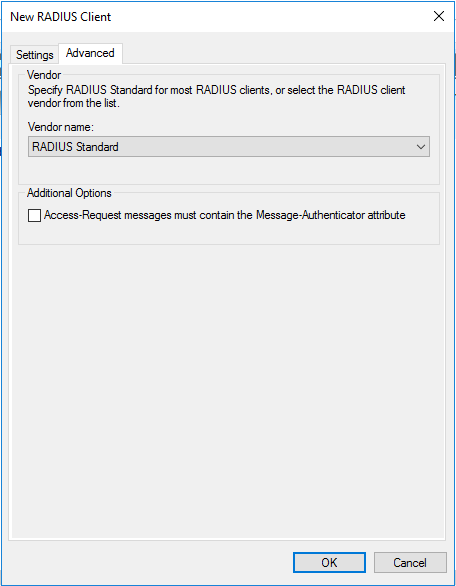

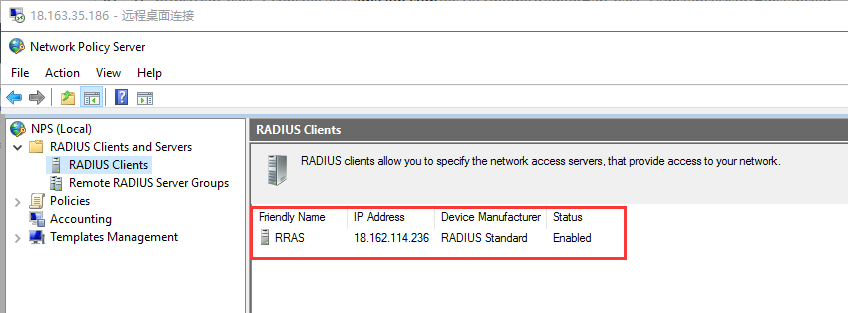

Configure the RADIUS client

Restart the NPS service

Disable SELinux

    [root@ip-172-31-37-47 ~]# sudo setenforce 0
    [root@ip-172-31-37-47 ~]# sudo sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
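The two commands act at different times: `setenforce 0` switches the running system to permissive mode until the next boot, while the `sed` edit changes what SELinux does from the next boot onward. A minimal sketch for confirming the persistent setting follows. `selinux_config_mode` is a hypothetical helper, and the default path is the one used above.

```shell
# selinux_config_mode [FILE]
# Print the value of the SELINUX= line in FILE (default /etc/selinux/config).
# The pattern is anchored, so comment lines and SELINUXTYPE= are ignored.
# (Hypothetical helper for illustration only.)
selinux_config_mode() {
    sed -n 's/^SELINUX=\([a-z]*\).*/\1/p' "${1:-/etc/selinux/config}" | tail -n 1
}

# Example (not executed here):
#   selinux_config_mode      # persistent setting from the config file
#   getenforce               # mode of the running system
```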

Transfer the JDK installer using ZMODEM

    [root@ip-172-31-37-47 ~]# yum -y install lrzsz
    [root@ip-172-31-37-47 ~]# rz
    rz waiting to receive.
    Starting zmodem transfer.  Press Ctrl+C to cancel.
    Transferring jdk-8u241-linux-x64.rpm...
      100%  174745 KB     417 KB/sec    00:06:59       0 Errors
    [root@ip-172-31-37-47 ~]#

Install the Java runtime environment

    [root@ip-172-31-37-47 ~]# yum -y install jdk-8u241-linux-x64.rpm
    [root@ip-172-31-37-47 ~]# java -version
    java version "1.8.0_241"
    Java(TM) SE Runtime Environment (build 1.8.0_241-b07)
    Java HotSpot(TM) 64-Bit Server VM (build 25.241-b07, mixed mode)
    [root@ip-172-31-37-47 ~]#

Add an unprivileged user

    [root@ip-172-31-37-47 ~]# adduser hadoop
    [root@ip-172-31-37-47 ~]# passwd hadoop
    Changing password for user hadoop.
    New password:
    Retype new password:
    passwd: all authentication tokens updated successfully.
    [root@ip-172-31-37-47 ~]#

Switch to the new user and generate an SSH key pair

    [root@ip-172-31-37-47 ~]# su - hadoop
    [hadoop@ip-172-31-37-47 ~]$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
    Generating public/private rsa key pair.
    Created directory '/home/hadoop/.ssh'.
    Your identification has been saved in /home/hadoop/.ssh/id_rsa.
    Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.
    The key fingerprint is:
    SHA256:Ji2ZgPoh744id3m6UkIqNzAShPYKLagwVtnulKJWepA hadoop@ip-172-31-37-47.ap-east-1.compute.internal
    The key's randomart image is:
    +---[RSA 2048]----+
    |o. o |
    |o. + . |
    |oo= o . |
    |XEo+ = + |
    |OBB + = S |
    |+O+o.. + |
    |o.++ . |
    |ooo o . |
    |+oooo+ |
    +----[SHA256]-----+
    [hadoop@ip-172-31-37-47 ~]$ cat .ssh/id_rsa.pub >> .ssh/authorized_keys
    [hadoop@ip-172-31-37-47 ~]$ chmod 0600 .ssh/authorized_keys
    [hadoop@ip-172-31-37-47 ~]$
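The `chmod 0600` step matters because sshd, with its default `StrictModes` setting, can refuse key authentication when `authorized_keys` is writable by other users. The sketch below checks a file's permission bits. `has_mode` is a hypothetical helper and relies on GNU `stat -c`, as found on CentOS.

```shell
# has_mode FILE MODE
# Succeed if FILE's octal permission bits equal MODE (e.g. 600).
# Uses GNU stat; the -c option is not available on BSD/macOS stat.
# (Hypothetical helper for illustration only.)
has_mode() {
    [ "$(stat -c '%a' "$1")" = "$2" ]
}

# Example (not executed here): check the file, then test a non-interactive login.
#   has_mode ~/.ssh/authorized_keys 600 && ssh -o BatchMode=yes localhost true
```

With `BatchMode=yes`, ssh fails instead of prompting for a password, so a zero exit status indicates that key-based login works.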

Download the Hadoop binary package (version 3.1.3 here)

    [hadoop@ip-172-31-37-47 ~]$ curl -O https://mirrors.ustc.edu.cn/apache/hadoop/common/hadoop-3.1.3/hadoop-3.1.3.tar.gz
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100  322M  100  322M    0     0  8877k      0  0:00:37  0:00:37 --:--:-- 16.8M
    [hadoop@ip-172-31-37-47 ~]$
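Because the tarball comes from a mirror, it is worth comparing its SHA-512 digest with the one published on the official Apache release page before unpacking it. A minimal sketch follows. `verify_sha512` is a hypothetical helper, and the expected digest is something you must copy from the official site yourself; none is given here.

```shell
# verify_sha512 FILE EXPECTED_HEX
# Succeed if the SHA-512 digest of FILE equals EXPECTED_HEX.
# EXPECTED_HEX may be upper- or lowercase; it is lowercased before comparing.
# (Hypothetical helper for illustration only.)
verify_sha512() {
    expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
    actual=$(sha512sum "$1" | awk '{ print $1 }')
    [ "$actual" = "$expected" ]
}

# Example (not executed here; EXPECTED is a placeholder you fill in):
#   verify_sha512 hadoop-3.1.3.tar.gz "$EXPECTED" || echo "checksum mismatch" >&2
```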

Extract the archive and create a symbolic link

    [hadoop@ip-172-31-37-47 ~]$ tar xzf hadoop-3.1.3.tar.gz
    [hadoop@ip-172-31-37-47 ~]$ ln -s hadoop-3.1.3 hadoop
    [hadoop@ip-172-31-37-47 ~]$

Edit the current user's environment variables

    [hadoop@ip-172-31-37-47 ~]$ vi .bashrc

    export HADOOP_HOME=/home/hadoop/hadoop
    export HADOOP_INSTALL=$HADOOP_HOME
    export HADOOP_MAPRED_HOME=$HADOOP_HOME
    export HADOOP_COMMON_HOME=$HADOOP_HOME
    export HADOOP_HDFS_HOME=$HADOOP_HOME
    export YARN_HOME=$HADOOP_HOME
    export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
    export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

Apply the environment variables

    [hadoop@ip-172-31-37-47 ~]$ source .bashrc
    [hadoop@ip-172-31-37-47 ~]$
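After sourcing `.bashrc`, the `hdfs` and `start-dfs.sh` commands are found only if the Hadoop `bin` and `sbin` directories actually ended up on `PATH`. A minimal sketch of that check follows. `path_has_dir` is a hypothetical helper that does an exact match against the colon-separated entries.

```shell
# path_has_dir DIR
# Succeed if DIR is one of the colon-separated entries of $PATH.
# Wrapping both sides in ":" avoids matching DIR as a mere prefix.
# (Hypothetical helper for illustration only.)
path_has_dir() {
    case ":$PATH:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example (not executed here):
#   path_has_dir "$HADOOP_HOME/bin" && path_has_dir "$HADOOP_HOME/sbin" \
#       || echo "Hadoop directories are missing from PATH" >&2
```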

Set the JAVA_HOME path in the Hadoop configuration

Change to the configuration directory and edit the configuration files

    [hadoop@ip-172-31-37-47 ~]$ cd hadoop/etc/hadoop/
    [hadoop@ip-172-31-37-47 hadoop]$ ls
    capacity-scheduler.xml            httpfs-log4j.properties     mapred-site.xml
    configuration.xsl                 httpfs-signature.secret     shellprofile.d
    container-executor.cfg            httpfs-site.xml             ssl-client.xml.example
    core-site.xml                     kms-acls.xml                ssl-server.xml.example
    hadoop-env.cmd                    kms-env.sh                  user_ec_policies.xml.template
    hadoop-env.sh                     kms-log4j.properties        workers
    hadoop-metrics2.properties        kms-site.xml                yarn-env.cmd
    hadoop-policy.xml                 log4j.properties            yarn-env.sh
    hadoop-user-functions.sh.example  mapred-env.cmd              yarnservice-log4j.properties
    hdfs-site.xml                     mapred-env.sh               yarn-site.xml
    httpfs-env.sh                     mapred-queues.xml.template
    [hadoop@ip-172-31-37-47 hadoop]$

    [hadoop@ip-172-31-37-47 hadoop]$ vi hadoop-env.sh

    # The java implementation to use. By default, this environment
    # variable is REQUIRED on ALL platforms except OS X!
    # export JAVA_HOME=
    export JAVA_HOME=/usr/java/jdk1.8.0_241-amd64

    [hadoop@ip-172-31-37-47 hadoop]$ vi core-site.xml

    <configuration>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://localhost:9000</value>
      </property>
    </configuration>

    [hadoop@ip-172-31-37-47 hadoop]$ vi hdfs-site.xml

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.name.dir</name>
        <value>file:///home/hadoop/hadoopdata/hdfs/namenode</value>
      </property>
      <property>
        <name>dfs.data.dir</name>
        <value>file:///home/hadoop/hadoopdata/hdfs/datanode</value>
      </property>
    </configuration>

    [hadoop@ip-172-31-37-47 hadoop]$ vi yarn-site.xml

    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
    </configuration>
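A quick way to confirm what was written to these files is to read a property back. The sketch below does that with `tr` and `sed`. `hadoop_property` is a hypothetical helper, not a Hadoop command. It assumes each `<name>` is immediately followed by its `<value>` and appears once per file, and it is not a general XML parser.

```shell
# hadoop_property NAME FILE
# Print the <value> that follows <name>NAME</name> in a Hadoop *-site.xml file.
# The file is flattened to one line first so that name and value may sit on
# separate lines. Dots in NAME are treated as regex wildcards (good enough
# for a sketch).
# (Hypothetical helper for illustration only.)
hadoop_property() {
    tr -d '\n' < "$2" |
        sed -n "s|.*<name>$1</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}

# Example (not executed here):
#   hadoop_property dfs.replication "$HADOOP_HOME/etc/hadoop/hdfs-site.xml"
```

Hadoop's own `hdfs getconf -confKey dfs.replication` reports the effective value after defaults are applied. Note that `fs.default.name`, `dfs.name.dir`, and `dfs.data.dir` are deprecated names that Hadoop 3 still accepts; the current names are `fs.defaultFS`, `dfs.namenode.name.dir`, and `dfs.datanode.data.dir`.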

Format the NameNode

[hadoop@ip-172-31-37-47 ~]$ hdfs namenode -format WARNING: /home/hadoop/hadoop/logs does not exist. Creating. 2020-03-06 03:49:28,538 INFO namenode.NameNode: STARTUP_MSG: /************************************************************ STARTUP_MSG: Starting NameNode STARTUP_MSG: host = ip-172-31-37-47.ap-east-1.compute.internal/172.31.37.47 STARTUP_MSG: args = [-format] STARTUP_MSG: version = 3.1.3 STARTUP_MSG: classpath = /home/hadoop/hadoop/etc/hadoop:/home/hadoop/hadoop/share/hadoop/common/lib/accessors-smart-1.2.jar:/home/hadoop/hadoop/share/hadoop/common/lib/animal-sniffer-annotations-1.17.jar:/home/hadoop/hadoop/share/hadoop/common/lib/asm-5.0.4.jar:/home/hadoop/hadoop/share/hadoop/common/lib/audience-annotations-0.5.0.jar:/home/hadoop/hadoop/share/hadoop/common/lib/avro-1.7.7.jar:/home/hadoop/hadoop/share/hadoop/common/lib/checker-qual-2.5.2.jar:/home/hadoop/hadoop/share/hadoop/common/lib/commons-beanutils-1.9.3.jar:/home/hadoop/hadoop/share/hadoop/common/lib/commons-cli-1.2.jar:/home/hadoop/hadoop/share/hadoop/common/lib/commons-codec-1.11.jar:/home/hadoop/hadoop/share/hadoop/common/lib/commons-collections-3.2.2.jar:/home/hadoop/hadoop/share/hadoop/common/lib/commons-compress-1.18.jar:/home/hadoop/hadoop/share/hadoop/common/lib/commons-configuration2-2.1.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/commons-io-2.5.jar:/home/hadoop/hadoop/share/hadoop/common/lib/commons-lang-2.6.jar:/home/hadoop/hadoop/share/hadoop/common/lib/commons-lang3-3.4.jar:/home/hadoop/hadoop/share/hadoop/common/lib/commons-logging-1.1.3.jar:/home/hadoop/hadoop/share/hadoop/common/lib/commons-math3-3.1.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/commons-net-3.6.jar:/home/hadoop/hadoop/share/hadoop/common/lib/curator-client-2.13.0.jar:/home/hadoop/hadoop/share/hadoop/common/lib/curator-framework-2.13.0.jar:/home/hadoop/hadoop/share/hadoop/common/lib/curator-recipes-2.13.0.jar:/home/hadoop/hadoop/share/hadoop/common/lib/error_prone_annotations-2.2.0.jar:/home/hadoop/hadoop/share/
hadoop/common/lib/failureaccess-1.0.jar:/home/hadoop/hadoop/share/hadoop/common/lib/gson-2.2.4.jar:/home/hadoop/hadoop/share/hadoop/common/lib/guava-27.0-jre.jar:/home/hadoop/hadoop/share/hadoop/common/lib/hadoop-annotations-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/common/lib/hadoop-auth-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/common/lib/htrace-core4-4.1.0-incubating.jar:/home/hadoop/hadoop/share/hadoop/common/lib/httpclient-4.5.2.jar:/home/hadoop/hadoop/share/hadoop/common/lib/httpcore-4.4.4.jar:/home/hadoop/hadoop/share/hadoop/common/lib/j2objc-annotations-1.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jackson-annotations-2.7.8.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jackson-core-2.7.8.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jackson-databind-2.7.8.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/home/hadoop/hadoop/share/hadoop/common/lib/javax.servlet-api-3.1.0.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jaxb-api-2.2.11.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jcip-annotations-1.0-1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jersey-core-1.19.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jersey-json-1.19.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jersey-server-1.19.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jersey-servlet-1.19.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jettison-1.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jetty-http-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jetty-io-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jetty-security-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jetty-server-9.3.24.v20180605.jar:/home/hadoop/hadoop/sh
are/hadoop/common/lib/jetty-servlet-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jetty-util-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jetty-webapp-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jetty-xml-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jsch-0.1.54.jar:/home/hadoop/hadoop/share/hadoop/common/lib/json-smart-2.3.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jsr305-3.0.0.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jsr311-api-1.1.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerb-admin-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerb-client-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerb-common-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerb-core-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerb-crypto-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerb-identity-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerb-server-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerb-simplekdc-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerb-util-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerby-asn1-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerby-config-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerby-pkix-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerby-util-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/kerby-xdr-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar:/home/hadoop/hadoop/share/hadoop/common/lib/log4j-1.2.17.jar:/home/hadoop/hadoop/share/hadoop/common/lib/netty-3.10.5.Final.jar:/home/hadoop/hadoop/share/hadoop/common/lib/nimbus-jose-jwt-4.41.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/home/hadoop/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/home/hadoop/hadoop/share/hadoop/common/lib/re2j-
1.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/slf4j-api-1.7.25.jar:/home/hadoop/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar:/home/hadoop/hadoop/share/hadoop/common/lib/snappy-java-1.0.5.jar:/home/hadoop/hadoop/share/hadoop/common/lib/stax2-api-3.1.4.jar:/home/hadoop/hadoop/share/hadoop/common/lib/token-provider-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/common/lib/woodstox-core-5.0.3.jar:/home/hadoop/hadoop/share/hadoop/common/lib/zookeeper-3.4.13.jar:/home/hadoop/hadoop/share/hadoop/common/lib/jul-to-slf4j-1.7.25.jar:/home/hadoop/hadoop/share/hadoop/common/lib/metrics-core-3.2.4.jar:/home/hadoop/hadoop/share/hadoop/common/hadoop-common-3.1.3-tests.jar:/home/hadoop/hadoop/share/hadoop/common/hadoop-common-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/common/hadoop-nfs-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/common/hadoop-kms-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs:/home/hadoop/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jetty-util-ajax-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/netty-all-4.0.52.Final.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/okhttp-2.7.5.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/okio-1.6.0.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jersey-servlet-1.19.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jersey-json-1.19.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/hadoop-auth-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/commons-codec-1.11.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/httpclient-4.5.2.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/httpcore-4.4.4.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/nimbus-jose-jwt-4.41.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jcip-annotations-1.0-1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/json-smart-
2.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/accessors-smart-1.2.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/asm-5.0.4.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/zookeeper-3.4.13.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/audience-annotations-0.5.0.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/netty-3.10.5.Final.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/curator-framework-2.13.0.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/curator-client-2.13.0.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/guava-27.0-jre.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/failureaccess-1.0.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jsr305-3.0.0.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/checker-qual-2.5.2.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/error_prone_annotations-2.2.0.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/j2objc-annotations-1.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/animal-sniffer-annotations-1.17.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerb-simplekdc-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerb-client-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerby-config-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerb-core-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerby-pkix-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerby-asn1-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerby-util-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerb-common-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerb-crypto-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/commons-io-2.5.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerb-util-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/token-provider-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerb-admin-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerb-server-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerb-identity-1.0.1
.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/kerby-xdr-1.0.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jersey-core-1.19.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jsr311-api-1.1.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jersey-server-1.19.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/javax.servlet-api-3.1.0.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/json-simple-1.1.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jetty-server-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jetty-http-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jetty-util-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jetty-io-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jetty-webapp-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jetty-xml-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jetty-servlet-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jetty-security-9.3.24.v20180605.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/hadoop-annotations-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/commons-math3-3.1.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/commons-net-3.6.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/commons-collections-3.2.2.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jettison-1.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jaxb-impl-2.2.3-1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jaxb-api-2.2.11.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jackson-jaxrs-1.9.13.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jackson-xc-1.9.13.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/commons-beanutils-1.9.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/commons-configuration2-2.1.1.jar:/home/had
oop/hadoop/share/hadoop/hdfs/lib/commons-lang3-3.4.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/avro-1.7.7.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/paranamer-2.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/snappy-java-1.0.5.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/commons-compress-1.18.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/re2j-1.1.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/gson-2.2.4.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jsch-0.1.54.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/curator-recipes-2.13.0.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/htrace-core4-4.1.0-incubating.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jackson-databind-2.7.8.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jackson-annotations-2.7.8.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/jackson-core-2.7.8.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/stax2-api-3.1.4.jar:/home/hadoop/hadoop/share/hadoop/hdfs/lib/woodstox-core-5.0.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-3.1.3-tests.jar:/home/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-nfs-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-client-3.1.3-tests.jar:/home/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-client-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-native-client-3.1.3-tests.jar:/home/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-native-client-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-rbf-3.1.3-tests.jar:/home/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-rbf-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/hdfs/hadoop-hdfs-httpfs-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/home/hadoop/hadoop/share/hadoop/mapreduce/lib/junit-4.11.jar:/home/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-3.1.3.jar:
/home/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.1.3-tests.jar:/home/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-nativetask-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-uploader-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn:/home/hadoop/hadoop/share/hadoop/yarn/lib/HikariCP-java7-2.4.12.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/dnsjava-2.1.7.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/ehcache-3.3.1.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/fst-2.50.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/geronimo-jcache_1.0_spec-1.0-alpha-1.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/guice-4.0.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/guice-servlet-4.0.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/jackson-jaxrs-base-2.7.8.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/jackson-jaxrs-json-provider-2.7.8.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/jackson-module-jaxb-annotations-2.7.8.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/java-util-1.9.0.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/jersey-client-1.19.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/jersey-guice-1.19.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/json-io-2.5.1.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/metrics-core-3.2.4.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/mssql-jdbc-6.2.1.jre7.jar:/home/
hadoop/hadoop/share/hadoop/yarn/lib/objenesis-1.0.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/snakeyaml-1.16.jar:/home/hadoop/hadoop/share/hadoop/yarn/lib/swagger-annotations-1.5.4.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-api-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-client-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-common-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-registry-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-router-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-timeline-pluginstorage-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-services-api-3.1.3.jar:/home/hadoop/hadoop/share/hadoop/yarn/hadoop-yarn-services-core-3.1.3.jar
STARTUP_MSG: build = https://gitbox.apache.org/repos/asf/hadoop.git -r ba631c436b806728f8ec2f54ab1e289526c90579; compiled by 'ztang' on 2019-09-12T02:47Z
STARTUP_MSG: java = 1.8.0_241
************************************************************/
2020-03-06 03:49:28,545 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
2020-03-06 03:49:28,629 INFO namenode.NameNode: createNameNode [-format]
2020-03-06 03:49:28,725 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Formatting using clusterid: CID-e8b28488-ea5a-40b3-8ba7-f6e4c14d0e0f
2020-03-06 03:49:29,071 INFO namenode.FSEditLog: Edit logging is async:true
2020-03-06 03:49:29,082 INFO namenode.FSNamesystem: KeyProvider: null
2020-03-06 03:49:29,083 INFO namenode.FSNamesystem: fsLock is fair: true
2020-03-06 03:49:29,083 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false
2020-03-06 03:49:29,086 INFO namenode.FSNamesystem: fsOwner = hadoop (auth:SIMPLE)
2020-03-06 03:49:29,087 INFO namenode.FSNamesystem: supergroup = supergroup
2020-03-06 03:49:29,087 INFO namenode.FSNamesystem: isPermissionEnabled = true
2020-03-06 03:49:29,087 INFO namenode.FSNamesystem: HA Enabled: false
2020-03-06 03:49:29,120 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling
2020-03-06 03:49:29,128 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000
2020-03-06 03:49:29,128 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
2020-03-06 03:49:29,131 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
2020-03-06 03:49:29,134 INFO blockmanagement.BlockManager: The block deletion will start around 2020 Mar 06 03:49:29
2020-03-06 03:49:29,135 INFO util.GSet: Computing capacity for map BlocksMap
2020-03-06 03:49:29,135 INFO util.GSet: VM type = 64-bit
2020-03-06 03:49:29,136 INFO util.GSet: 2.0% max memory 3.4 GB = 69.8 MB
2020-03-06 03:49:29,136 INFO util.GSet: capacity = 2^23 = 8388608 entries
2020-03-06 03:49:29,151 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false
2020-03-06 03:49:29,156 INFO Configuration.deprecation: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS
2020-03-06 03:49:29,156 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
2020-03-06 03:49:29,156 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0
2020-03-06 03:49:29,156 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000
2020-03-06 03:49:29,157 INFO blockmanagement.BlockManager: defaultReplication = 1
2020-03-06 03:49:29,157 INFO blockmanagement.BlockManager: maxReplication = 512
2020-03-06 03:49:29,157 INFO blockmanagement.BlockManager: minReplication = 1
2020-03-06 03:49:29,157 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
2020-03-06 03:49:29,157 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms
2020-03-06 03:49:29,157 INFO blockmanagement.BlockManager: encryptDataTransfer = false
2020-03-06 03:49:29,157 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
2020-03-06 03:49:29,199 INFO namenode.FSDirectory: GLOBAL serial map: bits=24 maxEntries=16777215
2020-03-06 03:49:29,212 INFO util.GSet: Computing capacity for map INodeMap
2020-03-06 03:49:29,212 INFO util.GSet: VM type = 64-bit
2020-03-06 03:49:29,212 INFO util.GSet: 1.0% max memory 3.4 GB = 34.9 MB
2020-03-06 03:49:29,212 INFO util.GSet: capacity = 2^22 = 4194304 entries
2020-03-06 03:49:29,214 INFO namenode.FSDirectory: ACLs enabled? false
2020-03-06 03:49:29,214 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true
2020-03-06 03:49:29,214 INFO namenode.FSDirectory: XAttrs enabled? true
2020-03-06 03:49:29,214 INFO namenode.NameNode: Caching file names occurring more than 10 times
2020-03-06 03:49:29,218 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536
2020-03-06 03:49:29,219 INFO snapshot.SnapshotManager: SkipList is disabled
2020-03-06 03:49:29,222 INFO util.GSet: Computing capacity for map cachedBlocks
2020-03-06 03:49:29,222 INFO util.GSet: VM type = 64-bit
2020-03-06 03:49:29,222 INFO util.GSet: 0.25% max memory 3.4 GB = 8.7 MB
2020-03-06 03:49:29,222 INFO util.GSet: capacity = 2^20 = 1048576 entries
2020-03-06 03:49:29,253 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10
2020-03-06 03:49:29,253 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10
2020-03-06 03:49:29,253 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25
2020-03-06 03:49:29,257 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
2020-03-06 03:49:29,257 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
2020-03-06 03:49:29,258 INFO util.GSet: Computing capacity for map NameNodeRetryCache
2020-03-06 03:49:29,258 INFO util.GSet: VM type = 64-bit
2020-03-06 03:49:29,259 INFO util.GSet: 0.029999999329447746% max memory 3.4 GB = 1.0 MB
2020-03-06 03:49:29,259 INFO util.GSet: capacity = 2^17 = 131072 entries
2020-03-06 03:49:29,279 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1445179826-172.31.37.47-1583466569274
2020-03-06 03:49:29,289 INFO common.Storage: Storage directory /home/hadoop/hadoopdata/hdfs/namenode has been successfully formatted.
2020-03-06 03:49:29,310 INFO namenode.FSImageFormatProtobuf: Saving image file /home/hadoop/hadoopdata/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 using no compression
2020-03-06 03:49:29,386 INFO namenode.FSImageFormatProtobuf: Image file /home/hadoop/hadoopdata/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 of size 393 bytes saved in 0 seconds .
2020-03-06 03:49:29,395 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
2020-03-06 03:49:29,399 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid = 0 when meet shutdown.
2020-03-06 03:49:29,399 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at ip-172-31-37-47.ap-east-1.compute.internal/172.31.37.47
************************************************************/
[hadoop@ip-172-31-37-47 ~]$
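A quick way to confirm the format took effect is to read back the `VERSION` file that the NameNode writes under `current/` in the storage directory. The sketch below assumes the storage directory from the log above; `version_field` is a hypothetical helper name, not a Hadoop command.

```shell
# version_field FILE KEY: print the value of one key from a NameNode VERSION file.
# VERSION is a Java properties file (key=value lines plus '#' comment lines).
version_field() {
  awk -F= -v k="$2" '$1 == k { print $2 }' "$1"
}

# Usage on the host above (path taken from the format log):
#   version_field /home/hadoop/hadoopdata/hdfs/namenode/current/VERSION clusterID
#   version_field /home/hadoop/hadoopdata/hdfs/namenode/current/VERSION blockpoolID
```

The two values should match the `clusterid` and `BlockPoolId` lines printed during formatting.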

Start the Hadoop cluster

[hadoop@ip-172-31-37-47 sbin]$ ./start-dfs.sh
Starting namenodes on [localhost]
Starting datanodes
Starting secondary namenodes [ip-172-31-37-47.ap-east-1.compute.internal]
ip-172-31-37-47.ap-east-1.compute.internal: Warning: Permanently added 'ip-172-31-37-47.ap-east-1.compute.internal,172.31.37.47' (ECDSA) to the list of known hosts.
2020-03-06 03:50:47,021 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[hadoop@ip-172-31-37-47 sbin]$
[hadoop@ip-172-31-37-47 sbin]$ ./start-yarn.sh
Starting resourcemanager
Starting nodemanagers
[hadoop@ip-172-31-37-47 sbin]$
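The start scripts return before the daemons are fully up, so it can help to check which JVMs are actually running. A minimal sketch using the JDK's `jps` tool follows; `missing_daemons` is a hypothetical helper, and the daemon list assumes the single-node layout started above.

```shell
# missing_daemons: read `jps` output on stdin and print every expected
# Hadoop daemon that does not appear in it. Prints nothing when all are up.
missing_daemons() {
  running="$(awk '{ print $2 }')"   # second column of jps output is the main class name
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    printf '%s\n' "$running" | grep -qx "$daemon" || echo "$daemon"
  done
}

# Usage (as the hadoop user):
#   jps | missing_daemons
```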

Check the listening ports

[hadoop@ip-172-31-37-47 ~]$ netstat -lntp
(Not all processes could be identified, non-owned process info
 will not be shown, you would have to be root to see it all.)
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:8040            0.0.0.0:*               LISTEN      15150/java
tcp        0      0 0.0.0.0:9864            0.0.0.0:*               LISTEN      14583/java
tcp        0      0 127.0.0.1:9000          0.0.0.0:*               LISTEN      14436/java
tcp        0      0 0.0.0.0:8042            0.0.0.0:*               LISTEN      15150/java
tcp        0      0 0.0.0.0:9866            0.0.0.0:*               LISTEN      14583/java
tcp        0      0 0.0.0.0:41963           0.0.0.0:*               LISTEN      15150/java
tcp        0      0 0.0.0.0:9867            0.0.0.0:*               LISTEN      14583/java
tcp        0      0 0.0.0.0:9868            0.0.0.0:*               LISTEN      14777/java
tcp        0      0 0.0.0.0:9870            0.0.0.0:*               LISTEN      14436/java
tcp        0      0 0.0.0.0:111             0.0.0.0:*               LISTEN      -
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      -
tcp        0      0 127.0.0.1:35031         0.0.0.0:*               LISTEN      14583/java
tcp        0      0 0.0.0.0:8088            0.0.0.0:*               LISTEN      15026/java
tcp        0      0 127.0.0.1:25            0.0.0.0:*               LISTEN      -
tcp        0      0 0.0.0.0:13562           0.0.0.0:*               LISTEN      15150/java
tcp        0      0 0.0.0.0:8030            0.0.0.0:*               LISTEN      15026/java
tcp        0      0 0.0.0.0:8031            0.0.0.0:*               LISTEN      15026/java
tcp        0      0 0.0.0.0:8032            0.0.0.0:*               LISTEN      15026/java
tcp        0      0 0.0.0.0:8033            0.0.0.0:*               LISTEN      15026/java
tcp6       0      0 :::111                  :::*                    LISTEN      -
tcp6       0      0 :::22                   :::*                    LISTEN      -
tcp6       0      0 ::1:25                  :::*                    LISTEN      -
[hadoop@ip-172-31-37-47 ~]$
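To make the listing easier to read, the ports can be matched against the Hadoop 3.x default port assignments. The sketch below is illustrative only: `label_hadoop_ports` is a hypothetical helper, and it covers only the documented defaults. Port 9000 is left out because it comes from the site configuration; in the output above it is owned by the same PID as 9870, which suggests it is the NameNode RPC port, bound to loopback only.

```shell
# label_hadoop_ports: read `netstat -lnt`-style output on stdin and print
# "port<TAB>role" for each listening port that matches a Hadoop 3.x default.
label_hadoop_ports() {
  awk '
    BEGIN {
      role["9870"]  = "NameNode web UI"
      role["9864"]  = "DataNode web UI"
      role["9866"]  = "DataNode data transfer"
      role["9867"]  = "DataNode IPC"
      role["9868"]  = "SecondaryNameNode web UI"
      role["8088"]  = "ResourceManager web UI"
      role["8030"]  = "ResourceManager scheduler"
      role["8031"]  = "ResourceManager resource tracker"
      role["8032"]  = "ResourceManager client RPC"
      role["8033"]  = "ResourceManager admin"
      role["8040"]  = "NodeManager localizer"
      role["8042"]  = "NodeManager web UI"
      role["13562"] = "MapReduce shuffle"
    }
    $6 == "LISTEN" {
      n = split($4, part, ":")      # the port is the last colon-separated field
      port = part[n]
      if (port in role) printf "%s\t%s\n", port, role[port]
    }
  '
}

# Usage:
#   netstat -lnt | label_hadoop_ports
```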

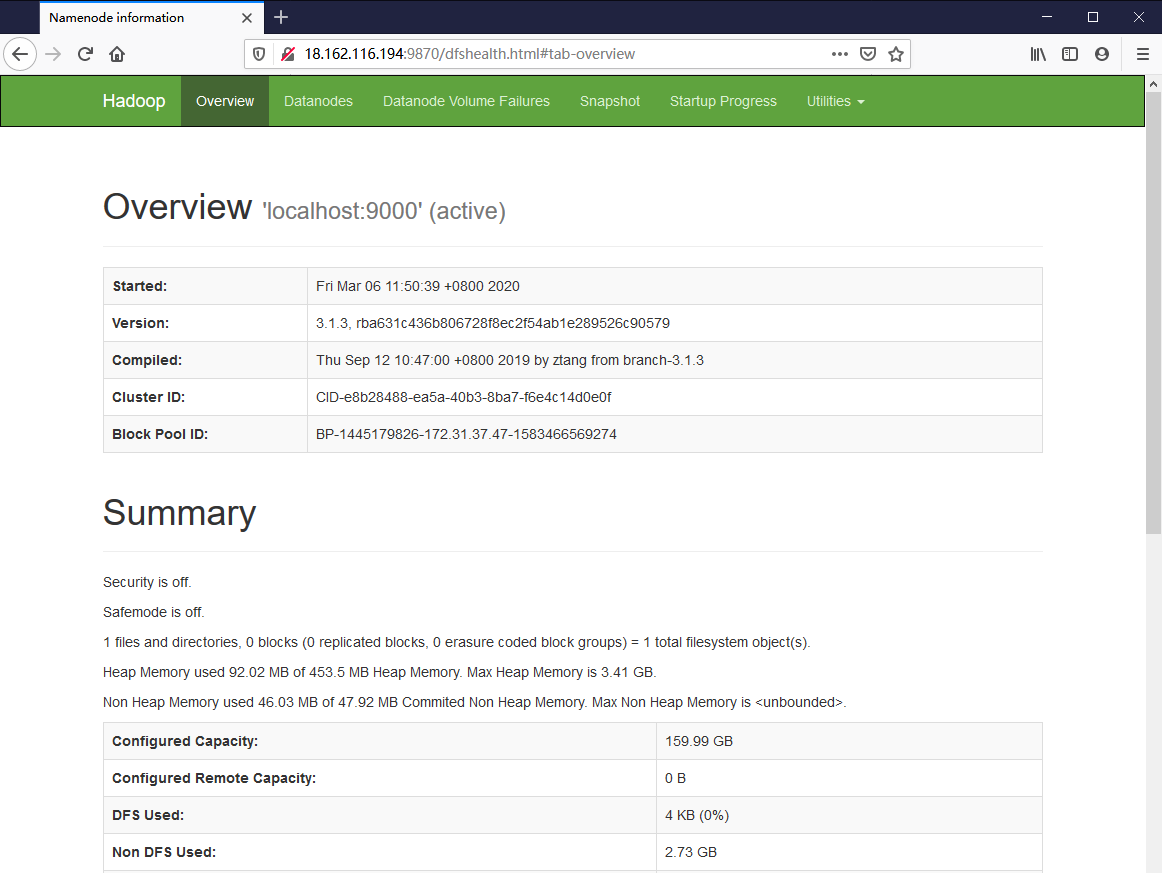

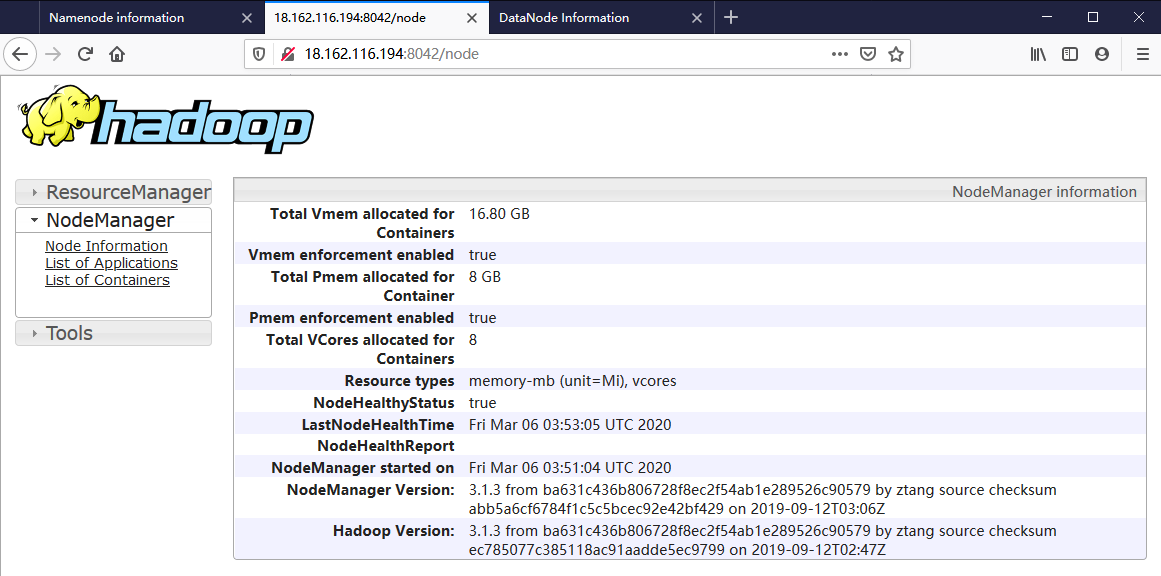

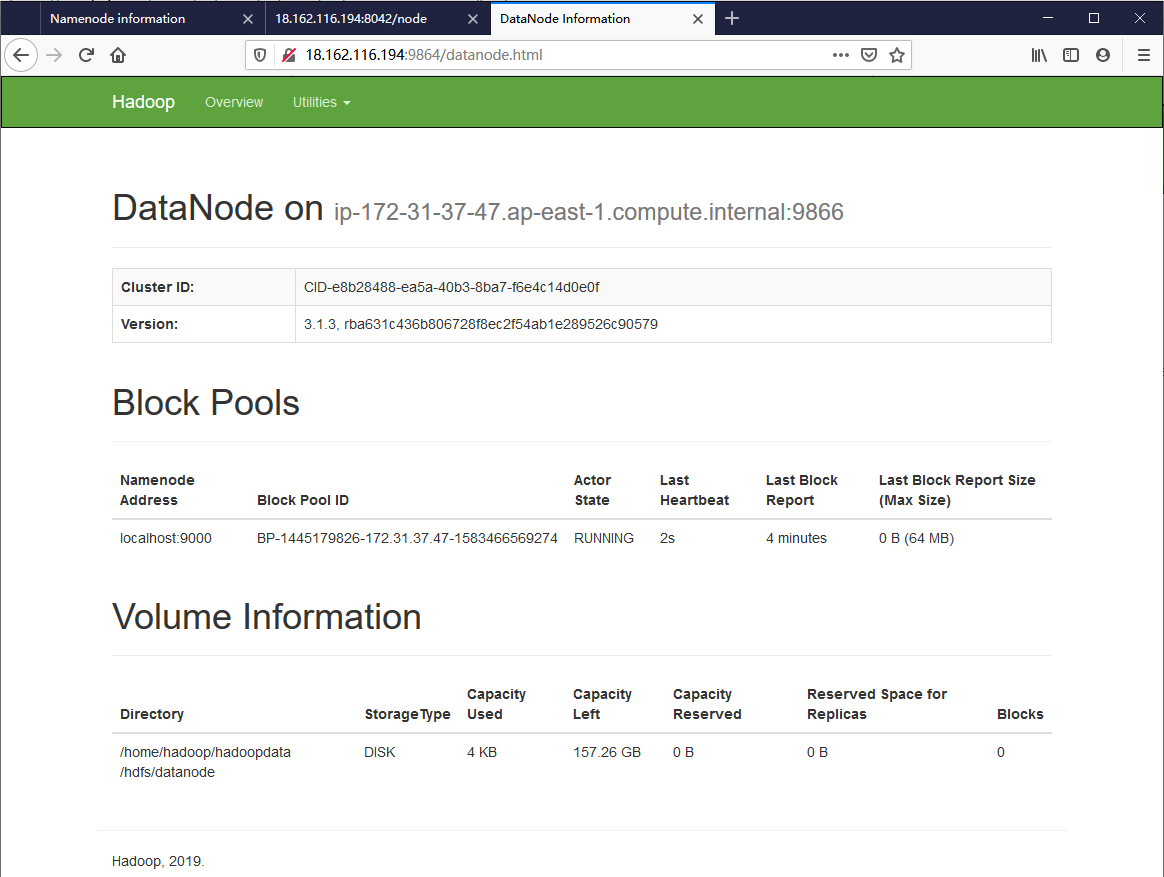

Access the Hadoop web consoles in a browser

View the NameNode status

View the cluster status

View node details
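Before opening a browser, the same consoles can be probed from a shell. This is a sketch under assumptions: the ports are the ones seen listening on 0.0.0.0 in the netstat output (9870, 8088, 8042), `hadoop_ui_urls` is a hypothetical helper, and `HOST` is a placeholder for an address that is reachable from where you run it.

```shell
# hadoop_ui_urls HOST: print the base URLs of the Hadoop web consoles.
hadoop_ui_urls() {
  host="$1"
  echo "http://$host:9870/"   # NameNode status
  echo "http://$host:8088/"   # ResourceManager: cluster status and node list
  echo "http://$host:8042/"   # NodeManager: details for this node
}

# Usage: print the HTTP status code for each console.
#   for url in $(hadoop_ui_urls HOST); do
#     curl -s -o /dev/null -w "%{http_code} $url\n" "$url"
#   done
```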

Generic Routing Encapsulation (GRE)

Host list (public IP/private IP)

18.163.50.194/172.31.44.248
18.162.60.60/172.31.37.49

Check that the ip_gre kernel module is available

[centos@ip-172-31-44-248 ~]$ ls -alRUv /lib/modules/$(uname -r)/kernel |grep ip_gre
-rw-r--r--. 1 root root 9396 Nov 29 2018 ip_gre.ko.xz
[centos@ip-172-31-44-248 ~]$

Load the ip_gre module on both hosts

[root@ip-172-31-44-248 ~]# modprobe ip_gre
[root@ip-172-31-44-248 ~]#

[root@ip-172-31-37-49 ~]# modprobe ip_gre
[root@ip-172-31-37-49 ~]#
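`modprobe` prints nothing on success, so a quick check against `lsmod` confirms the module is loaded. The helper name `is_loaded` below is hypothetical. The note about `/etc/modules-load.d/` assumes a systemd-based host such as CentOS 7.

```shell
# is_loaded MODULE: read `lsmod` output on stdin; exit 0 if MODULE is loaded.
# Only the first column is compared, so modules that merely list MODULE
# as a dependent (for example ip_tunnel) do not count as a match.
is_loaded() {
  awk -v m="$1" '$1 == m { found = 1 } END { exit !found }'
}

# Usage:
#   lsmod | is_loaded ip_gre && echo "ip_gre is loaded"
#
# To load the module at every boot on a systemd host (run as root):
#   echo ip_gre > /etc/modules-load.d/ip_gre.conf
```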

Add the tun0 interface configuration

Local tunnel address: 192.168.192.1; peer tunnel address: 192.168.192.2

[root@ip-172-31-44-248 ~]# vi /etc/sysconfig/network-scripts/ifcfg-tun0
DEVICE=tun0
BOOTPROTO=none
ONBOOT=yes
DEVICETYPE=tunnel
TYPE=GRE
PEER_INNER_IPADDR=192.168.192.2
PEER_OUTER_IPADDR=18.162.60.60
MY_INNER_IPADDR=192.168.192.1

Bring up the tun0 interface

[root@ip-172-31-44-248 ~]# ifup tun0
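For reference, the ifcfg file corresponds roughly to three iproute2 commands. The sketch below only prints them (a dry run), so it is safe to run as any user. `gre_tunnel_cmds` is a hypothetical helper, and the exact options the network scripts pass may differ. Because the ifcfg file sets no local outer address, none is given here either.

```shell
# gre_tunnel_cmds DEV PEER_OUTER MY_INNER PEER_INNER
# Print the iproute2 commands that build a point-to-point GRE tunnel.
gre_tunnel_cmds() {
  dev="$1"; peer_outer="$2"; my_inner="$3"; peer_inner="$4"
  echo "ip tunnel add $dev mode gre remote $peer_outer"
  echo "ip addr add $my_inner peer $peer_inner dev $dev"
  echo "ip link set dev $dev up"
}

# Usage, with the values from ifcfg-tun0 on 172.31.44.248:
#   gre_tunnel_cmds tun0 18.162.60.60 192.168.192.1 192.168.192.2
# Review the output, then pipe it to `sh` as root to apply it.
```

A tunnel created this way does not survive a reboot. The ifcfg file with `ONBOOT=yes` is what makes the setup persistent.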

View interface information

[root@ip-172-31-44-248 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP group default qlen 1000
    link/ether 0e:84:f5:b0:db:f6 brd ff:ff:ff:ff:ff:ff
    inet 172.31.44.248/20 brd 172.31.47.255 scope global dynamic ens5
       valid_lft 2667sec preferred_lft 2667sec
    inet6 fe80::c84:f5ff:feb0:dbf6/64 scope link
       valid_lft forever preferred_lft forever
3: gre0@NONE: <NOARP> mtu 1476 qdisc noop state DOWN group default qlen 1000
    link/gre 0.0.0.0 brd 0.0.0.0
4: gretap0@NONE: <BROADCAST,MULTICAST> mtu 1462 qdisc noop state DOWN group default qlen 1000
    link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff
5: tun0@NONE: <POINTOPOINT,NOARP,UP,LOWER_UP> mtu 8977 qdisc noqueue state UNKNOWN group default qlen 1000
    link/gre 0.0.0.0 peer 18.162.60.60
    inet 192.168.192.1 peer 192.168.192.2/32 scope global tun0
       valid_lft forever preferred_lft forever
[root@ip-172-31-44-248 ~]#
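The tunnel MTUs in this output follow from the GRE overhead: a 20-byte outer IPv4 header plus a 4-byte basic GRE header (no key, checksum or sequence number). A small worked calculation follows; `gre_mtu` is a hypothetical helper name.

```shell
# gre_mtu UNDERLAY_MTU: MTU left for the inner packet on a plain GRE tunnel.
gre_mtu() {
  echo $(( $1 - 20 - 4 ))   # minus outer IPv4 header (20) and basic GRE header (4)
}

gre_mtu 9001   # ens5 MTU in the output above -> prints 8977, the tun0 MTU
gre_mtu 1500   # standard Ethernet MTU        -> prints 1476, the gre0 default
```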

Add the tun0 interface configuration on the second host

Local tunnel address: 192.168.192.2; peer tunnel address: 192.168.192.1

[root@ip-172-31-37-49 ~]# vi /etc/sysconfig/network-scripts/ifcfg-tun0
DEVICE=tun0
BOOTPROTO=none
ONBOOT=yes
DEVICETYPE=tunnel
TYPE=GRE
PEER_INNER_IPADDR=192.168.192.1
PEER_OUTER_IPADDR=18.163.50.194
MY_INNER_IPADDR=192.168.192.2

Bring up the tun0 interface

[root@ip-172-31-37-49 ~]# ifup tun0

View interface information

[root@ip-172-31-37-49 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP group default qlen 1000
    link/ether 0e:4a:2b:48:b8:aa brd ff:ff:ff:ff:ff:ff
    inet 172.31.37.49/20 brd 172.31.47.255 scope global dynamic ens5
       valid_lft 2692sec preferred_lft 2692sec
    inet6 fe80::c4a:2bff:fe48:b8aa/64 scope link
       valid_lft forever preferred_lft forever
3: gre0@NONE: <NOARP> mtu 1476 qdisc noop state DOWN group default qlen 1000
    link/gre 0.0.0.0 brd 0.0.0.0
4: gretap0@NONE: <BROADCAST,MULTICAST> mtu 1462 qdisc noop state DOWN group default qlen 1000
    link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff
5: tun0@NONE: <POINTOPOINT,NOARP,UP,LOWER_UP> mtu 8977 qdisc noqueue state UNKNOWN group default qlen 1000
    link/gre 0.0.0.0 peer 18.163.50.194
    inet 192.168.192.2 peer 192.168.192.1/32 scope global tun0
       valid_lft forever preferred_lft forever
[root@ip-172-31-37-49 ~]#

Ping the peer tunnel address from each host

[root@ip-172-31-37-49 ~]# ping -c 4 192.168.192.1
PING 192.168.192.1 (192.168.192.1) 56(84) bytes of data.
64 bytes from 192.168.192.1: icmp_seq=1 ttl=64 time=0.297 ms
64 bytes from 192.168.192.1: icmp_seq=2 ttl=64 time=0.283 ms
64 bytes from 192.168.192.1: icmp_seq=3 ttl=64 time=0.237 ms
64 bytes from 192.168.192.1: icmp_seq=4 ttl=64 time=0.268 ms

--- 192.168.192.1 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3000ms
rtt min/avg/max/mdev = 0.237/0.271/0.297/0.025 ms
[root@ip-172-31-37-49 ~]#

[root@ip-172-31-44-248 ~]# ping -c 4 192.168.192.2
PING 192.168.192.2 (192.168.192.2) 56(84) bytes of data.
64 bytes from 192.168.192.2: icmp_seq=1 ttl=64 time=0.249 ms
64 bytes from 192.168.192.2: icmp_seq=2 ttl=64 time=0.279 ms
64 bytes from 192.168.192.2: icmp_seq=3 ttl=64 time=0.196 ms
64 bytes from 192.168.192.2: icmp_seq=4 ttl=64 time=0.214 ms

--- 192.168.192.2 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 2999ms
rtt min/avg/max/mdev = 0.196/0.234/0.279/0.035 ms
[root@ip-172-31-44-248 ~]#
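To turn this check into something a script can act on, the loss percentage can be pulled out of the ping summary line. This sketch assumes the iputils summary format shown above; `packet_loss` is a hypothetical helper name.

```shell
# packet_loss: read ping output on stdin and print the loss percentage
# taken from the "N% packet loss" summary line.
packet_loss() {
  sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}

# Usage:
#   loss="$(ping -c 4 192.168.192.1 | packet_loss)"
#   [ "$loss" = "0" ] && echo "tunnel OK" || echo "tunnel degraded: ${loss}% loss"
```

To confirm that the traffic really travels encapsulated, GRE is IP protocol 47, so running `tcpdump -ni ens5 ip proto 47` as root on either host while the ping runs should show the outer packets.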