PS C:\Users\harveymei> robocopy /?

-------------------------------------------------------------------------------

ROBOCOPY :: Robust File Copy for Windows

-------------------------------------------------------------------------------

Started : December 4, 2023 16:55:28

Usage :: ROBOCOPY source destination [file [file]...] [options]

source :: Source Directory (drive:\path or \\server\share\path).

destination :: Destination Directory (drive:\path or \\server\share\path).

file :: File(s) to copy (names/wildcards: default is "*.*").

::

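:: Copy options :

::

/S :: copy subdirectories, but not empty ones.

/E :: copy subdirectories, including empty ones.

/LEV:n :: only copy the top n levels of the source directory tree.

/Z :: copy files in restartable mode.

/B :: copy files in backup mode.

/ZB :: use restartable mode; if access is denied, use backup mode.

/J :: copy using unbuffered I/O (recommended for large files).

/EFSRAW :: copy all encrypted files in EFS RAW mode.

/COPY:copyflag[s] :: what to copy for files (default is /COPY:DAT).

(copyflags: D=Data, A=Attributes, T=Timestamps, X=Skip alt data streams (X is ignored if /B or /ZB is used)).

(S=Security=NTFS ACLs, O=Owner info, U=Auditing info).

/SEC :: copy files with security (equivalent to /COPY:DATS).

/COPYALL :: copy all file info (equivalent to /COPY:DATSOU).

/NOCOPY :: copy no file info (useful with /PURGE).

/SECFIX :: fix file security on all files, even skipped files.

/TIMFIX :: fix file times on all files, even skipped files.

/PURGE :: delete destination files/directories that no longer exist in the source.

/MIR :: mirror a directory tree (equivalent to /E plus /PURGE).

/MOV :: move files (delete from source after copying).

/MOVE :: move files and directories (delete from source after copying).

/A+:[RASHCNET] :: add the given attributes to copied files.

/A-:[RASHCNETO] :: remove the given attributes from copied files.

/CREATE :: create the directory tree and zero-length files only.

/FAT :: create destination files using 8.3 FAT file names only.

/256 :: turn off very long path (> 256 characters) support.

/MON:n :: monitor the source; run again when more than n changes are seen.

/MOT:m :: monitor the source; run again in m minutes if it has changed.

/RH:hhmm-hhmm :: run hours - times when new copies may be started.

/PF :: check run hours on a per-file (not per-pass) basis.

/IPG:n :: inter-packet gap (ms), to free bandwidth on slow lines.

/SJ :: copy junctions as junctions instead of as the junction targets.

/SL :: copy symbolic links as links instead of as the link targets.

/MT[:n] :: do multi-threaded copies with n threads (default 8).

n must be at least 1 and not greater than 128.

This option is incompatible with the /IPG and /EFSRAW options.

Redirect output using the /LOG option for better performance.

/DCOPY:copyflag[s] :: what to copy for directories (default is /DCOPY:DA).

(copyflags: D=Data, A=Attributes, T=Timestamps, E=EAs, X=Skip alt data streams).

/NODCOPY :: copy no directory info (by default /DCOPY:DA is done).

/NOOFFLOAD :: copy files without using the Windows Copy Offload mechanism.

/COMPRESS :: request network compression during file transfer, if applicable.

/SPARSE :: enable retaining sparse state during copy.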

::

:: Copy file throttling options :

::

/IoMaxSize:n[KMG] :: requested maximum I/O size per {read,write} cycle, in n [KMG] bytes.

/IoRate:n[KMG] :: requested I/O rate, in n [KMG] bytes per second.

/Threshold:n[KMG] :: file size threshold for throttling, in n [KMG] bytes (see Remarks).

::

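:: File selection options :

::

/A :: copy only files with the Archive attribute set.

/M :: copy only files with the Archive attribute and reset it.

/IA:[RASHCNETO] :: include only files with any of the given attributes set.

/XA:[RASHCNETO] :: exclude files with any of the given attributes set.

/XF file [file]... :: exclude files matching the given names/paths/wildcards.

/XD dir [dir]... :: exclude directories matching the given names/paths.

/XC :: exclude changed files.

/XN :: exclude newer files.

/XO :: exclude older files.

/XX :: exclude extra files and directories.

/XL :: exclude lonely files and directories.

/IS :: include same files.

/IT :: include tweaked files.

/MAX:n :: maximum file size - exclude files bigger than n bytes.

/MIN:n :: minimum file size - exclude files smaller than n bytes.

/MAXAGE:n :: maximum file age - exclude files older than n days/date.

/MINAGE:n :: minimum file age - exclude files newer than n days/date.

/MAXLAD:n :: maximum last access date - exclude files unused since n.

/MINLAD:n :: minimum last access date - exclude files used since n.

(If n < 1900 then n = n days, else n = YYYYMMDD date).

/FFT :: assume FAT file times (2-second granularity).

/DST :: compensate for one-hour DST time differences.

/XJ :: exclude symbolic links (for both files and directories) and junction points.

/XJD :: exclude symbolic links for directories and junction points.

/XJF :: exclude symbolic links for files.

/IM :: include modified files (differing change times).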

::

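:: Retry options :

::

/R:n :: number of retries on failed copies: default is 1 million.

/W:n :: wait time between retries: default is 30 seconds.

/REG :: save /R:n and /W:n in the registry as default settings.

/TBD :: wait for share names to be defined (retry error 67).

/LFSM :: operate in low free space mode, enabling copy pause and resume (see Remarks).

/LFSM:n[KMG] :: /LFSM, specifying the floor size in n [K:kilo, M:mega, G:giga] bytes.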

::

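:: Logging options :

::

/L :: list only - don't copy, timestamp or delete any files.

/X :: report all extra files, not just those selected.

/V :: produce verbose output, showing skipped files.

/TS :: include source file timestamps in the output.

/FP :: include the full pathname of files in the output.

/BYTES :: print sizes as bytes.

/NS :: no size - don't log file sizes.

/NC :: no class - don't log file classes.

/NFL :: no file list - don't log file names.

/NDL :: no directory list - don't log directory names.

/NP :: no progress - don't display percentage copied.

/ETA :: show the estimated time of arrival of copied files.

/LOG:file :: output status to a log file (overwrite existing log).

/LOG+:file :: output status to a log file (append to existing log).

/UNILOG:file :: output status to a log file as UNICODE (overwrite existing log).

/UNILOG+:file :: output status to a log file as UNICODE (append to existing log).

/TEE :: output to the console window as well as the log file.

/NJH :: no job header.

/NJS :: no job summary.

/UNICODE :: output status as UNICODE.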

::

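:: Job options :

::

/JOB:jobname :: take parameters from the named job file.

/SAVE:jobname :: save parameters to the named job file.

/QUIT :: quit after processing the command line (to view parameters).

/NOSD :: no source directory is specified.

/NODD :: no destination directory is specified.

/IF :: include the following files.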

::

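:: Remarks :

::

Using /PURGE or /MIR on the root directory of a volume formerly caused robocopy to apply the requested operation to files inside the System Volume Information directory as well. This is no longer the case; if either option is specified, robocopy skips any files or directories with that name in the top-level source and destination directories of the copy session.

The modified files classification applies only when both the source and destination file systems support change timestamps (for example, NTFS) and the source and destination files have different change times but are otherwise the same. These files are not copied by default; specify /IM to include them.

The /DCOPY:E flag requests that extended attribute copying be attempted for directories. Note that robocopy currently continues if a directory's EAs cannot be copied. This flag is also not included in /COPYALL.

If either /IoMaxSize or /IoRate is specified, robocopy enables copy file throttling (the purpose being to reduce system load). Both may be adjusted to allowable or optimal values; that is, both specify desired copy parameters, but the system and robocopy are allowed to adjust them to reasonable/allowed values as necessary. If /Threshold is also used, it specifies a minimum file size for throttling to engage; files below that size are not throttled. Values for all three parameters may be followed by an optional suffix character from the set [KMG] (K, M, G).

Using /LFSM requests that robocopy operate in "low free space mode". In that mode, robocopy pauses whenever a file copy would cause the destination volume's free space to drop below a "floor" value, which can be specified explicitly with the /LFSM:n[KMG] form of the flag. If /LFSM is specified with no explicit floor value, the floor is set to ten percent of the destination volume's size. Low free space mode is incompatible with /MT and /EFSRAW.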

PS C:\Users\harveymei>
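
The age options in the help text above (/MAXAGE, /MINAGE, /MAXLAD, /MINLAD) share one rule: a value below 1900 is a number of days, anything else is a YYYYMMDD date. The sketch below only illustrates that rule; the function name `resolve_age_argument` is hypothetical, and how robocopy handles the time of day at the cutoff is not covered by the help text, so it is ignored here.

```python
from datetime import date, timedelta


def resolve_age_argument(n: int, today: date) -> date:
    """Return the cutoff date that an age value n stands for.

    Rule from the robocopy help text:
    if n < 1900 then n = n days, else n = YYYYMMDD date.
    """
    if n < 1900:
        # Small values are a day count measured back from today.
        return today - timedelta(days=n)
    # Larger values are read as a calendar date written as YYYYMMDD.
    return date(n // 10000, n // 100 % 100, n % 100)


today = date(2023, 12, 4)  # date of the help output above
print(resolve_age_argument(30, today))        # 30 days before today
print(resolve_age_argument(20231101, today))  # explicit date
```

With /MAXAGE:30 the cutoff moves every day, while /MAXAGE:20231101 stays fixed, which matters for jobs that are saved with /SAVE and rerun later.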

Backing up and restoring shared folder settings (registry)

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\LanmanServer\Shares
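
One possible way to back up and restore this key is the `reg export` and `reg import` commands. The sketch below only builds the argument lists so they can be reviewed before running; the helper names and the backup file name `shares.reg` are assumptions for illustration, not part of the original notes.

```python
# Registry key that holds the share definitions (from the note above,
# written with the HKLM abbreviation that reg.exe accepts).
SHARES_KEY = r"HKLM\SYSTEM\CurrentControlSet\Services\LanmanServer\Shares"


def export_shares_command(backup_file: str) -> list[str]:
    """Build the reg.exe call that saves the Shares key to a .reg file."""
    # /y overwrites an existing backup file without prompting.
    return ["reg", "export", SHARES_KEY, backup_file, "/y"]


def import_shares_command(backup_file: str) -> list[str]:
    """Build the reg.exe call that loads a previously exported .reg file."""
    return ["reg", "import", backup_file]


# "shares.reg" is a hypothetical file name.
print(" ".join(export_shares_command("shares.reg")))
print(" ".join(import_shares_command("shares.reg")))
```

On Windows the lists can be passed to `subprocess.run(..., check=True)` from an elevated session.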

Backing up and restoring access control lists (permissions)

PS C:\Users\harveymei> icacls

ICACLS name /save aclfile [/T] [/C] [/L] [/Q]

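Stores the DACLs of the files and folders that match name into aclfile for later use with /restore. Note that SACLs, owner, and integrity labels are not saved.

ICACLS directory [/substitute SidOld SidNew [...]] /restore aclfile

[/C] [/L] [/Q]

Applies the stored DACLs to the files in directory.

ICACLS name /setowner user [/T] [/C] [/L] [/Q]

Changes the owner of all matching names. This option does not force a change of ownership; use the takeown.exe utility for that purpose.

ICACLS name /findsid Sid [/T] [/C] [/L] [/Q]

Finds all matching names that contain an ACL explicitly mentioning Sid.

ICACLS name /verify [/T] [/C] [/L] [/Q]

Finds all files whose ACL is not in canonical form or whose length is inconsistent with the ACE count.

ICACLS name /reset [/T] [/C] [/L] [/Q]

Replaces ACLs with default inherited ACLs for all matching files.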

ICACLS name [/grant[:r] Sid:perm[...]]

[/deny Sid:perm [...]]

[/remove[:g|:d]] Sid[...]] [/T] [/C] [/L] [/Q]

[/setintegritylevel Level:policy[...]]

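/grant[:r] Sid:perm grants the specified user access rights. With :r, the permissions replace any previously granted explicit permissions. Without :r, the permissions are added to any previously granted explicit permissions.

/deny Sid:perm explicitly denies the specified user access rights. An explicit deny ACE is added for the stated permissions, and the same permissions in any explicit grant are removed.

/remove[:[g|d]] Sid removes all occurrences of Sid in the ACL. With :g, it removes all occurrences of rights granted to that Sid. With :d, it removes all occurrences of rights denied to that Sid.

/setintegritylevel [(CI)(OI)]Level explicitly adds an integrity ACE to all matching files. The level is specified as one of:

L[ow]

M[edium]

H[igh]

Inheritance options for the integrity ACE may precede the level and are applied only to directories.

/inheritance:e|d|r

e - enables inheritance

d - disables inheritance and copies the ACEs

r - removes all inherited ACEs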

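Note:

Sids may be in either numerical or friendly name form. If the numerical form is given, prefix the SID with a *.

/T indicates that the operation is performed on all matching files/directories below the directories specified in name.

/C indicates that the operation continues on all file errors. Error messages are still displayed.

/L indicates that the operation is performed on a symbolic link itself rather than on its target.

/Q indicates that icacls should suppress success messages.

ICACLS preserves the canonical ordering of ACE entries:

Explicit denials

Explicit grants

Inherited denials

Inherited grants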

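perm is a permission mask and can be specified in one of two forms:

a sequence of simple rights:

N - no access

F - full access

M - modify access

RX - read and execute access

R - read-only access

W - write-only access

D - delete access

a comma-separated list of specific rights in parentheses:

DE - delete

RC - read control

WDAC - write DAC

WO - write owner

S - synchronize

AS - access system security

MA - maximum allowed

GR - generic read

GW - generic write

GE - generic execute

GA - generic all

RD - read data/list directory

WD - write data/add file

AD - append data/add subdirectory

REA - read extended attributes

WEA - write extended attributes

X - execute/traverse

DC - delete child

RA - read attributes

WA - write attributes

Inheritance rights may precede either form and are applied only to directories:

(OI) - object inherit

(CI) - container inherit

(IO) - inherit only

(NP) - don't propagate inherit

(I) - permission inherited from parent container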

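Examples:

icacls c:\windows\* /save AclFile /T

- Saves the ACLs of all files under c:\windows and its subdirectories to AclFile.

icacls c:\windows\ /restore AclFile

- Restores the ACLs of every file in AclFile that exists in c:\windows and its subdirectories.

icacls file /grant Administrator:(D,WDAC)

- Grants the user Administrator Delete and Write DAC permissions to file.

icacls file /grant *S-1-1-0:(D,WDAC)

- Grants the user defined by sid S-1-1-0 Delete and Write DAC permissions to file.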

PS C:\Users\harveymei>
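
When reviewing a saved ACL or a /grant argument, it can help to expand the abbreviations in a perm mask. The sketch below is a small lookup built only from the tables in the help text above; the function name `describe_perm` is hypothetical, and it is an illustration rather than a full parser for everything icacls accepts.

```python
import re

# Abbreviations copied from the icacls help text above.
RIGHTS = {
    "N": "no access", "F": "full access", "M": "modify access",
    "RX": "read and execute access", "R": "read-only access",
    "W": "write-only access", "D": "delete access",
    "DE": "delete", "RC": "read control", "WDAC": "write DAC",
    "WO": "write owner", "S": "synchronize", "AS": "access system security",
    "MA": "maximum allowed", "GR": "generic read", "GW": "generic write",
    "GE": "generic execute", "GA": "generic all",
    "RD": "read data/list directory", "WD": "write data/add file",
    "AD": "append data/add subdirectory", "REA": "read extended attributes",
    "WEA": "write extended attributes", "X": "execute/traverse",
    "DC": "delete child", "RA": "read attributes", "WA": "write attributes",
}
INHERITANCE = {
    "OI": "object inherit", "CI": "container inherit", "IO": "inherit only",
    "NP": "don't propagate inherit", "I": "inherited from parent container",
}


def describe_perm(perm: str) -> tuple[list[str], list[str]]:
    """Split a perm mask into (inheritance flags, rights) as readable text."""
    inheritance, rights = [], []
    for group in re.findall(r"\(([^)]*)\)", perm):
        if group in INHERITANCE:
            inheritance.append(INHERITANCE[group])
        else:
            # A parenthesized, comma-separated list of rights.
            rights.extend(RIGHTS[code.strip()] for code in group.split(","))
    # Anything outside parentheses is a bare simple right such as F or RX.
    bare = re.sub(r"\([^)]*\)", "", perm).strip()
    if bare:
        rights.append(RIGHTS[bare])
    return inheritance, rights


print(describe_perm("(D,WDAC)"))
print(describe_perm("(OI)(CI)F"))
```

The first call uses the mask from the examples in the help text; the second is a commonly seen form that combines inheritance flags with a simple right.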

References:

https://learn.microsoft.com/zh-cn/windows-server/administration/windows-commands/icacls

https://learn.microsoft.com/en-us/windows/win32/secauthz/dacls-and-aces

https://learn.microsoft.com/zh-cn/troubleshoot/windows-client/networking/saving-restoring-existing-windows-shares

https://learn.microsoft.com/en-us/windows-server/identity/ad-ds/manage/understand-security-identifiers

List the currently enabled repositories and disable them

[ops@localhost ~]$ sudo dnf repolist

[sudo] password for ops:

repo id repo name

appstream Rocky Linux 9 - AppStream

baseos Rocky Linux 9 - BaseOS

extras Rocky Linux 9 - Extras

[ops@localhost ~]$ sudo dnf config-manager --disable appstream

[ops@localhost ~]$ sudo dnf config-manager --disable baseos

[ops@localhost ~]$ sudo dnf config-manager --disable extras

[ops@localhost ~]$

Confirm that no repository is still enabled

[ops@localhost ~]$ sudo dnf makecache

There are no enabled repositories in "/etc/yum.repos.d", "/etc/yum/repos.d", "/etc/distro.repos.d".

[ops@localhost ~]$
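
The disable step can be derived from the `dnf repolist` output instead of typing each repository id by hand. The sketch below parses output in the shape shown above and builds one `dnf config-manager --disable` command per repository; the function name `enabled_repo_ids` is hypothetical, and the parser assumes the two-column "repo id / repo name" layout.

```python
def enabled_repo_ids(repolist_output: str) -> list[str]:
    """Return the repo ids listed after the 'repo id' header line."""
    ids = []
    seen_header = False
    for line in repolist_output.splitlines():
        if line.startswith("repo id"):
            seen_header = True
            continue
        if seen_header and line.strip():
            # The id is the first whitespace-separated column.
            ids.append(line.split()[0])
    return ids


# Sample text copied from the transcript above.
sample = """repo id      repo name
appstream    Rocky Linux 9 - AppStream
baseos       Rocky Linux 9 - BaseOS
extras       Rocky Linux 9 - Extras
"""

commands = [
    ["sudo", "dnf", "config-manager", "--disable", repo_id]
    for repo_id in enabled_repo_ids(sample)
]
for command in commands:
    print(" ".join(command))
```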

Error message

Guest agent is not responding: QEMU guest agent is not connected

CODE_FILE

../src/qemu/qemu_domain.c

CODE_FUNC

qemuDomainAgentAvailable

CODE_LINE

8526

LIBVIRT_CODE

86

LIBVIRT_DOMAIN

10

LIBVIRT_SOURCE

util.error

PRIORITY

3

SYSLOG_FACILITY

3

_BOOT_ID

e8d41ada1ec94052900e15bd6cabd727

_CAP_EFFECTIVE

1ffffffffff

_CMDLINE

/usr/sbin/virtqemud --timeout 120

_COMM

virtqemud

_EXE

/usr/sbin/virtqemud

_GID

0

_HOSTNAME

localhost.localdomain

_MACHINE_ID

760e98b720374a9087311a1aea584dc6

_PID

4865

_RUNTIME_SCOPE

system

_SELINUX_CONTEXT

kernel

_SOURCE_REALTIME_TIMESTAMP

1700126716143085

_SYSTEMD_CGROUP

/system.slice/virtqemud.service

_SYSTEMD_INVOCATION_ID

d8c1cb44eba54d2788eb6492ef418a46

_SYSTEMD_SLICE

system.slice

_SYSTEMD_UNIT

virtqemud.service

_TRANSPORT

journal

_UID

0

__CURSOR

s=3e22457506824768ad4f57aee3165252;i=ed1;b=e8d41ada1ec94052900e15bd6cabd727;m=13f550484;t=60a4198fd2603;x=5ee0a5b5940edc90

__MONOTONIC_TIMESTAMP

5357503620

__REALTIME_TIMESTAMP

1700126716143107
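
The `_SOURCE_REALTIME_TIMESTAMP` and `__REALTIME_TIMESTAMP` fields are microseconds since the Unix epoch, so they are hard to read at a glance. The sketch below converts the value from the entry above into a readable time; the function name is hypothetical, and the UTC+8 offset is an assumption based on the HKT timestamps that appear elsewhere in these notes.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def journal_timestamp_to_utc(microseconds: int) -> datetime:
    """Convert a journal realtime timestamp (microseconds) to a UTC datetime."""
    # Integer timedelta arithmetic avoids float rounding of the microseconds.
    return EPOCH + timedelta(microseconds=microseconds)


event_time = journal_timestamp_to_utc(1700126716143085)
print(event_time.isoformat())

# Assumed local zone: HKT (UTC+8), as shown in the dnf and systemctl output.
hkt = timezone(timedelta(hours=8))
print(event_time.astimezone(hkt).isoformat())
```

Converted this way, the error falls at 17:25 HKT on 16 November 2023, shortly before the guest agent was installed in the steps that follow.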

Install the guest agent in the virtual machine

[ops@localhost ~]$ sudo dnf info qemu-guest-agent

Last metadata expiration check: 0:00:15 ago on Thu 16 Nov 2023 05:34:49 PM HKT.

Available Packages

Name : qemu-guest-agent

Epoch : 17

Version : 7.2.0

Release : 14.el9_2.5

Architecture : x86_64

Size : 446 k

Source : qemu-kvm-7.2.0-14.el9_2.5.src.rpm

Repository : appstream

Summary : QEMU guest agent

URL : http://www.qemu.org/

License : GPLv2 and GPLv2+ and CC-BY

Description : qemu-kvm is an open source virtualizer that provides hardware emulation for

: the KVM hypervisor.

:

: This package provides an agent to run inside guests, which communicates

: with the host over a virtio-serial channel named "org.qemu.guest_agent.0"

:

: This package does not need to be installed on the host OS.

[ops@localhost ~]$

[ops@localhost ~]$ sudo dnf install qemu-guest-agent

Last metadata expiration check: 0:02:19 ago on Thu 16 Nov 2023 05:34:49 PM HKT.

Dependencies resolved.

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

qemu-guest-agent x86_64 17:7.2.0-14.el9_2.5 appstream 446 k

Transaction Summary

================================================================================

Install 1 Package

Total download size: 446 k

Installed size: 1.8 M

Is this ok [y/N]:

Enable and start the service

[ops@localhost ~]$ sudo systemctl enable qemu-guest-agent

Unit /usr/lib/systemd/system/qemu-guest-agent.service is added as a dependency to a non-existent unit dev-virtio\x2dports-org.qemu.guest_agent.0.device.

[ops@localhost ~]$ sudo systemctl start qemu-guest-agent

[ops@localhost ~]$ sudo systemctl status qemu-guest-agent

● qemu-guest-agent.service - QEMU Guest Agent

Loaded: loaded (/usr/lib/systemd/system/qemu-guest-agent.service; enabled;>

Active: active (running) since Thu 2023-11-16 17:41:24 HKT; 10s ago

Main PID: 14117 (qemu-ga)

Tasks: 2 (limit: 7887)

Memory: 2.4M

CPU: 9ms

CGroup: /system.slice/qemu-guest-agent.service

└─14117 /usr/bin/qemu-ga --method=virtio-serial --path=/dev/virtio>

Nov 16 17:41:24 localhost.localdomain systemd[1]: Started QEMU Guest Agent.

[ops@localhost ~]$

Verify availability from the host

[ops@localhost ~]$ sudo virsh qemu-agent-command 1stvm '{"execute":"guest-info"}'

[sudo] password for ops:

{"return":{"version":"7.2.0","supported_commands":[{"enabled":true,"name":"guest-get-cpustats","success-response":true},{"enabled":true,"name":"guest-get-diskstats","success-response":true},{"enabled":true,"name":"guest-ssh-remove-authorized-keys","success-response":true},{"enabled":true,"name":"guest-ssh-add-authorized-keys","success-response":true},{"enabled":true,"name":"guest-ssh-get-authorized-keys","success-response":true},{"enabled":false,"name":"guest-get-devices","success-response":true},{"enabled":true,"name":"guest-get-osinfo","success-response":true},{"enabled":true,"name":"guest-get-timezone","success-response":true},{"enabled":true,"name":"guest-get-users","success-response":true},{"enabled":true,"name":"guest-get-host-name","success-response":true},{"enabled":false,"name":"guest-exec","success-response":true},{"enabled":false,"name":"guest-exec-status","success-response":true},{"enabled":true,"name":"guest-get-memory-block-info","success-response":true},{"enabled":true,"name":"guest-set-memory-blocks","success-response":true},{"enabled":true,"name":"guest-get-memory-blocks","success-response":true},{"enabled":true,"name":"guest-set-user-password","success-response":true},{"enabled":true,"name":"guest-get-fsinfo","success-response":true},{"enabled":true,"name":"guest-get-disks","success-response":true},{"enabled":true,"name":"guest-set-vcpus","success-response":true},{"enabled":true,"name":"guest-get-vcpus","success-response":true},{"enabled":true,"name":"guest-network-get-interfaces","success-response":true},{"enabled":true,"name":"guest-suspend-hybrid","success-response":false},{"enabled":true,"name":"guest-suspend-ram","success-response":false},{"enabled":true,"name":"guest-suspend-disk","success-response":false},{"enabled":true,"name":"guest-fstrim","success-response":true},{"enabled":true,"name":"guest-fsfreeze-thaw","success-response":true},{"enabled":true,"name":"guest-fsfreeze-freeze-list","success-response":true},{"enabled":true,"name":"guest-fsfreeze-freeze","success-response":true},{"enabled":true,"name":"guest-fsfreeze-status","success-response":true},{"enabled":false,"name":"guest-file-flush","success-response":true},{"enabled":false,"name":"guest-file-seek","success-response":true},{"enabled":false,"name":"guest-file-write","success-response":true},{"enabled":false,"name":"guest-file-read","success-response":true},{"enabled":false,"name":"guest-file-close","success-response":true},{"enabled":false,"name":"guest-file-open","success-response":true},{"enabled":true,"name":"guest-shutdown","success-response":false},{"enabled":true,"name":"guest-info","success-response":true},{"enabled":true,"name":"guest-set-time","success-response":true},{"enabled":true,"name":"guest-get-time","success-response":true},{"enabled":true,"name":"guest-ping","success-response":true},{"enabled":true,"name":"guest-sync","success-response":true},{"enabled":true,"name":"guest-sync-delimited","success-response":true}]}}

[ops@localhost ~]$
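
The guest-info reply is a single long JSON line, so a few lines of parsing make it easier to see which agent commands are enabled. The sketch below works on a shortened sample with three entries copied from the reply above; the function name `split_commands` is hypothetical.

```python
import json


def split_commands(response_text: str) -> tuple[str, list[str], list[str]]:
    """Return (agent version, enabled command names, disabled command names)."""
    reply = json.loads(response_text)["return"]
    commands = reply["supported_commands"]
    enabled = sorted(c["name"] for c in commands if c["enabled"])
    disabled = sorted(c["name"] for c in commands if not c["enabled"])
    return reply["version"], enabled, disabled


# Shortened sample: three entries taken from the full reply above.
sample_reply = (
    '{"return":{"version":"7.2.0","supported_commands":['
    '{"enabled":true,"name":"guest-ping","success-response":true},'
    '{"enabled":false,"name":"guest-exec","success-response":true},'
    '{"enabled":true,"name":"guest-info","success-response":true}]}}'
)

version, enabled, disabled = split_commands(sample_reply)
print(version, enabled, disabled)
```

Run against the full reply, the disabled list contains guest-get-devices, guest-exec, guest-exec-status and the six guest-file-* commands.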

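When your boss asks how the project is doing compared with the original plan, the last thing you want to say is "I don't know." You can avoid this by setting a baseline, a snapshot of the original schedule, before the project gets rolling.

If the current data never seems to line up with the baseline, take a close look at the original plan. For example, the project scope may have changed, or you may need more resources than you first thought. Check with the project stakeholders, and consider setting a new baseline using the procedure above.

Adding tasks to the project after setting a baseline, then updating the baseline

Add the new tasks and link them

Update the baseline

Confirm

Review the changes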

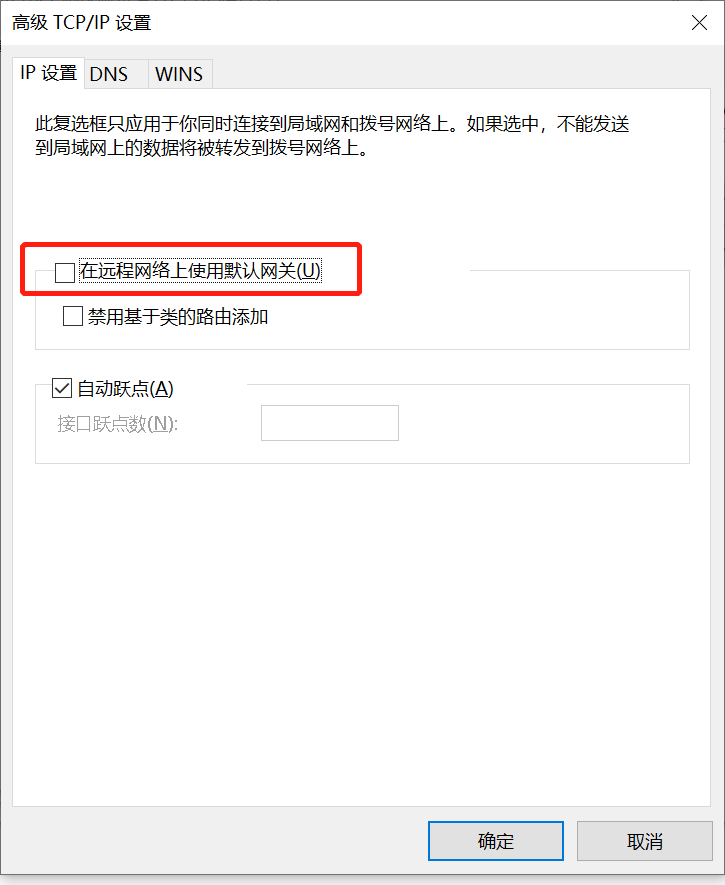

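When this option is unchecked, no local default route (that is, a route to 0.0.0.0 via the specified gateway) is added while the dial-up connection is being established.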

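Compressing task duration by adding work hours

Overtime hours are spread across the working-day hours and show up as a shorter "Duration" for the individual task. (Work increases, duration decreases.)

Overtime on a working day should be reflected in the task's "Actual Start", "Actual Finish", and task bar. (Duration decreases, and the task bar gets shorter.)

Overtime on a non-working day should likewise be reflected in the task's "Actual Start", "Actual Finish", and task bar. (Duration decreases; the task bar keeps its length or gets longer, because it now spans the two weekend days.)

Planned project finish date: July 25

Planned schedule compression: 1 working day

Overtime plan: task 3 is scheduled for 1 working day of overtime on Saturday

If overtime is worked to counter the risk of a task running late and the task then finishes on time, it is not recorded as overtime work. If the task still finishes late despite the overtime, the delay is reflected in the task's "Actual Finish".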

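Splitting a single task that has to be carried out in stages

This applies when a task is paused and later resumed after it has started and its progress has been updated for the first time.

Baseline start: June 27

Baseline finish: July 5

Duration: 7 working days

Actual start: June 27

Current progress: 20%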

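The task is paused from June 28, so split the task. (Duration is unchanged; depending on the actual proportion, the percent-complete bar may span the split in the task bar.)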

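When the task resumes, drag the remaining part of the task bar to the actual resume date (June 30) so that it reflects when the rest of the work actually starts. (Duration is unchanged.)

Drag the part of the remaining task bar that the progress bar does not cover to the actual start date (June 30). (Duration changes.)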

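Effect of changing the baseline on a task that has already started

Set the baseline

Baseline start: July 5

Baseline finish: July 11

Duration: 5 working days

Update the task after setting the baseline

Actual start: June 28

Percent complete: 30%

After changing the baseline (setting it again after the plan changed)

Baseline start: June 28 (taken from the task's current "Start")

Baseline finish: July 11 (the task is not finished, so no actual finish is set; this value is taken from the current "Finish")

Duration: 5 working days (unchanged)

Overall changes:

1. The current baseline bar gets longer.

2. The current task bar is cut at the point in time when the new baseline was set; its two parts align with the current "Start" and the current "Finish" respectively.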
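
The durations quoted in the notes above can be checked by counting working days between the baseline dates. The sketch below assumes a Monday-to-Friday calendar with no holidays and assumes the year 2022, since the notes give only month and day; the function name `working_days` is hypothetical.

```python
from datetime import date, timedelta

YEAR = 2022  # assumption: the notes do not state the year


def working_days(start: date, finish: date) -> int:
    """Count Monday-to-Friday days from start to finish, both inclusive."""
    count = 0
    day = start
    while day <= finish:
        if day.weekday() < 5:  # 0-4 are Monday to Friday
            count += 1
        day += timedelta(days=1)
    return count


# Split-task example: baseline June 27 to July 5.
print(working_days(date(YEAR, 6, 27), date(YEAR, 7, 5)))
# Original baseline of the second example: July 5 to July 11.
print(working_days(date(YEAR, 7, 5), date(YEAR, 7, 11)))
# Span of the baseline bar after re-baselining: June 28 to July 11.
print(working_days(date(YEAR, 6, 28), date(YEAR, 7, 11)))
```

Under these assumptions the first two spans match the 7 and 5 working days in the notes, and the re-baselined bar covers 10 working days while the duration field still reads 5, which is why the baseline bar looks longer.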

The Java web service runs as the unprivileged system user deployer

Check the default access control lists of specific system commands

[deployer@s4 ~]$ cd /usr/bin/

[deployer@s4 bin]$ getfacl curl wget scp sftp telnet

# file: curl

# owner: root

# group: root

user::rwx

group::r-x

other::r-x

# file: wget

# owner: root

# group: root

user::rwx

group::r-x

other::r-x

# file: scp

# owner: root

# group: root

user::rwx

group::r-x

other::r-x

# file: sftp

# owner: root

# group: root

user::rwx

group::r-x

other::r-x

# file: telnet

# owner: root

# group: root

user::rwx

group::r-x

other::r-x

[deployer@s4 bin]$

Deny the user deployer access to specific commands

[root@s4 ~]# cd /usr/bin/

[root@s4 bin]# setfacl -m u:deployer:--- curl wget scp sftp telnet

[root@s4 bin]# getfacl curl wget scp sftp telnet

# file: curl

# owner: root

# group: root

user::rwx

user:deployer:---

group::r-x

mask::r-x

other::r-x

# file: wget

# owner: root

# group: root

user::rwx

user:deployer:---

group::r-x

mask::r-x

other::r-x

# file: scp

# owner: root

# group: root

user::rwx

user:deployer:---

group::r-x

mask::r-x

other::r-x

# file: sftp

# owner: root

# group: root

user::rwx

user:deployer:---

group::r-x

mask::r-x

other::r-x

# file: telnet

# owner: root

# group: root

user::rwx

user:deployer:---

group::r-x

mask::r-x

other::r-x

[root@s4 bin]#
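
To confirm that every listed command really carries the deny entry, the getfacl output can be checked programmatically. The sketch below parses text in the format shown above and reports the files where a named user has the empty permission set; the function names are hypothetical and the sample is shortened to two files.

```python
def parse_getfacl(output: str) -> dict[str, dict[tuple[str, str], str]]:
    """Map each file name to its ACL entries: (tag, qualifier) -> permissions."""
    acls: dict[str, dict[tuple[str, str], str]] = {}
    current = None
    for raw in output.splitlines():
        line = raw.strip()
        if line.startswith("# file:"):
            current = line.split(":", 1)[1].strip()
            acls[current] = {}
        elif line and not line.startswith("#") and current is not None:
            # Entries look like "user:deployer:---" or "group::r-x".
            tag, qualifier, perms = line.split(":", 2)
            acls[current][(tag, qualifier)] = perms
    return acls


def denied_for(acls: dict, user: str) -> list[str]:
    """Return the files whose ACL gives the named user no permissions."""
    return [name for name, entries in acls.items()
            if entries.get(("user", user)) == "---"]


# Shortened sample in the format of the transcript above.
sample = """# file: curl
# owner: root
# group: root
user::rwx
user:deployer:---
group::r-x
mask::r-x
other::r-x

# file: wget
# owner: root
# group: root
user::rwx
group::r-x
other::r-x
"""

print(denied_for(parse_getfacl(sample), "deployer"))
```

In the sample only curl carries the entry, so wget would be flagged as still reachable by deployer through the other::r-x permissions.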

Errors shown when the unprivileged user deployer runs those commands

[deployer@s4 bin]$ curl

-bash: /usr/bin/curl: Permission denied

[deployer@s4 bin]$ wget

-bash: /usr/bin/wget: Permission denied

[deployer@s4 bin]$ scp

-bash: /usr/bin/scp: Permission denied

[deployer@s4 bin]$ sftp

-bash: /usr/bin/sftp: Permission denied

[deployer@s4 bin]$ telnet

-bash: /usr/bin/telnet: Permission denied

[deployer@s4 bin]$

Other commands that can be used

chmod chown chgrp